Listen Here! The Science of Sound and Hearing

We open our ears to the science of sound and hearing this week with a look at the genetic causes of deafness and how a deaf person's brain decodes sign language. We also hear how auditory illusions can fool you into hearing things that aren't there and meet a sound simulation system that can improve the clarity of railway station announcements and recreate the "cocktail party effect" to help build better hearing aids. Plus, we find out why light makes migraines more painful, how cleaner fish keep each other in check and, in Kitchen Science, Dave swaps Ben's ears around...

In this episode

00:11 - Why Light Makes Migraines Worse...

Why Light Makes Migraines Worse...

Scientists have discovered why light makes migraines worse, and the key to the breakthrough was the observation that some blind people also get relief by retreating to somewhere dark.

Rodrigo Noseda and his colleagues at the Beth Israel Deaconess Medical Centre in the US began by asking 20 blind people with migraines whether they experienced light-sensitivity or photophobia when they had headaches.

Surprisingly, some of them did and these were individuals who had sight-loss conditions like retinitis pigmentosa where the light-sensitive rods and cones degenerate, causing visual loss, but the rest of the retina remains healthy. On the other hand, people who were blind owing to congenital absence of their eyes or destruction or removal of the eyes didn't show this light sensitivity.

This suggested to the researchers that signals arising from the retina must be responsible. To find out how, they used dyes injected into rats to label the nerve cells that connect the retina to the rest of the brain.

They found a group of cells that connect to a region called the posterior thalamus. These nerve cells don't carry visual information but instead arise from a special group of retinal cells used by the brain to tell when it is light or dark in order to set the body clock.

But, the team found, the cells in the thalamus to which these nerves were connecting were also activated by pain nerves supplying the meninges, the layers that surround the brain and spinal cord and which are thought to become irritated during migraines and infections like meningitis.

So, by activating the same brain cells as the pain pathway, the light-signalling nerves boost the perception of pain.

Clinically, say the scientists, who have published the work in the journal Nature Neuroscience, this sets the stage for identifying new ways to block the pathway responsible, making migraines slightly less of a headache to endure...

02:52 - Keeping Cleaner Fish in Check

Keeping Cleaner Fish in Check

Have you ever caught someone just before they say something embarrassing? Did you give them a playful elbow? Well, it turns out that cleaner fish do something quite similar...

Cleaner fish are the little hangers-on you see on larger fish. And their name is self-explanatory, they clean the larger fish of parasites and dead skin cells. This 'dirt' is the cleaner fish's food and it keeps the host fish happy, or at least prevents them from eating their followers.

Now Nicola Raihani and her team have found that male cleaner fish will punish the female cleaners if they step over the line and start munching on the tastier host fish instead. The host fish has a much more nutrient-rich mucus on its skin, and cleaner fish would much rather eat that. But this risks offending the host fish, which might mean the cleaner fish lose their food supply altogether.

Writing in the journal Science this week, they tested this by offering the cleaner fish some fish flake feed and some more extravagant prawns. They trained the cleaner fish so that, if one took a bite from the prawns, all the food would be removed from the tank. Very quickly, the researchers saw that whenever a female cleaner took a bite from the prawns the males would punish her by chasing her away. And afterwards the females were much less likely to give in to their prawny temptation again.

I'm not sure what it says about male-female relationships. I know I get a telling-off if I reach for the chocolate. Perhaps I'm offending the god of good female figures? Raihani said "the males are less well behaved than the females a lot of the time but perhaps part of the reason the males are so likely to cheat is that females never punish males."

But it might tell us something about the evolution of human behaviour and how we came to monitor each other's behaviour for an overall benefit to the society. Raihani suggests that, as the male fish are essentially looking after their own stomachs first, this is how behaviour which benefits the group as a whole might have evolved.

Repairing Aldehyde Dehydrogenase 2 - ALDH2

Scientists have found a way to repair the activity of a defective enzyme that prevents some people breaking down alcohol and which may also hold the key to preventing heart attacks and Alzheimer's disease.

Up to 1 billion people worldwide, including 40% of east Asians, carry an altered form of a gene coding for an enzyme called aldehyde dehydrogenase 2 or ALDH2 for short.  This breaks down a chemical called acetaldehyde, which is one of the substances produced when alcohol is metabolised by the liver.

Shortly after consuming alcohol, individuals with the defective form of this enzyme develop symptoms of facial flushing, a rapid heartbeat and nausea, owing to the accumulation of acetaldehyde in the bloodstream. These individuals also have an increased risk of oesophageal cancer, Alzheimer's disease and having a worse outcome from a heart attack.

Indeed, higher levels of this enzyme in heart muscle are strongly protective against heart damage and, whilst studying this, scientists recently found a drug molecule called Alda-1 that seems to be able to boost the activity of the healthy form of the enzyme and also to repair the defective enzyme; but they didn't know how it was working.

Now, writing in the journal Nature Structural and Molecular Biology, scientists at Indiana University and Stanford University, led by researcher Thomas Hurley, have found out how it works, which could lead to a whole raft of new treatments.

The team have worked out the three-dimensional structure of the enzyme, at the core of which is a tunnel-like structure that breaks down molecules like acetaldehyde. But in the defective form of the enzyme this tunnel is the wrong shape, so the enzyme cannot function.

But when the Alda-1 drug molecule locks on, which occurs on a different part of the enzyme, it bends the enzyme, prising the tunnel open so that it becomes active again.

Now that scientists have discovered how the Alda-1 drug works, it should be possible to find other molecules capable of doing the job even better and with the potential to impact on many different diseases, including reducing the damage done by heart attacks.

Nothing like a good pair of nitric oxide socks

During these cold winter months you might like to strap yourself into some lovely fluffy socks, perhaps that your granny made you at Christmas. And now you can get special socks for donor organs and people with diabetes, according to a paper from Chemistry of Materials this week.

It's not quite putting livers in jumpers and hepatic veins in booties but chemists this week have described how they've created a special fabric that can deliver nitric oxide to donor organs.

Nitric oxide is great in preventing damage to organs which aren't getting enough oxygen. It's actually a molecule which many animal cells use to communicate with other cells. And one of the tasks nitric oxide performs is as a muscle relaxant, which means it can dilate blood vessels and increase blood flow. Actually, it's one of the signalling pathways that Viagra capitalises on.

So this fabric contains zeolites, which are molecular cages of aluminium and silicon oxides. And those cages will soak up gas molecules like nitric oxide and then release them in a controlled manner. The way they make the bandage fabric is to construct a water-repellent polymer, then embed some of these zeolites in it. They can control how fast nitric oxide is released by making the polymer more or less water repellent. So to get the nitric oxide flowing you just need to add moisture.

And the scientists working on this, Kenneth Balkus and Harvey Liu at the University of Texas, are solving a problem here that many have struggled with before in medicine. It's quite tricky to find reliable ways of storing and then delivering nitric oxide in a controlled manner. Because, as with many good things, too much is toxic.

So apart from wrapping donated organs ready for transplantation, the zeolite fabric could be used for people with diabetes, in whom it's been found that nitric oxide production is compromised. Wearing this fabric might increase blood flow in all sorts of extremities, and they could really benefit from some NO socks.

11:33 - The Genetics of Hearing

The Genetics of Hearing

with Professor Karen Steel, The Wellcome Trust Sanger Institute

Diana - We're joined by Dr. Karen Steel who works at the Wellcome Trust Sanger Institute and is looking at what can happen in our genes which could cause deafness. Hello, Karen.

Karen - Hello.

Diana - Let's start off with - are there many genetic causes of deafness?

Karen - Yes, there are. Our genome is a very common cause of hearing impairment in the human population. There are many, many different genes, any one of which can be affected, causing deafness. There are lots of different ways that genes can cause deafness. Sometimes, a person can have just a single gene that has a defect, a mutation causing deafness. In other cases, they could have a combination of a number of different variants of different genes that add together to give them a hearing impairment, including progressive hearing loss during their life. Or you can have genes that make you more sensitive to environmental damage like noise induced damage. Some people seem to be especially sensitive to noise induced damage. So genes can play a very important role in causing deafness and are probably involved in more than half of the cases of hearing impairment in the human population.

Diana - And how do you go about separating environmental causes of deafness from genetic?

Karen - Well, that's very difficult in a human population unless you have a large family with many generations where you can actually track the inheritance of a single gene causing deafness through the generations and most human families aren't like that. For that reason, we usually turn to an animal model and in this case, we use the mouse as a model because the mouse inner ear is almost identical to the human inner ear - it's just a little bit smaller. The mouse also has many different forms of deafness including many of the same genes involved in human deafness.

Diana - So, how many genes are linked to deafness which are the same in mice as they are in humans?

Karen - There are dozens and dozens. I mean, we know in the human population that there are over 130 different genes that can cause just simple deafness without any other signs of any other problem elsewhere in the body, but there are probably over 400 genes that include deafness as part of a whole set of different problems that a person might have. There are similar numbers of genes in the mouse that can cause hearing impairment, sometimes associated with other problems like visual problems for example.

Diana - So, I've got flagged up here that myosin 7a is a very important one that you look at. So, could you tell us a little bit about that?

Karen - Right. Myosin 7a is an important gene. It was the first gene that was identified to be associated with deafness and myself and colleagues, in London at the time, identified it first of all in the mouse. The great advantage of using the mouse in this case is that you need lots of offspring in order to study the inheritance of the mutant gene. And in the mouse, you can produce hundreds of offspring and therefore locate, on a particular chromosome very, very carefully, exactly where the mutant gene is and find it much more easily. So in that case, we could cut down the 3,000 million bases in the human genome, down to about 2 million bases. Now, 2 million bases, or 2 million subunits of DNA, is still an awful lot to search through for a mutation especially if it's just one of those bases that's different in the mutant allele, but it's still better than 3,000 million bases, so it's very helpful. You can only really do that sort of work in the mouse. We spent a number of years trying to find exactly where this gene was in a mouse mutant that had a hearing impairment and eventually we found it and it was a mutation in the myosin 7a gene.

Diana - And how did the myosin 7a gene actually affect the mechanical aspect of hearing?

Karen - The myosin 7a is a motor molecule. It's called a motor molecule. It's like myosin in muscle, there are lots of different myosin molecules and they're all thought to be motor molecules. And they walk along little tram lines in cells called actin fibrils, so there are lots of actin fibrils like little train lines, going all around the cell and the myosins are thought to be like the trains, just carrying cargo from A to B within the cell. But in the case of the sensory hair cells which is where myosin 7a is expressed, the sensory hair cells are the cells that change the mechanical energy, the vibration of sound into a nervous impulse that leads to the brain. In these hair cells, the myosin 7a is located just underneath the cell membrane on little projections called hairs, that's why hair cells are called hair cells. They're like hairs or little fingers sticking out of the top of the hair cell. And the myosin 7a is located just underneath the membrane. Now, these hairs are really critical for normal hearing because when the vibration bends that bundle of hairs back, then tiny links in between these hairs are pulled and they pull open a channel which leads ions to flood into the cell, causing a voltage change, and that triggers the synaptic activity and a nervous impulse at the other end of the cell.

The hair cell in a normal person detects tiny, tiny movements - less than a nanometre. So that's really, really tiny - a millionth of a millimetre if you can imagine such a tiny movement. And so, in order to pick up such a tiny movement, this channel that is opened by these links, tugging at these links, has to be held very, very firmly in place and our experiments in the mouse mutants have shown that we think that the myosin 7a, instead of acting as a train carrying cargo around the cell, instead of that, it's actually acting as an anchor. So there are lots of actin fibrils inside these finger-like processes and the motor end of the myosin 7a binds to that, and the other end, instead of holding on to a cargo holds on to the cell membrane and keeps it very, very firmly in place so that the whole structure is ready to receive tiny, tiny movements. And if that molecule is not there, then the membrane is very floppy and so, it's very, very much more difficult to open that channel by tugging at it because you just pull at the membrane rather than just pulling at the channel itself.

Diana - I see and is it only myosin 7a that does that? Are there any others?

Karen - Well, there probably are other molecules. We know that there are lots and lots of other molecules involved in normal hearing processes, but we really have great difficulty in finding these and genetics is a way, is a tool, for finding those genes and those molecules and finding out more about the molecular basis of normal hearing function. The key thing about myosin 7a, if I could also say this, is that we found it first in the mouse and then we got in contact with our colleagues who work on human deafness and they very, very quickly, having a candidate gene, were able to find that there are mutations in human families with Usher syndrome, and Usher syndrome is a tragic disease where children are born deaf. They have a balance problem which means they develop their motor function late. They learn to walk late in life and then by the time they're about 10, they start to lose their vision as well through retinitis pigmentosa or degeneration of the retina. So, it's a very sad disease for children to have and it's very sad for the whole family. And we were able to find these mutations and find the cause for a large proportion of the cases of Usher syndrome in the human population, and that was very, very useful for the families involved who really wanted to know what the problem was.

Diana - So now that we have these genes, what does that mean next for deaf people? Does it mean we might find a solution to deafness in the end? Is that even possible?

Karen - That's a very interesting question. There are lots of genes, hundreds of genes involved in deafness and we only know a few of them so far, so we do need to understand what they're doing and we need to understand how they're involved in deafness in the population as well. I think when we get to that point, when we have a better understanding, then we'll be able to think about therapeutic interventions. And particularly that's going to be important for people with progressive hearing loss, age-related hearing impairment, it's called. For those people, if we can intervene and stop their hearing getting any worse, that will be a great benefit to a lot of people. So, I think that that's the way forward, but we do need to find the rest of the genes, find out which ones are involved in the human population and understand what it is they're doing in the ear before we can think of ways of replacing their action if they're not acting properly.

20:54 - Analysing Acoustics and the "Cocktail Party Effect"

Analysing Acoustics and the "Cocktail Party Effect"

with Jens Holger Rindel, Odeon A/S and Jorg Buchholz, Technical University of Denmark

Chris - Now, in the past, buildings weren't necessarily designed with the acoustics in mind, which means if you take old structures, like railway stations or concert halls, and then you put in a fancy new electronic PA system, the results can be quite poor quality at best, or maybe unintelligible echoes at worst. And that's largely because you don't know beforehand how to compensate for the intricacies of the architecture and then the presence of people and the furniture. But what if you could use a computer system to simulate what you would hear if you were sitting in any part of the building, listening to the sound system that you're planning to put in?...

Jens - My name is Jens Holger Rindel and I'm working for a company Odeon A/S in Denmark where we developed room acoustic software. The software simulates the sound in a space and it can be used for concert halls, operas, theatres, open plan offices, industrial halls, and a lot of places where acoustics is important.

Chris - So, is the basic premise then that if someone's going to build a building or put in some infrastructure, without having to put in the infrastructure they can use your program to work out what it will sound like in that structure? So, let's take an example of, if I'm building a concert hall and I want to work out where to put my speaker system, I can work out how best to arrange the speakers, so that everyone sitting anywhere in the concert gets the best reproduction of the sound?

Jens - Yes and these results can be calculated, covering all possible positions of the audience, so you can easily see from the results how even the acoustics is in the hall and if there are any bad spots, that should be examined further.

Chris - Is the program basically using a model of the structure? So, do you have to feed in a sort of rendition of the arrangement of the building? Where the walls are, where the seats are, so that the program basically sees a virtual construction of the area that you're studying and it uses that to work out how the sound would be experienced within that structure?

Jens - Yes, that's correct. The first step in the modelling is to make a virtual model of the space. The most efficient way can be to simply have the architect's 3D model, then it may be transferred and imported to the Odeon acoustic software. Then assign the sound absorption from the surfaces and scattering of sound which has something to do with the roughness of the structures, and then it's ready to do the acoustic simulation.
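As a rough illustration of why the absorption assigned to each surface matters so much, here is a minimal sketch using Sabine's classic reverberation formula, RT60 = 0.161 x V / A, where V is the room volume in cubic metres and A is the total absorption (each surface area multiplied by its absorption coefficient). This is not the method Odeon uses, which the interview does not describe; the hall dimensions and absorption coefficients below are invented purely for the example, and the function name `sabine_rt60` is hypothetical.

```python
def sabine_rt60(volume_m3, surfaces):
    """Estimate reverberation time (seconds) with Sabine's formula.

    surfaces is a list of (area_m2, absorption_coefficient) pairs.
    """
    # Total absorption: sum of area times absorption coefficient.
    total_absorption = sum(area * alpha for area, alpha in surfaces)
    if total_absorption <= 0:
        raise ValueError("total absorption must be positive")
    return 0.161 * volume_m3 / total_absorption


# Hypothetical hall, 20 m x 30 m x 10 m (all values are assumptions).
volume = 20 * 30 * 10            # 6000 cubic metres
floor = ceiling = 20 * 30        # 600 square metres each
walls = 2 * (20 * 10) + 2 * (30 * 10)  # 1000 square metres

# Case 1: every surface is hard and reflective (coefficient 0.05).
hard = sabine_rt60(volume, [(floor, 0.05), (ceiling, 0.05), (walls, 0.05)])

# Case 2: same hall with an absorbent ceiling (coefficient 0.6).
treated = sabine_rt60(volume, [(floor, 0.05), (ceiling, 0.6), (walls, 0.05)])

print(f"hard surfaces:     {hard:.2f} s")
print(f"absorbent ceiling: {treated:.2f} s")
```

Because the volume sits in the numerator, a very large space tends to have a long reverberation time unless a great deal of absorption is added, which is why a simple estimate like this can only hint at what a full simulation of the space would show.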

Chris - Have there been any situations where people are taking your Odeon software and using it to inform either the ground up creation of a building space or putting in sound systems in existing building spaces?

Jens - Well, one example could be a recent use in the Copenhagen Railway Station. It has recently got a new loudspeaker system and Odeon was used to predict the speech intelligibility that could be obtained for this new system.

Chris - In other words, to combat the age old problem of, you can't hear the train wherever you're going is departing from platform 3, five minutes ago because you missed it?

Jens - Yes, exactly.

Chris - So how would you go about solving a problem like that then?

Jens - In this case, the problem could not be solved by bringing down reverberation in the hall because it has a very large volume. So, it was solved instead by introducing line array speakers which are very tall columns of speakers and this is exactly what we can model in the Odeon software. This allows us to calculate how good the sound system is going to work and it's possible then to choose positions as a listener and try to listen to some sound samples, and that's what they have prepared for this. The first one represents that you are standing in the middle of this very big central station. There's a lot of people around as background noise and, 2 metres in front of you, there's a lady talking to you directly...

Copenhagen Central Station Natural Sound

Chris - So she's clearly a mathematician as much as anything else, but what is that revealing?

Jens - Well, you are able to understand her, but it's very obvious that this happens in a very big place and a very reverberant place.

Chris - And so, what would be the consequence of putting in a whole bank of speakers into that space? I mean, you just end up with echoes you can't understand presumably?

Jens - Yes. I then modelled the new speaker system, but before we listen to the whole system, I should like to give a second example, that we just turn on one of the speakers to give an idea of how the sound would be if you have a sound system which is not well planned...

Copenhagen Central Station Original Sound

Chris - So that's the experience we've all had at the railway station, isn't it? We just can't hear what the message is.

Jens - Yes, that's right.

Chris - So, what can we do about this?

Jens - We can then design a proper loud speaker system and with sufficient number of speakers, in this case it was 20, it's much better than what you normally would experience in such a place...

Copenhagen Central Station Improved Sound

Chris - So you must agree, that does sound a whole lot clearer. The whole thing was actually done using a computer simulation of the station and then using that simulation to work out where to put the speakers, to achieve the maximum intelligibility for the people using the station. I was talking there to Jens Holger Rindel who wrote the Odeon software that actually does that modelling.

Chris - Now another person using this system is hearing researcher, Jorg Buchholz at the Technical University of Denmark. He uses Odeon to recreate standardized noisy environments in his laboratory to try to get to the bottom of what's called 'The cocktail party effect.' This is the fact that even despite huge amounts of background noise, we can usually follow a conversation quite easily. But people with hearing impairments actually find this really very difficult, but until we can find out why they find it so difficult, it's really hard to make hearing aids that can compensate.

Jorg - Normal hearing people usually have no problem in communicating in cafeterias or other noisy places, but the hearing impaired often do have severe problems in such situations and hearing aids often do not really help and that's exactly what we're interested in. Why are normal hearing people so good in doing that? Why do hearing impaired people have these problems? And why or how can hearing aids help in these situations?

Chris - So, how are you trying to address that problem? How can you solve it or investigate further what's going on?

Jorg - First, we have to understand what goes wrong and there are different aspects in it. But for us, we are interested in real life stimuli which is usually quite complicated to look at because we can't follow a person around the city all the time. So what we do is try to get the city situation or cafeteria situation into the laboratory, and then we can measure speech intelligibility in such environments or aspects like distance perception and so on. We can measure in our laboratory space and this would be as close to real life as possible.

Chris - I see. So you're bringing the cafeteria or the noisy station or whatever, to the lab by creating it artificially, but you can put the hearing impaired subject in that environment and see how they react and respond to that environment, what they can hear in that environment, and then you can tweak that environment in very standard ways to work out what is going on.

Jorg - That's exactly what we're doing, so we have lots of loud speakers in the room. We create signals using Odeon and we set the person in the centre of this loud speaker array and we even put some - as we call it a 'master hearing aid' on. So this is a computer platform which we can fully control. So, we have the entire system, we can basically control the acoustic environment, the sound sources in there, but also the signal processing in the hearing aid that the subject is listening through in that environment.

Chris - And presumably, what that will enable you to do is to then work out what aspects of the acoustic environment are bad for a standard hearing aid or bad for a hearing impaired person and work out how to adjust the hearing aid's function to compensate, so the person hears better?

Jorg - That's exactly what we do. For example, early reflections in a room are very, very dominant and in many cases, people consider this as disturbing, but actually for speech, it helps and hearing aids often destroy these early reflections which might not be the most good thing to do.

Chris - Is that a discovery you've only just made, so that hasn't actually filtered down into the hearing aid market yet effectively? Is that something that has yet to break-in and be implemented?

Jorg - Hearing aid companies try these things, but I think it is now the time when you have this transition, where we basically try to go from the simple laboratory into a more realistic environment because there's a difference in how hearing impaired people with hearing aids perform in a laboratory, so how we fit, how the audiologists fit hearing aids, and how they then are used in real life. This gap has to be bridged somehow and that's what we do now. So we have the environments - the loudspeaker-based environments, the simulation software and so on, so that we now can do these things and the hearing aid industry is picking up on that right now.

31:02 - Language in the Deaf Brain

Language in the Deaf Brain

with Dr Mairead McSweeney, Institute of Cognitive Science, UCL

Chris - This week, we're talking all about the science of hearing and sound, but hearing isn't just down to your ears. The brain plays a crucial role so now, we're joined by Dr. Mairead McSweeney who's from the Institute of Cognitive Science at University College London. That's where she works on looking at how a deaf person's brain deals with language. Whether that's sign language or lip reading or reading from text, and she's with us now. Hello, Mairead.

Mairead - Hello.

Chris - Tell us a little bit about the deaf person's brain. How does it differ or not from someone who is normally hearing?

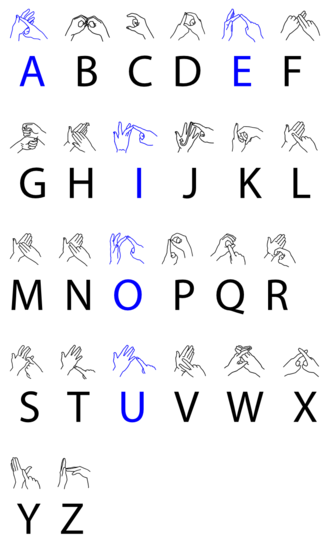

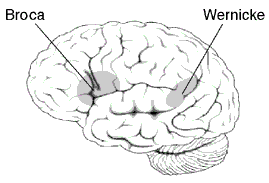

Mairead - Well, to date, only a few studies have actually been done to look at the structure of deaf people's brains and perhaps surprisingly, these studies suggest very few, if any, anatomical differences between deaf and hearing brains. So for example, we might wonder what happens to the part of the brain that processes sound in you and I, the auditory cortex, and might predict that this may be atrophied in people who were born deaf. But that doesn't seem to be the case. So, in people born deaf, the size of their auditory cortex seems to be the same as in hearing people. What it's actually doing is a different question, which I can perhaps tell you more about later if you're interested. And when we think about function and how the brain processes language, very similar neural systems seem to be recruited by deaf and hearing people. So in hearing people processing spoken language and deaf people processing - in our case British Sign Language, which is a real language and is fully independent of spoken English - then the same neural systems seem to be recruited. And these are predominantly located in the left hemisphere of the brain.

Chris - So the same bits of a brain that would be decoding language if you were listening to it are being used to decode language arising through other means of communication?

Mairead - That's exactly right, yes. So, we are comparing languages coming in in very different modalities, so we've got auditory verbal speech, and then we've got visuo-spatial sign language, and the same systems seem to be recruited. And of course, if we think about it, we as hearing people also deal with visual language, so you mentioned lip reading, we see people's faces when we speak or we might be reading text, but these are all based on spoken language and we have that auditory system that is involved in processing spoken language and these visual derivatives are then built upon that system, whereas with sign language of course, we're looking at something that doesn't have any auditory component. So the fact that the same systems are used for spoken language and sign language is very interesting. It tells us that what the brain is doing is saying there's something important about language that's recruiting these regions in the left hemisphere.

Chris - I was just going to say, how does the brain know this is language and I have to present this information to this other bit of the brain whose job it is to decode language and then parcel it out to the other bits of the brain that then do other aspects of linguistic processing, working out what verbs mean, what the nouns mean, what colours mean, and so on.

Mairead - Well, that's the big question that we're working on really! So it's one thing for us to say this system in the left hemisphere, involving certain parts of the brain that have been identified for a long time - Broca's area and Wernicke's area being involved in language processing - is also involved in sign language, but the next step for our research is really, what is it about language and the structure of language that is important for these regions? What is it that is critical and what is it that these regions can do in terms of symbolic processing, or whatever it might be, that is important for language processing? So that's the next step for our research.

Chris - And you're doing this with brain scanning, so you put people with a hearing impairment of presumably different lengths of time - people who've been born deaf versus people who have acquired forms of deafness - who have learned alternative means of communication, and you look at how their brains respond to different stimuli?

Mairead - Yeah. We're using brain scanning as you say. So we use something called functional Magnetic Resonance Imaging (fMRI) where we can get an indirect measure of blood flow which tells us which parts of the brain are being used when we show different people stimuli, whether it may be sign language or visual speech or written text. But actually, we haven't yet compared people who were born deaf with people who become deaf later in life, most of our work is concerned just with people who are born profoundly deaf. But looking at all of these different groups can address very important questions. So looking at people who have become deaf later in life will be something we'll do in the future because it all tells us about our critical question, which is how experience shapes the brain and how plastic the brain is in responding to changes in its environment.

Chris - And if you look at people in whom the opposite side of the brain is the dominant one because the majority of us are right handed, which means the left side of the brain is the dominant hemisphere and that's usually where language is. If you look at people in which that process is reversed, do you also see the sign language and so on being shifted across as well? Is there always this association between the language bits of the brain and the interpretation of things like sign language?

Mairead - Well, that's a good question actually and it's something we have just put in an application to get money to look at! So actually, looking at sign language processing in deaf people in this way, there's maybe 20 studies that have been published in this area. All have focused on people who are right handed, so we want to have consistency across the people that we're looking at. So in fact, there are no studies looking at deaf people who are left handed and looking at the regions that they use in processing language, but that is something that we plan to do in the future.

Chris - Brilliant. Well, good luck with it Mairead and do join us when you do discover how it is that the brain manages to puzzle out these different bits of information, and know that they're all about communication and therefore, to put them into the right brain area.

Mairead - Will do.

Chris - Great to have you on the program. That's Mairead McSweeney who is from UCL, University College London explaining how a deaf person's brain can process sign language in a very similar way to how a hearing person's brain processes spoken language.

37:57 - Auditory Illusions

Auditory Illusions

with Bob Carlyon, University of Cambridge

Diana - Have you ever listened to a piece of music or speech and not heard the same thing as the other people around you? Or, others around you have understood a sound when all you've heard is noise. That's definitely happened to me! And have you ever noticed that when you first walk into a busy pub or bar, you struggle to hear the person you're with? Yet, after a few minutes, you can hear that person clearly. Well, these changes and differences in our hearing could all be called auditory illusions. And this week, Meera Senthilingam met up with Dr. Bob Carlyon from the Medical Research Council's Cognition and Brain Sciences Unit to find out how our hearing can create illusions.

Bob - Well, I think a simple way of thinking about how we process sound is that the brain's a bit like a tape recorder, so we start off hearing a sound from the beginning, go through to the end and we pretty much get a veridical representation of what's going on. But a lot of the time, the sound coming to us is ambiguous, because the sound is often obscured by other sounds or affected by context, so that sometimes, we can hear two sounds which are physically identical, but perceive them as being quite different from each other.

Meera - So these are things that we hear in our everyday lives, and they count as illusions?

Bob - That's right and sometimes we find that simply knowing what we're listening to can actually affect whether we hear something accurately or indeed at all. We can sometimes find that the percept of the sound will change over time and sometimes we find that bits of a sound are missing because there are other people talking or people clattering in the background or a plumber banging on the radiator. And we find that the brain is capable of filling in this missing information.

Meera - We're going to listen to a couple of examples of these illusions now. And the first one we're kicking off with is one that I actually helped you make earlier in the week.

Bob - That's right, yes. So, Meera sent me a recording of her saying a very important question which I think faces us all today and what we've done is we've processed this in a way which severely distorts it. So we would just like to play this to you and see if you can figure out what Meera is saying...

Meera's Distorted Question

Bob - So this is actually speech which has been processed in a way which simulates the information available to a deaf patient with a cochlear implant. But of course, in everyday life, we hear lots of other types of distortions. For example, if you're trying to listen to a platform announcement in a railway station in the United Kingdom, you'll be aware that there's often huge amounts of reverberation and distortion which makes sounds really hard to listen to. Quite often, even if you get three or four chances to hear it, you don't really do any better at picking up what's being said. So, if we now play you the sound which has not been distorted, in Meera's original form:

Meera's Original Question

Meera - Okay, and then so, having listened to the real version, can we listen to the distorted one again?

Bob - Absolutely.

Meera's Distorted Question

Meera - And I bet most of our listeners must now understand what I said in the first place and I just need to answer that: no, the Naked Scientists aren't naked, but I thought that was a good way to get the question answered out there! So, distorted speech, what does this really tell you about the way we hear things?

Bob - The distorted signal which was reaching your ears, because the information was greatly degraded, could in fact correspond to one of a number of different utterances. And so, it's quite hard for the brain to map it on to all of these individual utterances and to try and decide which is the correct one, but once you've got the right information, you're just doing a simple one-to-one mapping.

Meera - This kind of thing, it could not even matter how many times you hear it. It's really just a knowledge of what it says beforehand that can really help you to understand it?

Bob - That's right, yeah. I think what's quite interesting is when you do that, it's not that you hear the sound and then tens of seconds later, you figure out what it must have been. The percept really just pops out at you as something like a true percept and it's then really quite hard to imagine how you ever failed to understand what was being said in the first place.

Meera - And why do you think our hearing works like this?

Bob - Well, it's simply because the information with which we're presented is often highly ambiguous and distorted and in real life, we know that our brains do have lots of information, whether it's about the nature of the language we are speaking or about the familiarity we have with the person's voice or what words are likely for somebody to follow one from the other. And so, it would obviously make sense for the brain to use the information which it has. And what's quite interesting as I say is that it doesn't feel like your brain's really using that information when you're listening to these sounds and lots of other illusions we have. The sounds seem perfectly natural and convincing which is of course the way it should be. You wouldn't really want, every time that your input was a bit distorted, to think 'Ooh wasn't that clever how I managed to do that?' You want it to be natural and straightforward.

Meera - Now, another illusion you have is more about our attention and how much attention we're paying to what we're hearing.

Bob - That's right, yes. So there's an interesting sequence of sounds which is often used to study how we perceive melodies in music or how we organize things sequentially, for example tracking one person's voice in the presence of another. And the sequence is actually quite simple. It's just a repeating sequence of tones of frequencies A and B, and they go in this sort of little galloping rhythm, or repeating rhythm, A-B-A, A-B-A.
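As a rough illustration of the repeating A-B-A sequence Bob describes, the following Python sketch builds the triplet pattern as raw audio samples. The tone frequencies, the tone duration, the sample rate and the silent gap after each triplet are all assumptions chosen for illustration; the transcript does not state the values used in the actual demonstration.

```python
import math

SAMPLE_RATE = 16000  # samples per second (assumed value)


def tone(freq_hz, dur_s, sample_rate=SAMPLE_RATE):
    """Return a sine tone as a list of floats in the range [-1, 1]."""
    n = int(round(dur_s * sample_rate))
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


def silence(dur_s, sample_rate=SAMPLE_RATE):
    """Return a run of zero-valued samples."""
    return [0.0] * int(round(dur_s * sample_rate))


def aba_sequence(freq_a=500.0, freq_b=700.0, tone_s=0.1, repeats=10):
    """Repeat the triplet A, B, A followed by a silent gap (assumed layout)."""
    triplet = (
        tone(freq_a, tone_s)
        + tone(freq_b, tone_s)
        + tone(freq_a, tone_s)
        + silence(tone_s)  # the gap is an assumption that gives a galloping rhythm
    )
    return triplet * repeats


samples = aba_sequence()
print(len(samples) / SAMPLE_RATE, "seconds of audio")
```

Raising `repeats` gives a longer sequence, and widening the gap between `freq_a` and `freq_b` separates the two tones further in frequency.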

Short Sequence

Bob - Then what we find is that for most people, if you listen to that sequence repeating over and over again for a few seconds, you lose the galloping percept and it sort of splits into two streams, each of which sounds a little bit like Morse code.

Long Sequence

Meera - Yes, you really can notice the two tones and it just sounds like an engaged tone over the phone or something.

Bob - That's right, yes. And it's used in a slightly more interesting concept, by composers of polyphonic music, who want to have one instrument playing two melodies. So provided you alternate the sequences of notes, which are quite far apart in frequency, fast enough, then you can play two melodies with a single instrument.

Meera - So I guess in situations like that though, which one's the illusion and which one's correct? Or are they both just correct, and what you perceive depends on how much attention you're paying to what you're listening to?

Bob - Well, it certainly depends on how much attention you're paying, because what we've shown is that if you divert somebody's attention for the first part of a longish sequence, either by playing another sound or giving them something to look at or even asking them to count backwards in threes, then when they start paying attention to the sounds again, it's like they've just started. In other words, they would hear the galloping rhythm even though the sequence has been on for some length of time.

Meera - Okay, so Bob, you've used all of these samples of different illusions and experimented with quite a number of people. So, what can you actually take away from this now and what have you learned about human hearing?

Bob - Well, I think there's a few points. I think one of them is that the brain can use its top-down knowledge about the world and about what we're saying to affect what we hear and to deal with missing information, such as when sounds are momentarily masked. We can use the way we attend to sounds to affect the way in which we hear them, and we can even use sounds which are later on in the sequence to affect the way in which we perceive sounds occurring earlier on. Many automatic speech recognition algorithms, such as you might hear when you're on the phone to your bank, don't use that information and we know that they're notoriously susceptible to interfering noise or different accents. So, despite all those technological advances, we're much better than even the best computer programmes.

In what language do deaf people think?

We put this question to Mairead McSweeney:

Mairead - Yes, so just as he thinks in Spanish and I think in English, so if you're a native user of British Sign Language, you would think in British Sign Language. So then the question really is, well, what's the nature of that thought? What's it like? And so, it can be visual or it could be manual, so motoric, and so we can use different methods in the lab to try and get at that question by using different interference techniques. And so, it seems to be a bit of both. There seems to be more weight towards a motor representation that people use in their minds and their thinking in sign language.

Chris - So they would literally see themselves doing the thing rather than think it through talking to themselves doing it like I might for example.

Mairead - Well, no. That would be a visual representation but there's more weight towards the motoric representation would be more like feeling themselves do it. It's just like you feel you hear yourself speak, if you like. A motor representation of the movement would be the type of representation that they might be bringing up when thinking.

Diana - So it's just like imagining eating chocolate in my head. I can feel the sensations.

Mairead - Yes, exactly!

53:06 - What is the advantage of a cochlear implant?

What is the advantage of a cochlear implant?

We put this question to Karen Steel:

Karen - Cochlear implants are usually used for people who have very severe or profound hearing impairment where a hearing aid isn't very much use to you because you don't have enough hearing left. Whereas hearing aids are used for a different group of people, a much larger population of people who have mild or a moderate hearing impairment where amplifying what they can hear is of benefit to them. Basically, that's what a hearing aid does - it amplifies the sound.

Chris - Because the cochlear implant (invented in Australia a little while back) is a series of electrodes that directly stimulates the nerves that connect the cochlea to the brain, whereas the hearing aid is relying on putting bigger vibrations in to the ear, to make the hair cells vibrate a bit more.

Karen - That's right. The hearing aid doesn't do anything biologically to your inner ear and your sensory hair cells are still there and doing the best they can. But the cochlear implant is actually placed inside your ear, so it involves surgery and it's quite likely to damage the natural hearing ability that you might have because it directly stimulates the nerve endings.

Why is tinnitus related to age?

We put this question to Karen Steel:

Karen - Well, tinnitus is a ringing or noises that you can perceive. Sometimes it sounds like it's really coming from outside. Sometimes it sounds as if it's coming from inside your head. It's a sign that either the sensory hair cells or something in your neurons is activating at abnormal times when there isn't a sensory input. That can happen because there's something going wrong with the neuron or something going wrong with the hair cell and that can be an early sign of damage. And so, if you come away from a loud concert and you find your ears are ringing, you've got the first signs of damage and you really shouldn't do it. So, why is it brought on by low levels of background noise? I don't think anybody really has an answer to that and every person's tinnitus is very different, so there's no general answer to that.

Why does the sound of nails on a chalkboard bother us so much?

Chris - The only, and the best, explanation I've heard of this is that the frequency distribution that's emitted by the nails going down the chalkboard is very reminiscent, at very high frequencies, of distress sounds produced by other animals. This plugs in to the primeval response in all of us of an animal in distress. For this reason, we experience this sound with an "Ouch! I don't like it! I have to wake up and take notice!" reaction.

55:50 - How do you measure carbon dioxide emissions?

How do you measure carbon dioxide emissions?

We put this to Gregg Marland at the Environmental Sciences Division, Oak Ridge National Laboratory in the US:

Gregg - Actually, I think there's a misconception that CO2 emissions are measured. What you try to do is to measure how much fuel is burned and if you know how much carbon is in the fuel, you can calculate how much CO2 must be produced, and very seldom is that, in fact, measured. Although there are some large power plants in which they actually put measurement devices in the smoke stack and can measure the amount of CO2 that comes out, that is unusual. The Intergovernmental Panel on Climate Change has published a five-volume set of guidelines that all the countries now use, as part of the UN Framework Convention on Climate Change, for estimating emissions of all greenhouse gases and it does produce uniformity across countries. The error margin depends on the country and on the greenhouse gas. I think the interest is partly in carbon dioxide emitted from energy systems, and in that case, it really depends on how much a country invests in collecting energy statistics. For countries like those in the EU or the US or Japan, my guess is that the error margin is something in the order of plus or minus 5%. For those discharging smaller quantities of CO2, the error bars, I think, can be as high as 20 to 25% and there are some very large countries - in China, we've actually published the estimate that they are maybe as large as 15 or 20%.
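The fuel-based estimate Gregg outlines can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not the IPCC guideline method: the function names, the fuel quantity and the carbon fraction are hypothetical, and the sketch assumes that all of the carbon in the fuel ends up as CO2. The one fixed quantity is the mass ratio of CO2 to carbon, which follows from their molar masses (about 44.01 and 12.011 grams per mole).

```python
# Mass of CO2 produced per unit mass of carbon burned (ratio of molar masses).
CO2_PER_CARBON = 44.01 / 12.011


def co2_from_fuel(fuel_tonnes, carbon_fraction):
    """Estimate tonnes of CO2 from tonnes of fuel burned.

    carbon_fraction is the share of the fuel's mass that is carbon
    (a hypothetical input; real values depend on the fuel). Assumes
    complete oxidation of that carbon to CO2.
    """
    return fuel_tonnes * carbon_fraction * CO2_PER_CARBON


def with_error_margin(estimate, margin):
    """Return the (low, high) range for a plus-or-minus fractional margin."""
    return estimate * (1 - margin), estimate * (1 + margin)


# Hypothetical example: 1,000 tonnes of a fuel that is 85% carbon by mass,
# with the plus or minus 5% margin quoted for well-resourced statistics.
estimate = co2_from_fuel(1000.0, 0.85)
low, high = with_error_margin(estimate, 0.05)
print(f"{estimate:.0f} tonnes CO2 (range {low:.0f} to {high:.0f})")
```

The same range calculation with a margin of 0.20 or 0.25 shows how much wider the uncertainty becomes for countries with weaker energy statistics.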

Diana - And Gregg also said that a major problem countries face in adding up their CO2 emissions is where to charge them if the fuel is bought in one country, but burned in another. So, if you board a plane in the UK bound for the USA and the fuel has been bought in the UK, but it's expended over the Atlantic, whom do you charge the CO2 to? At the moment, the international convention is to charge the CO2 to no one and they estimate about 3.1% of global emissions don't appear in international accounts. And that's on the order of a billion tons of CO2.
