How does radio reach out of the studio? This week, we tune in to explore the science and technology of broadcasting to find out how a voice hits the airwaves. We discover the difference between AM, FM and DAB, and use basic physics to build our own microphone. Plus, the 7000 year old cheese and the surprisingly simple solution to a box jellyfish sting.

In this episode

01:36 - The Basics of Broadcasting

The Basics of Broadcasting

with John Adamson, BBC Production Editor, London and South East

This week, the world celebrated Sound Engineer's Day, but can you guess what day this was? Well, it was on 12-12-12 of course, or 1,2;1,2;1,2! Joking aside, behind the thousands of broadcasts that are happening all around the world at any one moment, there's an invisible army of engineers who make the whole process happen, so that sound waves leaving a presenter's mouth can be captured, converted into electromagnetic signals, beamed through the air, and then picked up by your radio, so that you can listen.

To find out how, we're joined now by John Adamson, who's the BBC Production Editor for London and the South East, and he's known colloquially as "the Digital Doctor". Hello, doc.

John - Hello. Good evening, to you.

Chris - Good evening, John. So did you celebrate Sound Engineer's Day?

John - I was very busy at work, dashing back and forth between London and Tunbridge Wells, and unfortunately, I missed that moment. I was hoping to catch 12:12:12, 12/12/12 on the screen, but a phone call came in and I missed it. Isn't that dreadful?

Chris - I intended to observe it too, but then I think something cropped up on the television, another form of broadcasting. So tell us John, when I'm speaking, what actually is the journey between this studio where I'm sitting and a radio in someone's car or their living room?

John - Starting at the beginning, your voice generates mechanical vibrations that have to be picked up by something called a transducer and that would be a microphone, and that converts the mechanical energy into electrical energy. That tends to be an absolutely tiny signal at that point, so you can't do a great deal with that. You need to amplify it so it's useable.

The next thing you would find in the transmission chain is an amplifier that would boost the signal by probably around 70 decibels. So, in the trade, we work at 0 decibels as a standard level for everything we do in broadcasting; that's just under a volt of audio. If you imagine a microphone produces -70dB or thereabouts, this is brought up to 0dB.
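To put rough numbers on those levels, here is a minimal sketch that converts decibels to voltages. It assumes the figures quoted are in dBu, where 0 dBu corresponds to about 0.7746 V RMS (which matches "just under a volt"); the function names are illustrative only.

```python
DBU_REFERENCE_VOLTS = 0.7746  # assumed: 0 dBu reference level in volts (RMS)


def dbu_to_volts(level_dbu):
    """Convert a level in dBu to RMS volts."""
    return DBU_REFERENCE_VOLTS * 10 ** (level_dbu / 20)


def gain_db_to_voltage_ratio(gain_db):
    """Convert an amplifier gain in dB to a voltage multiplication factor."""
    return 10 ** (gain_db / 20)


mic_level_dbu = -70   # approximate microphone output quoted in the interview
gain_db = 70          # amplifier gain quoted in the interview

mic_volts = dbu_to_volts(mic_level_dbu)
line_volts = mic_volts * gain_db_to_voltage_ratio(gain_db)

print(f"Microphone output: {mic_volts * 1e6:.0f} microvolts")
print(f"Voltage gain factor: {gain_db_to_voltage_ratio(gain_db):.0f}")
print(f"After {gain_db} dB of gain: {line_volts:.4f} V")
```

On these assumptions, 70 decibels of gain multiplies the voltage roughly three thousand times, which is why the raw microphone signal is too small to use directly.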

Then of course, we want to do more than just broadcast the microphone, as much as it's nice to hear your voice of course! Probably, other things you want to hear like CDs, vinyl, maybe cassettes, mini disc, mp3 players, things like that - all sorts of audio reproducer systems in the chain these days. So, they would be fed into an audio mixer and that's just a clever system of mixing what you want to hear and routing it to the transmitter. You might have in your studio, I imagine you probably have about 12 channels of mixing on your desk there, maybe slightly more.

Chris - So when people say "mixers", what you are referring to is a desk with all these sliders on it that you can move up and down, and they change how loud any individual source is. Presumably, they're basically varying their resistance, so the current has to flow through a bigger or smaller resistance to make it louder or softer?

John - That's exactly right, yes. If the fader is fully closed, it presents a short circuit to the input of the amplifying stage. If it's fully open then all the signal that's coming in from the other side of the fader gets transferred across to the output stage. So, you have a mixer and that mixer might have some clever things like pre-fade channels on it so that as a broadcaster, you would want to hear the signal before you go live. You want to make sure it's working at the right level. So in the case of bringing me up on your sound desk at the moment, you would've talked to me off air, checked on pre-fade that you're hearing me and then when you're happy with it, you would open the fader and then I would be routed to the transmission chain, in the way that you are.

Chris - What about the level, John, because one of the things that we're all watching in a studio is how loud things are, how soft things are, and we're tweaking knobs and things to make sure that things don't get too loud or soft. But is there also work going on behind the scenes to make it sound very consistent? Because one of the things people notice when they're tuning into their radio, in their car or at home, is the sound level is always nice and consistent.

John - That's right. In the early days, we didn't have any sort of processing other than a protection limiter on the end of the audio line feeding the transmitter. That was all well and good, it stopped distortion because the transmitter circuits could only cope with a certain amount of level, +8dBu (decibel unloaded) is what we term the maximum level in the business.

But the difficulty is that music, in particular, has quite a dynamic range, so you want to be able to compress that a bit especially for people who are listening in less than ideal conditions. You know, people are listening in their cars or maybe they're listening in the kitchen, and the kettle is boiling at the same time or the vacuum cleaner is on. All these things may be happening which make it difficult to cope with the wide dynamic range.

So, what we do is we process the signal. We compress it and in the early days, it was a simple compressor which might put in about 12dBs of compression. So in other words, if anything above minus 4dBu came in, that was compressed to be the same level.

Chris - In other words, you're narrowing the gap between the loud bits and the soft bits so you can make everything a lot louder because the peak is not going to be super loud, but then the quietest bits are not super quiet either, so it's normal and consistent.
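The simple compressor described above can be sketched as a rule applied to signal levels. The -4 dBu threshold comes from the interview; the 12 dB of make-up gain (chosen so that peaks land on the +8 dBu maximum) and the optional `ratio` parameter are assumptions added for illustration.

```python
THRESHOLD_DBU = -4.0   # level above which compression starts (from the interview)
MAKEUP_GAIN_DB = 12.0  # assumed make-up gain, so that peaks reach +8 dBu


def compress(level_dbu, ratio=float("inf")):
    """Return the output level in dBu for a given input level in dBu.

    With an infinite ratio, everything above the threshold is held at the
    threshold. A finite ratio (hypothetical parameter) lets loud passages
    rise a little above it.
    """
    if level_dbu > THRESHOLD_DBU:
        excess = level_dbu - THRESHOLD_DBU
        level_dbu = THRESHOLD_DBU + excess / ratio
    return level_dbu + MAKEUP_GAIN_DB


for level in (-30, -10, -4, 0, 8):
    print(f"{level:>4} dBu in -> {compress(level):>5.1f} dBu out")
```

In this sketch an input spanning -30 to +8 dBu (38 dB) comes out spanning -18 to +8 dBu (26 dB): the quiet passages are lifted while the peaks stay at the maximum level.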

John - Indeed. Now, if you were to listen to perhaps Radio 3 on FM, they still broadcast a very wide dynamic range whereas if you listen to Radio 1, the other extreme, it has hardly any dynamic range at all.

Chris - It sounds very loud, doesn't it, when you hear the relative difference between the radio stations.

John - Yes. Originally, we just had compressors and compressor limiters in the chain, but now, we have audio processors. What they do is chop up the audio spectrum from 30 hertz to 15 kilohertz into different frequency bands, and they individually compress all these. So that means you can make the sound even louder. Some people may remember the Phil Spector sound from the '60s, the "Wall of Sound", it was called. That was that principle, where every part of the audio spectrum was squeezed to the absolute maximum, so you had this - as they called it, Wall of Sound - very, very loud, very consistent, ideal for listening in less than ideal conditions.

Chris - A lot of people say they're very fatiguing to listen to. Just very briefly, so once we actually get to the transmitter, most people listening to these programmes are probably going to be listening on FM. What just briefly is FM?

John - Two sorts of modulation systems that we've had for a great number of years, AM and FM. AM stands for amplitude modulation, FM stands for frequency modulation. So, in the case of AM, amplitude modulation, the intensity of the transmitter signal is varied according to the amplitude of the audio. But in FM, it's the frequency of the transmission that's varied according to the amplitude of the audio. So in the FM system, Radio Cambridgeshire operates on 96 megahertz. The loudest passages would cause it to swing back and forward between 96.1 and a bit and 95.8 and a bit.
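The difference between the two schemes can be illustrated with a short sketch. All the numbers here are assumptions chosen so the example runs quickly: the carrier is scaled down to 1 kHz (real FM carriers sit near 100 MHz), the audio is a single test tone, and the frequency swing is arbitrary.

```python
import math

CARRIER_HZ = 1000.0    # assumed scaled-down carrier frequency
AUDIO_HZ = 50.0        # assumed single test tone standing in for the audio
DEVIATION_HZ = 200.0   # assumed peak frequency swing for the FM example
SAMPLE_RATE = 20000    # samples per second


def audio(t):
    """Audio signal in the range -1..1."""
    return math.sin(2 * math.pi * AUDIO_HZ * t)


def am_sample(t, depth=0.5):
    """AM: the carrier's amplitude follows the audio."""
    return (1 + depth * audio(t)) * math.cos(2 * math.pi * CARRIER_HZ * t)


def fm_instantaneous_frequency(t):
    """FM: the carrier's frequency follows the audio."""
    return CARRIER_HZ + DEVIATION_HZ * audio(t)


def fm_samples(times):
    """Generate FM samples by accumulating phase from the instantaneous frequency."""
    phase = 0.0
    out = []
    for t in times:
        out.append(math.cos(phase))
        phase += 2 * math.pi * fm_instantaneous_frequency(t) / SAMPLE_RATE
    return out


times = [n / SAMPLE_RATE for n in range(SAMPLE_RATE // 10)]  # 0.1 seconds
am = [am_sample(t) for t in times]
fm = fm_samples(times)
freqs = [fm_instantaneous_frequency(t) for t in times]

print(f"AM peak amplitude: {max(abs(x) for x in am):.2f}")
print(f"FM peak amplitude: {max(abs(x) for x in fm):.2f}")
print(f"FM frequency swings between {min(freqs):.0f} Hz and {max(freqs):.0f} Hz")
```

In the AM case the size of the wave grows and shrinks with the audio; in the FM case the size stays constant and the frequency moves either side of the carrier.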

Chris - So actually, although you're tuned to 96, it's actually a little bit either side of the 96 which is wiggling in the spectrum, and that's what your radio is picking up on.

John - Indeed, and that requires a clever decoder in the sets that will allow you to do that. Unlike the days of AM where you could just have something simple like a crystal set which could decode the AM signal. With FM, you need a more elaborate detector.

Chris - And given that FM is stereo as well, does that mean you're actually broadcasting two sets of information, one corresponding to the right speaker, one corresponding to the left, and they're both wiggling around 96 megahertz? How are you doing that?

John - Well, that is true, but it's a bit more complicated than that because if you go back to the history of FM or VHF as we used to call it, back in 1955 when it was opened, we just had a mono signal. So, all we were presenting to the transmitter was an audio signal from 30 hertz to 15 kilohertz and that was mono. Then someone developed the stereo system, but of course, you needed compatibility between the stereo system and the old mono system. So, to transmit left and right would be a problem because how would an old radio which didn't have a stereo decoder in it know what this signal was? So what we did is we developed a pilot tone system and continued to broadcast the mono signal between 0 and 15 kilohertz. But then we took the stereo information, the difference signal if you like, and added that to the FM signal, but stuck it on a subcarrier of 38 kilohertz which meant that if you had an old mono receiver, it didn't pick up that signal. It was quite happy. But if you had a stereo decoder, it would decode the 38 kilohertz subcarrier frequency information along with the mono signal, combine them together and bring it back to left and right.
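The compatibility trick can be sketched with plain sample values. This is a simplified illustration only: it leaves out the step of placing the difference signal on the 38 kilohertz subcarrier, and the halving used to form the two signals is an assumed scaling.

```python
def encode(left, right):
    """Turn left/right samples into a mono (sum) signal and a difference signal."""
    mono = [(l + r) / 2 for l, r in zip(left, right)]
    diff = [(l - r) / 2 for l, r in zip(left, right)]
    return mono, diff


def decode_stereo(mono, diff):
    """A stereo receiver recombines both signals to recover left and right."""
    left = [m + d for m, d in zip(mono, diff)]
    right = [m - d for m, d in zip(mono, diff)]
    return left, right


# Made-up sample values for illustration
left = [0.5, -0.25, 0.75, 0.0]
right = [0.25, 0.5, -0.5, 1.0]

mono, diff = encode(left, right)
print("Mono receiver hears:", mono)  # it simply ignores the difference signal
print("Stereo receiver hears:", decode_stereo(mono, diff))
```

An old mono set uses only the first signal and still gets a sensible blend of both channels, while a stereo decoder adds and subtracts the two to get the original left and right back.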

10:56 - Making a Microphone

Making a Microphone

with Dave Ansell

Probably the most important part of any radio broadcast or recording, is the ability to capture a voice. So we know that when we speak, vibrations in the voice box create a pressure wave in the air, this travels through to cause vibrations in your ear, and they are then converted into electrical signals, and fed to the brain where they're decoded.

But, all the kit that we use for radio is electric, so we do need a device that converts that pressure wave into an electric current and this is what a microphone does. To find out how microphones do that, we asked our own Dave Ansell if he could build one from scratch, and to do that, he looked back into the history books...

Dave - The first kind of microphone which was practically used was developed by Edison in the 1880s to work with the telephone, and that was based on having a little pot full of carbon granules. You apply a voltage across those carbon granules, and you get a current out. Now if you shake that using the sound wave which is coming from your voice, that will change the resistance of those carbon granules, so that will change the current going through it and you have an electrical signal. But the problem is, the resulting electrical signal is horrible and it gives a very, very bad rendition of the sound it was producing. But it was very cheap and you didn't need to amplify it, so it was very useful in the early days.

Ben - How did people take those ideas and build on them?

Dave - The next big improvement in microphones was done by a guy called Wente based in Bell Labs in 1916 and I'm going to build a model of that now. This is based on the principle of capacitance. I've got a piece of tin foil and if you apply a voltage to that tin foil, some current will flow into it, a tiny, tiny amount, a little tiny bit, and it might become negatively charged. Now, if you apply the opposite voltage to the second piece of tin foil then that will become positively charged and for a certain voltage, a certain amount of charge will flow from one plate to the other, so we say the system has a certain capacitance.

Ben - But if you allow those two bits of tin foil to touch then presumably, the current will just flow from one to the other and instead of having a way of storing energy, a capacitor, what you've got is just a very poor circuit.

Dave - That's right, so we don't want to do that. So, I'm going to separate these two pieces of tin foil with a piece of cling film which is a really good insulator.

Ben - So what we now have is a very basic model of the standard electrical component which is a capacitor, and that is capable of storing a small amount of charge, but that still doesn't look to me like it can collect sound from the environment and turn it into an electrical signal.

Dave - The way it does this is a property of capacitors. This is because if you've got the two plates, a positive plate and negative plate very close to each other, they stabilize each other. The positive plate attracts the electrons in the negative plate and so, the voltage you need to push electrons in gets lower, so you can get more charge on for the same voltage, and we say it has a higher capacitance. The closer those two plates are together, the higher the capacitance is. So, if the plates move backwards and forwards, the capacitance is going to change up and down.

Ben - So, in order to turn this into a microphone, we need to find a way of changing the distance between the two. Is that as simple as shouting at it? Essentially, we put a vibration in it from our voice that changes the distance between the two plates, and that gives us a model of that sound wave in the changing electrical current?

Dave - That's what I intend to do, yes. Shouting is going to be involved. As the sound waves hit it, they're going to move the plates together and apart. The capacitance is going to change, so the amount of charge on those plates is going to change, and you get a current flowing in and out, which we should be able to detect as an electrical signal.
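The relationship Dave describes can be put into rough numbers with the standard parallel-plate formulas, C = ε₀εᵣA/d and Q = CV. Everything below the two functions is an assumption for illustration: the foil size, the bias voltage and the gaps are made up, and the effect of the cling film on the permittivity is ignored.

```python
EPSILON_0 = 8.854e-12  # permittivity of free space, farads per metre


def capacitance(area_m2, gap_m, relative_permittivity=1.0):
    """Parallel-plate capacitance: C = epsilon_0 * epsilon_r * A / d."""
    return EPSILON_0 * relative_permittivity * area_m2 / gap_m


def charge(capacitance_f, volts):
    """Charge stored on the plates: Q = C * V."""
    return capacitance_f * volts


AREA = 0.1 * 0.1       # assumed 10 cm x 10 cm sheets of foil
VOLTS = 9.0            # assumed bias voltage across the plates
REST_GAP = 20e-6       # assumed plate separation at rest, in metres
SQUEEZED_GAP = 19e-6   # assumed separation when a sound wave pushes the plates together

q_rest = charge(capacitance(AREA, REST_GAP), VOLTS)
q_squeezed = charge(capacitance(AREA, SQUEEZED_GAP), VOLTS)

print(f"Capacitance at rest: {capacitance(AREA, REST_GAP) * 1e9:.2f} nF")
print(f"Extra charge that flows in when squeezed: {(q_squeezed - q_rest) * 1e9:.2f} nC")
```

The extra charge flowing on and off the plates as the gap changes is the current that the recorder picks up as the audio signal.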

Ben - So, we're wiring up our 2 pieces of tin foil and a piece of cling film now. I would be amazed if it does work. Dave, over to you, what's next?

Dave - Well I've now got this connected to our recorder so we should be able to listen to it later. I'll give it a go. I might speak quite loudly because my experience is this is not the most sensitive microphone in the world, but let's give it a go.

Ben - Okay, hold on. I had better stand back if Dave is going to be shouting. Dave's voice is loud at the best of times. Off you go then, Dave.

Dave - So I'm now shouting very loudly at this capacitor based microphone!

Ben - Let's have a listen and see what it sounded like.

Ben - Well, that is noisy and your voice was quite quiet despite you shouting very, very loudly, but it worked. So clearly, this demonstrates the principle, but why was it so noisy?

Dave - One of the problems is that my microphone is also actually quite a good aerial and it will pick up any electrical interference going on in the room. So, to improve this we'd probably want to put it in a metal tub and earth the outside of that metal tub to give it a bit of protection against the electric fields moving about. If you build this really well, it produces a decent microphone. Though in 1931, the same guy, Mr. Wente, (he seems to have invented most microphones in the world), developed a slightly different technology for microphones called the dynamic microphone, and we can actually have a go at building one over here.

Ben - So, a dynamic microphone, if I remember rightly is the type that you'll see most singers using on stage, but how does it differ?

Dave - So, this is going to do the same thing as the capacitor based microphone. It's going to take a vibration from the air and turn it into an electrical signal. But it's doing that conversion differently. This works on the principle called electromagnetic induction and you may have done this at school. All you have to do is take a coil of wire which I've got here and if you take a strong magnet and wave it near the coil of wire, you'd generate a voltage.
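Electromagnetic induction follows Faraday's law: the voltage across the coil equals the number of turns multiplied by the rate at which the magnetic flux through it changes, with a minus sign. A minimal numerical sketch, in which the coil size, flux and vibration frequency are all assumed values:

```python
import math

TURNS = 200            # assumed number of turns in the coil
PEAK_FLUX_WB = 1e-6    # assumed peak magnetic flux through one turn, in webers
VIBRATION_HZ = 100.0   # assumed frequency at which the magnet moves


def flux(t):
    """Flux through one turn of the coil as the magnet moves back and forth."""
    return PEAK_FLUX_WB * math.sin(2 * math.pi * VIBRATION_HZ * t)


def induced_voltage(t, dt=1e-7):
    """Faraday's law: voltage = -N * d(flux)/dt, estimated numerically."""
    return -TURNS * (flux(t + dt) - flux(t - dt)) / (2 * dt)


# Analytical peak of the induced voltage for a sinusoidal flux
peak = TURNS * PEAK_FLUX_WB * 2 * math.pi * VIBRATION_HZ

print(f"Voltage when the flux changes fastest (t = 0): {induced_voltage(0.0) * 1000:.1f} mV")
print(f"Voltage when the flux is momentarily steady (t = 2.5 ms): {induced_voltage(0.0025) * 1000:.1f} mV")
print(f"Peak magnitude from the formula: {peak * 1000:.1f} mV")
```

The voltage is largest when the magnet is moving fastest and drops to zero at the instants it stops to change direction, so a steady magnet next to a coil produces nothing at all.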

Ben - How do you capture the sound wave and make it wave a magnet next to a coil?

Dave - Essentially, you've got to turn the movement of air into a movement of a physical object and a good way of doing that is essentially make a drum skin because that can be easily moved by the air, so I've got a drum skin here. And if you just feel that with your finger and I talk into it, you can feel the vibration.

Ben - Yes, so I can feel the vibration in my finger. What we've basically got here is a plastic tub with a bit of balloon rubber stretched across the top. So that's a good way to collect that vibration and then how do we put that into a magnet that goes into the coil?

Dave - We've got to somehow attach either the magnet or the coil to this and keep the other one of them still. I've connected the magnet to it. All you need to do now is get a coil and wire it up to your recording device.

Ben - So again, I'm going to step back a bit I think and Dave, if you could shout at your dynamic microphone...

Dave - So, this should hopefully be recording something marginally useful and it's basically just shouting at a magnet and a drum skin!

Ben - And now, let's have a listen back.

Ben - So again, very noisy, but it's clearly you and I can pretty much understand what you're saying there. Now, microphones come in all different shapes and sizes, and from the tiny little lapel ones, through to the big fluffy ones that they use for recording people on film sets, are they all based on the same technology?

Dave - Most microphones are now based on a development of the capacitor microphone, or condenser microphone as they're called, and there are some more exotic types of microphone. You get strange kinds of piezoelectric microphones based on the same principles as a gas lighter, the clicky gas lighters. And basically, if you can think of a way of converting a vibration into electrical signals, someone's probably trying to make a microphone using it.

18:54 - Building a Biological Pacemaker

Building a Biological Pacemaker

Normal heart cells can be converted into specialised pacemaker cells using gene therapy, and this could pave the way to building a biological pacemaker, according to research published in the journal Nature Biotechnology.

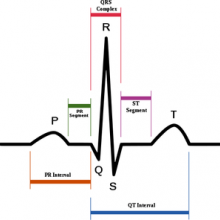

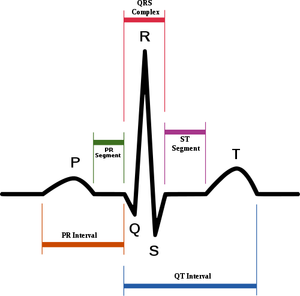

A heartbeat originates from a small region of the heart known as the sinoatrial node, which contains a small number of specialised cells which act as the pacemaker. There are fewer than 10,000 of these cells in a typical adult heart, which may contain around 10 billion other cells. They function by initiating an electrical signal that creates each heartbeat in a smooth coordinated way.

If these cells are injured by disease or through ageing, the heartbeat can become uncoordinated, stopping the heart from being able to pump blood properly. The only available treatment is to fit an expensive electronic device known as an artificial pacemaker. For the last decade, research has been ongoing to find a biological alternative.

Now, Nidhi Kapoor and colleagues at the Cedars-Sinai Heart Institute, in Los Angeles, California, report that they have been able to convert adult rat heart muscle cells, called cardiomyocytes, into pacemaker cells using gene therapy. They examined a number of candidate genes, all known to be expressed during embryonic development of the heart, and settled upon a gene called Tbx18.

When Tbx18 was loaded into an adenovirus vector and expressed in adult cardiomyocytes, they became "faithful replicas" of pacemaker cells, exhibiting the correct epigenetic markers and functioning in the right way.

Further tests confirmed that the cells operated the same clock mechanism, responded to external stimuli such as hormones in the same way as natural pacemaker cells, and even took on the small size and characteristic shape of natural pacemaker cells. This was the case both in the dish and in animal models of heart disease, where the gene therapy was local, effective and long lasting.

Furthermore, they retain this new function even after the gene stops being expressed, so further treatments aren't needed.

The researchers call these new cells iSAN, or induced SinoAtrial Node cells, and suggest that in the future, we could grow a clump of pacemaker cells before transplanting them, or simply inject a gene therapy agent directly into a patient's heart, doing away with the need for artificial pacemakers.

21:53 - 7000 Year Old Cheese

7000 Year Old Cheese

with Professor Richard Evershed, Bristol University

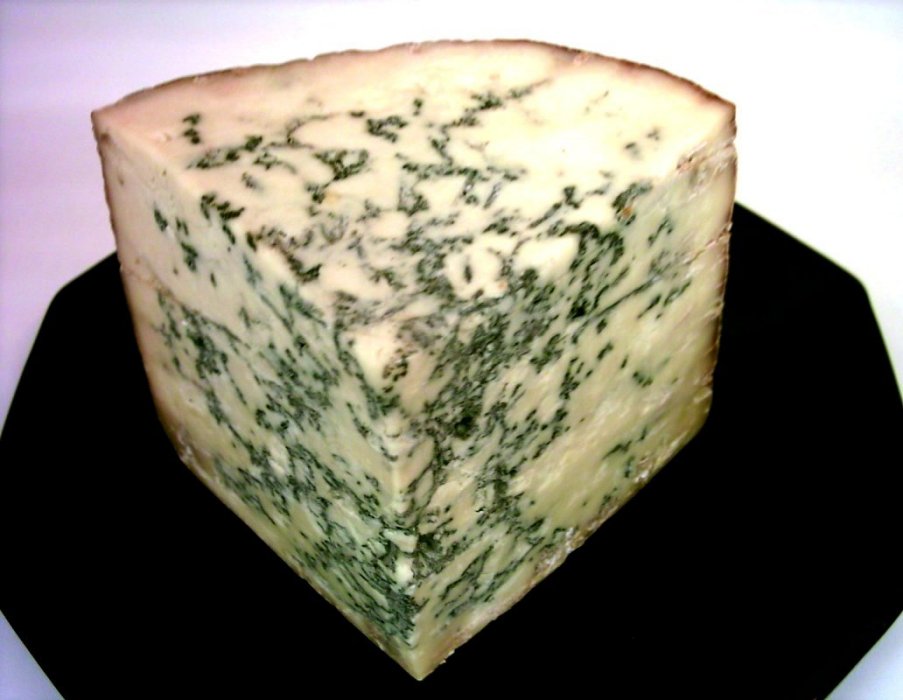

This week, scientists have uncovered residues of 7,000-year-old cheese. Chemist Professor Richard Evershed from the University of Bristol is one of the authors of a paper in the journal Nature this week which describes how he and his team have made this rather milky discovery.

Chris - So, how did this story begin?

Richard - Well, it began truly about 30 years ago when one of our co-authors, Peter Bogucki, was putting together evidence from sites he'd been excavating with colleagues in Poland where they've been uncovering these perforated pot shapes, ceramic pot shapes and he propounded a theory based upon those pot shapes that they were basically cheese strainers and started to develop the idea that these early farmers, which is the Linearbandkeramik culture, were dairy farmers.

Chris - When you say they were perforated pots, we're talking clay pots with fairly substantial holes drilled in them, rather like a top of a pepper pot?

Richard - Very numerous holes, if you look at the images that are in the article. They're all over the vessels. They look like a sort of small colander.

Chris - Obviously one can say they look like the kind of thing that modern-day people use to strain cheese, but just because they look like it doesn't mean they definitely were being used to strain cheese. Is there anything else they could've been used for?

Richard - That's right and in fact, we've had an interest in identifying dairy products from the archaeological record for a number of years. These vessels were one of the major sources of sort of typological evidence, but I must admit, I've been rather sceptical and I'd often said in my lectures, but surely, this could've been used for straining anything.

Chris - So, how did you approach dealing with this and trying to find out what they really were used for? How did you discover cheese?

Richard - This is where the two studies collide. Our research, going back about 12 years now, has been focusing on looking at the origins of dairy by looking at the pottery vessels, usually cooking vessels. We've developed a methodology, a chemical method which allows us to identify milk fat. It's based upon the fact that animal fats, which are the most common type of organic residue you find in archaeological pottery when you study pottery from Europe, can be identified through their fatty acid signatures. Based upon studies that we've done, studying many modern animals, we've developed a very robust proxy which allows us to separate ruminant carcass fats from dairy fats; pigs and horses can all be separated. So we can quite consistently identify different fats. So if we go into archaeological pottery, recover the residual fats in there, we can identify these fats quite unambiguously as to whether they're dairy fats or carcass fats.

Chris - These pieces of pottery have been in the ground in this site in Poland, in the centre of Poland for 7,000 plus years.

Richard - It's remarkable, isn't it? Yes.

Chris - And you can get fats out of them, that were from the cheese or the dairy products these people were working on?

Richard - Absolutely. It never ceases to amaze me. It's due to the fact that these fats are preserved deep within the fabric of the pottery and I imagine that they go into sort of molecular size pores in the aluminosilicate matrix where they're protected from say, bacteria getting in there to degrade them. And in the right sort of soil types, they're protected from leaching from under the pottery by ground water.

Chris - So, you've got specimens of the pots, you took small samples which enables you to isolate these fats. What do those fats tell us then? They say that there was dairy material in these pots?

Richard - That's right and just to clarify, we're not only focusing on these cheese strainer vessels. We've looked at a large number of other vessel types from the site and quite specifically, what it shows is that these perforated vessels, these putative cheese strainers, are the ones that contain dairy fats. And so, if you then start to put this together as a forensic exercise, we've got dairy fats associated with perforated vessels and you start to ask yourself the question: What other milk processing activity could you reasonably have been doing which required you to strain some sort of milk product? Well, it's cheese making. There is no other milk processing activity that uses a straining activity.

Chris - Why do you think these people wanted to make cheese?

Richard - Well, there's two motivations. One, you would be producing - if you had a substantial dairy herd as the animal bone assemblages from these sites seem to suggest - you would have surplus of milk at certain times of year, in the summer time for instance. So, the production of cheese would've been a way of producing a non-perishable product that you could then preserve throughout the year so you could just take full advantage of the nutritional properties of milk in the winter time. But there is another critical factor and this is where Peter Bogucki comes back into the story with his paper from the 1980s. It's related to this lactose intolerance phenomenon. It's very likely, almost certain that these early farmers were lactose intolerant, so they would, potentially, have got quite poorly, had they been drinking substantial amounts of whole milk. So, it looks like this straining activity was perhaps the recognition of this and they had found a way of getting around the lactose intolerance problem. What you're doing when you separate the curds from the whey, the whey contains the lactose. So you're actually producing a lactose reduced product when you produce cheese and thus, lactose intolerant farmers would've been able to exploit the nutritional qualities of milk.

27:15 - Solution to box jellyfish venom

Solution to box jellyfish venom

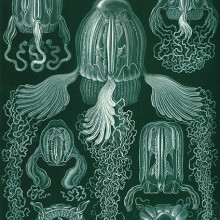

Scientists have discovered how to block the action of the venom of the lethal box jellyfish family.

Using a new extraction technique to isolate the stinging cells, or nematocysts, from the tentacles of two species of the box family, including the north Queensland resident Chironex fleckeri, University of Hawaii researchers Angel Yanagihara and Ralph Shohet were able to isolate the venom components at unprecedented concentrations and purity. This has enabled them to examine in detail how this potent cocktail of chemicals, to which at least 100 people fall victim worldwide each year, exerts its lethal effect.

Using samples of freshly-donated human blood, the researchers confirmed that the venom components attack red blood cells by assembling tiny pore structures that punch holes through the cell membranes. This causes the cells to swell and then burst open, releasing into the bloodstream large amounts of potassium, which is normally kept locked away inside cells. This, Yanagihara and Shohet speculated, could disrupt the electrical activity of the heart.

To test this, they administered varying doses of their purified venom samples to mice, which rapidly developed escalating potassium levels, changes to the electrocardiogram consistent with potassium toxicity and cardiac arrest. This is almost certainly the cause of death in the majority of human victims that receive lethal envenomations, which is not difficult given that the tentacles can be metres long and are each loaded with half a million venom-laden nematocysts.

Next, the researchers tested a range of different treatments to attempt to mitigate the effects of the venom, including using doses of the antivenom traditionally prescribed in emergencies for box jellyfish stings, and a range of metal salts, including zinc gluconate.

Zinc, they found, was extremely effective - in fact more so than the antivenom - at deactivating the venom toxins and preventing the dangerous rise in potassium. The duo conclude their paper, published this week in PLoS One, by suggesting that zinc treatments should be tested on larger animals regarded as good models for humans, like pigs, and that topical application of zinc to sting sites might also help to reduce the subsequent venom load by disabling undischarged stinging cells. The result, they suggest, might be many lives saved...

31:32 - Porcupine Quills Inspire Better Needles

Porcupine Quills Inspire Better Needles

Porcupine quills penetrate skin better than a hypodermic needle because of tiny backwards-facing barbs at their tips, which also render the quills very difficult to pull back out. This trick could now inspire better medical equipment such as needles and tissue adhesives.

North American porcupines carry around 30,000 spines, which are known to be an effective defence mechanism - they penetrate tissues easily and are often difficult to remove. Unlike those found on similar animals such as the African porcupine or our own hedgehog, North American porcupine quills have a series of microscopic barbs on their tips. These barbs intrigued researchers, including Dr Jeffrey Karp from the Brigham and Women's Hospital in Boston, who then undertook a series of experiments to see what effect these barbs had.

Publishing their results in the journal PNAS, the researchers describe a number of experiments, measuring the force required to penetrate a sample of pig skin, and then to pull the quill back out. They repeated their tests with quills where the barbs had been sanded smooth, and with other items of a similar diameter, including a hypodermic needle.

Unsurprisingly, having backwards-facing barbs means more effort is needed to remove a porcupine quill than a smooth quill - almost four times as much force was required as with a barbless quill.

The surprising result, and for the researchers the most interesting, was that the presence of barbs reduced the force needed to penetrate into the muscle. While an 18 gauge hypodermic needle takes around 0.59 Newtons of force to pierce into pig flesh (bought from a local butcher), the barbed quill required only 0.33N to achieve the same task. Samples taken from the skin and observed under a microscope also showed a much smoother puncture site, suggesting that the tissue had absorbed less energy from the quill, and therefore taken less damage.
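To put the two quoted forces side by side, here is a minimal sketch of the arithmetic. The function name is illustrative and the only inputs are the two figures given in the text.

```python
# Forces quoted in the text, in newtons.
NEEDLE_FORCE_N = 0.59  # 18 gauge hypodermic needle
QUILL_FORCE_N = 0.33   # barbed porcupine quill


def percent_reduction(baseline: float, value: float) -> float:
    """Percentage by which `value` is lower than `baseline`."""
    return (baseline - value) / baseline * 100.0


reduction = percent_reduction(NEEDLE_FORCE_N, QUILL_FORCE_N)
print(f"The barbed quill needs about {reduction:.0f}% less force than the needle")
```

On these figures the barbed quill needs a little under half as much force again less than the needle, which is the gap the researchers found surprising.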

Computer modelling suggests that the barbs concentrate force onto a smaller area, much like the jagged edge of a serrated knife. This reduces the need for the tissue to deform around the entire circumference of the quill, resulting in easier penetration and less damage.

The authors argue that mimicking the barbed structure could allow creation of microneedles that penetrate easily but resist buckling, and enhance a range of other biomedical applications.

39:58 - Carbon Storage in Peat - Planet Earth Online

Carbon Storage in Peat - Planet Earth Online

with Heiko Balzter and Ross Morrison, University of Leicester

The flat farmland area in the east of England, known as the fens, isn't everyone's cup of tea, but the rich peaty soil is of particular interest to climate scientists.

Now a team from the University of Leicester have developed an experiment in the centre of a field to measure how much carbon is absorbed and released by this peaty soil.

Planet Earth's Richard Hollingham went to see the experiment, where he spoke to Ross Morrison and, first, the head of the project, Heiko Balzter...

Heiko - We are trying to get to the bottom of the carbon emissions that are coming off these soils under different conditions, both different land use conditions and extreme weather as well. We had very extreme dry conditions last year, lasting until about Easter, and this year, of course, it has been one of the wettest summers in the last 100 years in Britain, so we are trying to find out what that does to the carbon exchange between the land surface and the atmosphere. The carbon emissions coming off the peatlands here, of course, contribute to the global warming problem, so there is a feedback between the land and the climate system, and we are trying to get numbers for the emissions that are coming off the ground.

Richard - Now, Ross, you're responsible for the experiment here which is cordoned off behind a barbed wire fence. It's a series of solar panels, I'm guessing for electricity. There is a substantial, almost like a fridge-sized green box and then a very peculiar - I don't know how best to describe this! It's obviously some sort of weather instrument but it looks like a weather instrument crossed with a whisk, what is it?

Ross - This is a weather station but quite an advanced weather station. It's got all the elements you would expect from a normal weather station, for example, we're measuring temperature, relative humidity and radiation and that sort of thing, but then the bit that looks a bit like a whisk is the more advanced part of this. What we have here is a device, an anemometer that basically measures atmospheric turbulence and then we have the other device which basically measures the concentration of CO2 and water vapour in the atmosphere.

Richard - And you're measuring the carbon dioxide, what, coming off the peat and going into the peat? The exchange of gases?

Ross - At certain times of year plants take up carbon dioxide from the atmosphere, and throughout the year soils basically lose carbon dioxide to the atmosphere. So essentially, using this instrument, we can calculate how much is moving. It samples at quite a high frequency, and every half an hour we get a measurement from it. Then, obviously, over longer periods of time we can build that up to get an idea of how much carbon we're gaining at certain times of the year and losing at other times of the year, and then the balance between the two over time.
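Ross does not name the calculation, but pairing a turbulence-measuring anemometer with a fast CO2 sensor is characteristic of the eddy covariance method, so the sketch below assumes that is what is being done. Under that assumption, the flux over one averaging window is the mean product of the fluctuations in vertical wind speed and in CO2 concentration. The function name, units and sample values are illustrative, not taken from the Leicester project.

```python
from statistics import fmean


def eddy_flux(vertical_wind, co2_density):
    """Mean of w' * c' over one averaging window (e.g. half an hour).

    vertical_wind: vertical wind speeds in m/s, one per sample
    co2_density:   CO2 densities in mg/m^3, sampled at the same instants
    Returns a flux in mg m^-2 s^-1. Positive means a net loss to the
    atmosphere, negative means net uptake by the surface.
    """
    if len(vertical_wind) != len(co2_density) or not vertical_wind:
        raise ValueError("need two equally long, non-empty series")
    w_mean = fmean(vertical_wind)
    c_mean = fmean(co2_density)
    # Fluctuation = sample minus the window mean; average their product.
    return fmean((w - w_mean) * (c - c_mean)
                 for w, c in zip(vertical_wind, co2_density))


# Toy data: updrafts carry CO2-rich air, downdrafts carry CO2-poor air.
wind = [0.2, -0.2, 0.2, -0.2]
co2 = [701.0, 699.0, 701.0, 699.0]
print(eddy_flux(wind, co2))
```

With the toy data the result is positive, matching the bare-soil case Ross describes, where the field loses carbon to the atmosphere. Reversing the CO2 series would give a negative value, the growing-crop case.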

Richard - So when there's a crop in the field - so spring/summer then you'd expect the carbon dioxide to be coming into the peats and this time of year you would expect the carbon dioxide to be leaving?

Ross - Yes, at the moment there's a vegetation cover but this is just coming back naturally-

Richard - Nettles and weeds and bits and pieces?

Ross - Yes. When the crop is actually growing it does accumulate quite a lot of carbon, at least the crop we measured this year, which was iceberg lettuce. This did, for some part of the growing season, actually result in a net removal of carbon dioxide from the atmosphere as the crop was maturing. Then at other times of the year, following ploughing and this sort of stuff, at this time of year when essentially most of the soil is bare, we would be expecting to see a net loss of carbon to the atmosphere.

Richard - Heiko, this isn't the only set up you've got, you're looking at other sites as well?

Heiko - Yes, that's right. We have three towers in total in the fens now. One is operated by the Centre for Ecology and Hydrology and two are operated by the University of Leicester. They are covering a land use gradient with three different types of land use. One is in the semi-natural fen at Wicken Fen, which is one of the few remaining areas of pristine peatland in the fens. The second one is in a restored area, Baker's Fen near Wicken, which is now managed by the National Trust for nature conservation purposes. They are trying to re-wet soil there that was previously used for agriculture. And this third one, which was set up this year, is the first one that is on agricultural soil, on farmland.

Richard - And how will the information from this be used? What do you want to do with it?

Heiko - Well, it has huge implications for knowing exactly the amount of greenhouse gases that are emitted from the land surface areas. First of all, because we need to understand the earth system fully, we need to know how important the greenhouse gas emissions are from this area and to assess how important it is to protect the carbon that is locked in the soils here. Secondly, there is of course an implication for the farm managers, in the sense that we are trying to identify the best way of actually helping them to reduce and control their carbon emissions. That is in their own interest, and it is one of the reasons why this farm manager here has made the land available to us to install the instrumentation.

45:08 - Advantages of Digital Radio

Advantages of Digital Radio

with John Adamson, BBC

Ben - If you're not currently listening online, then you could be using one of three different ways to receive your radio. We've already heard about AM and FM, but now, there's also DAB - that's Digital Audio Broadcasting. The BBC first started broadcasting digital radio back in 1995 and now, most of their output can be found on a DAB radio. But, if FM was working, then why go digital? Still with us is John Adamson, BBC Production Editor for London and the Southeast, and as we said earlier, 'the Digital Doctor'. So John, why did we want to go digital?

John - I suppose it was a combination of quality and quantity. So, it enhances listener choice and offers better quality, particularly in marginal signal conditions.

If we go back to AM just very briefly - medium wave and long wave - there's another radio station every 9 kilohertz. So, the audio bandwidth is only 4.5 kilohertz, barely better than telephone quality, not very good at all. Also, there's a finite number of frequencies that cannot be re-used very often because the transmissions spill over a very wide area, so they can't be very well targeted. In the case of long wave, there are only 15 channels for the whole of Europe, so you can imagine it's quite difficult to manage that part of the spectrum effectively. Medium wave has rather more, but it's still four and a half kilohertz audio bandwidth to avoid interference to adjacent stations. Aerials are rather large. Not a lot of choice there really. Also, medium wave has a problem of after dark interference: strange noises, continental interference, whistles, whines, and buzzes in the background. FM made things a lot better of course, because we got stereo, as we said earlier, and more immunity to electrical interference. Also, because it was on the VHF band, around about 3 metres wavelength, aerials were a sensible size, so you could target your audience quite effectively. So, in the case of BBC Radio Cambridgeshire, they've got a transmitter at Madingley that's targeted just for the area around there.
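Two of the figures John quotes follow from simple arithmetic, sketched below. An AM signal carries the audio in two mirror-image sidebands, so the audio bandwidth is half the channel spacing, and wavelength is the speed of light divided by frequency. The 100 MHz carrier is an assumed representative value for the VHF FM band, not a figure from the interview.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def am_audio_bandwidth_khz(channel_spacing_khz: float) -> float:
    """Audio bandwidth available when AM stations sit this far apart.

    AM transmits an upper and a lower sideband, each as wide as the audio,
    so the audio can occupy at most half the channel spacing.
    """
    return channel_spacing_khz / 2


def wavelength_m(frequency_hz: float) -> float:
    """Free-space wavelength for a given carrier frequency."""
    return SPEED_OF_LIGHT_M_PER_S / frequency_hz


print(am_audio_bandwidth_khz(9))          # 9 kHz spacing on medium and long wave
print(round(wavelength_m(100e6), 2))      # assumed 100 MHz FM carrier
```

The same wavelength function shows why AM aerials are large: a medium wave carrier around 1 MHz has a wavelength of roughly 300 metres, against about 3 metres at VHF.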

Why do we go to DAB? Well, FM still had a problem in marginal areas where there's something called multipath interference. I don't know if you've noticed this as you're driving along or using a portable radio, but sometimes you get all sorts of hisses and burbles, and squeaks and things. That's multipath interference and that's a function of analogue radio - if you get a wanted signal and a reflected signal, which inherently must be delayed with respect to the original, they will interfere.
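The effect of a delayed reflection can be illustrated with a deliberately simplified model: a single unmodulated carrier plus one reflected copy that has travelled further. This is only a sketch of the underlying interference. Real FM multipath distortion is more involved, and the 100 MHz carrier and the function name are assumptions for illustration.

```python
import cmath
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458


def combined_amplitude(frequency_hz, extra_path_m, reflection_gain=1.0):
    """Relative amplitude of a direct signal plus one delayed reflection.

    The direct signal has amplitude 1. The reflection travels `extra_path_m`
    further, so it arrives later and therefore with a phase shift.
    """
    delay_s = extra_path_m / SPEED_OF_LIGHT_M_PER_S
    phase = -2 * math.pi * frequency_hz * delay_s
    return abs(1 + reflection_gain * cmath.exp(1j * phase))


FREQ_HZ = 100e6  # assumed FM carrier frequency
wavelength = SPEED_OF_LIGHT_M_PER_S / FREQ_HZ

in_step = combined_amplitude(FREQ_HZ, wavelength)          # one full wavelength extra
out_of_step = combined_amplitude(FREQ_HZ, wavelength / 2)  # half a wavelength extra
print(in_step, out_of_step)
```

A reflection that arrives a whole wavelength late reinforces the wanted signal, while one that arrives half a wavelength late almost cancels it. At VHF that difference is about a metre and a half of path, which is why reception can change so quickly in a moving car.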

If you think of analogue television, which was shut down just last year, you may remember seeing ghosting on the picture. That is the visual equivalent of the interference you would get on audio.

So, the reflected signal always causes a problem to the main. DAB offered something rather new and that was, that because of the modulation system used and the coding systems used, you could have a number of transmitters, all working on the same frequency.

So, for example, the BBC national digital multiplex operates on one frequency right around the country. All the transmitters work together and although some of their signals will be delayed with respect to others, as long as the delay falls within a certain timing window, they will add, and the listener won't get any interference.
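The "window of timing" John describes can be sketched as a simple check on arrival times. The window length used here, about 246 microseconds, is the guard interval usually quoted for DAB transmission mode I. It is an assumption brought in for illustration, as are the transmitter distances and the premise that all transmitters emit at exactly the same instant.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458
GUARD_INTERVAL_S = 246e-6  # assumed timing window, not a figure from the interview


def arrival_delays_s(distances_km):
    """Delay of each transmitter's signal relative to the first to arrive.

    Assumes every transmitter sends the same signal at the same instant.
    """
    times = [d * 1000 / SPEED_OF_LIGHT_M_PER_S for d in distances_km]
    earliest = min(times)
    return [t - earliest for t in times]


def all_within_window(distances_km, window_s=GUARD_INTERVAL_S):
    """True if every signal arrives inside the window after the first one."""
    return all(delay <= window_s for delay in arrival_delays_s(distances_km))


# Hypothetical listener 10, 40 and 60 km from three transmitters.
print(all_within_window([10, 40, 60]))
# Hypothetical listener 10 km from one transmitter and 120 km from another.
print(all_within_window([10, 120]))
```

Radio waves cover roughly 300 metres per microsecond, so a window of this size corresponds to a path difference of around 74 km. In the first case the late signals add to the first, and in the second the distant transmitter would arrive too late to help.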

High quality audio is possible as well, thanks to digital audio encoding - MPEG compression - which allowed us to squeeze more audio into a given bit of spectrum. If you were using linear digital audio, you would need around about 1.5 megabits per second, or roughly 1.5 megahertz worth of space, for one stereo audio channel. That's not very efficient. But MPEG allowed us to reduce that to, say, 256 kilobits per second, and that was alleged to give around about CD quality. So, in the early days, what we were thinking of with DAB was, we'd have more channels, but not many more, but high quality, and a more rugged, reliable transmission system.
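The "around about 1.5 megabits" figure can be checked with a quick calculation. The sketch below assumes CD-style linear audio (44,100 samples per second, 16 bits per sample, two channels), which the interview does not spell out, and compares it with the 256 kilobit rate John mentions. The 128 kilobit rate is included as a lower rate for comparison.

```python
def linear_pcm_bitrate(sample_rate_hz: int, bits_per_sample: int, channels: int) -> int:
    """Bits per second needed for uncompressed (linear) digital audio."""
    return sample_rate_hz * bits_per_sample * channels


# Assumed CD-style parameters: 44.1 kHz, 16 bit, stereo.
cd_bitrate = linear_pcm_bitrate(44_100, 16, 2)
print(f"Linear stereo audio: {cd_bitrate / 1e6:.2f} Mbit/s")

for compressed_kbps in (256, 128):
    ratio = cd_bitrate / (compressed_kbps * 1000)
    print(f"{compressed_kbps} kbit/s is about {ratio:.1f} times smaller")
```

On these assumptions the uncompressed stream is about 1.41 megabits per second, so 256 kilobits per second is a reduction of roughly five and a half times.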

Ben - Let's come back to the audio compression in a minute, because I think we need to point out that that's different from the type of compression we were talking about before. When we were talking about amplitude modulation and frequency modulation, that's essentially building a model of the sound, of that pressure wave, in the modulation. But with digital radio, you're not doing that. It's not a direct model of the sound. It's differently encoded.

John - Yes, it's all digitally sampled with a lot of ones and zeros effectively, and all gets assembled back in the decoder again. So, if you were to listen to DAB radio spectrum on an analogue set, all you'd hear is a lot of random noise.

Ben - And how does the signal change with distance? I have a digital radio at home, and it seems to be, ironically, digital. It's either perfect and sounds beautiful or the signal is dreadful. Whereas with an FM radio, I can tune it in, and it sort of seems to fade out with distance, the quality fades out. Is digital always as good as it's going to be, or not present?

John - The theory is that you either get a perfect signal or you're getting no signal at all, but in reality, there's that grey area where the signal isn't quite strong enough for your set decoder to make sense of. So, what you get is this characteristic sound which has been described as "bubbling mud". The difficulty with that is that it's a really irritating sound. With FM, you just get more and more noise. The signal gets weaker or you might get these burbles that I mentioned to you earlier, and squeak sounds with multipath distortion. But with the digital burbling mud sound, it's really quite difficult to follow the programme. So, it's not perfect, that is true. Because of the modulation and coding system that is used, it's generally better for mobile and portable reception. So if you're driving along in your car, it should be more rugged than an FM equivalent. And of course, you're on the same frequency, so you're not having to retune every few miles as you find a different transmitter.

Why is DAB so delayed?

John - There's no magic answer to this one because there's so much intelligence going on in the audio coding system in order to compress it to 256 or less. In fact, the DAB standard for most stations now is 128 kilobits. There's a lot of sampling of the audio to begin with, to analyse how much can we throw away and still make an acceptable signal. That takes time at the transmission end, but also, it takes time at the decoding end where your set has got to understand what's going on there. So, fundamentally, DAB is going to be behind analogue, in the same way as digital television is behind analogue television, when it existed of course.

53:01 - Why do I get radio fatigue?

Why do I get radio fatigue?

John - It's all to do with the amount of compression and the type of compression that is put into the signal. As I said earlier, audio processors nowadays are very clever devices, multiband devices, so they chop up the audio spectrum into different chunks, and they process them separately.

You can put an amount of basic compression in, and you can add clipping. Clipping in moderation is fine because it adds intelligibility. In fact, old telephone systems used to have lots and lots of clipping on it, which made it nice and easy for people to understand what was being said, even on a really crackly horrible line.

Chris - This is where, if you got a wave, if you're literally just chopping the top off the wave, isn't it, to make it a bit quieter.

John - That's right. Exactly that. Now, that in moderation is fine, but it can be quite tiring after quite a short period of time. Normally, after around about 5 to 10 minutes, some people start to notice the clipping effects in the audio processing that's used.
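The "chopping the top off the wave" that Chris describes is often called hard clipping, and it can be sketched in a few lines. The limit of 0.5 and the eight-sample sine wave are arbitrary illustrative choices, and real broadcast processors are far more sophisticated than this.

```python
import math


def hard_clip(samples, limit):
    """Flatten any part of the wave that goes beyond +limit or -limit."""
    return [max(-limit, min(limit, s)) for s in samples]


# One cycle of a sine wave with a peak of 1.0, sampled at eight points.
wave = [math.sin(2 * math.pi * n / 8) for n in range(8)]
clipped = hard_clip(wave, 0.5)

for original, limited in zip(wave, clipped):
    print(f"{original:+.3f} -> {limited:+.3f}")
```

Every sample that was beyond the limit comes out flattened at exactly plus or minus 0.5, while the samples near zero pass through unchanged. Those flat tops are the distortion that some listeners find tiring.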

Unfortunately, a lot of broadcasters, when they put the audio compression in, do it for an instantaneous reaction to listeners who perhaps are just tuning down their dial, they find their radio station, and they think, "Wow! That's loud! That's very impressive" and are going to hang on in there for a bit.

That's all well and good, but very quickly, this fatiguing effect can take over, and it affects some people more than others. That's the strange thing, you might find one person that doesn't notice the fatiguing effects. But other people find it really, really distracting and disturbing, and want to tune away very quickly.

55:22 - How do touch sensitive switches work?

How do touch sensitive switches work?

Why are touch sensitive switches so sensitive to skin, soap, and potato, but not everything else? We asked Philip Garsed, an Electrical Engineering PhD student at Cambridge University, about the science behind this home experiment.

Philip - This effect is all to do with the fact that our bodies, along with many other things, are able to store a certain amount of electrical charge. If you've ever had a static electric shock, you already have some experience of that. How much electrical charge something can store is known as its capacitance. In a touch lamp, when an object that can store charge, like your hand, comes close to the sensor circuit, it will influence the circuit's behaviour and usually, this will cause a change either in voltage or in the speed of a timer circuit. And if that change is big enough then the lamp will switch on or off. But it isn't just you that can store charge. Loads of other things can and that includes soap, fruit, vegetables and fizzy drinks in bottles. As long as it can store enough charge to fool the lamp into thinking that there's a hand there, then it'll probably work. On the other hand, materials like paper, plastic and wood don't generally store much electrical charge, so they're unlikely to work.
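One way to picture the "speed of a timer circuit" changing is a resistor-capacitor timer, whose time constant is resistance multiplied by capacitance. The sketch below assumes that kind of circuit. The component values, the 20% threshold and the function names are all hypothetical, chosen only to show the principle, and do not describe any particular lamp.

```python
def rc_time_constant_s(resistance_ohm: float, capacitance_f: float) -> float:
    """Time constant of a resistor-capacitor timer: larger means slower."""
    return resistance_ohm * capacitance_f


def is_touched(baseline_c_f, measured_c_f, resistance_ohm=1e6, threshold=0.2):
    """True if the timer has slowed by more than `threshold` (as a fraction).

    baseline_c_f: capacitance of the sensor with nothing near it
    measured_c_f: capacitance with an object near the sensor
    """
    base = rc_time_constant_s(resistance_ohm, baseline_c_f)
    now = rc_time_constant_s(resistance_ohm, measured_c_f)
    return (now - base) / base > threshold


BASELINE_F = 10e-12  # hypothetical 10 picofarad sensor

# Hypothetical hand or potato adding 100 picofarads.
print(is_touched(BASELINE_F, BASELINE_F + 100e-12))
# Hypothetical sheet of paper adding only half a picofarad.
print(is_touched(BASELINE_F, BASELINE_F + 0.5e-12))
```

With these made-up numbers the hand changes the timing by far more than the threshold and the paper does not, which mirrors the difference Philip describes between objects that store enough charge and those that don't.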

The technology used in touch lamps is very similar to that used in touch screens. The sensors do vary a little bit in sensitivity though. So, in some cases, you'll need to hold the bar of soap or piece of veg directly with your hand for it to work. But in other cases, just its presence near the sensor will be enough. Why not have an experiment to see what else you can get to work? I found it really funny that I could scroll through my emails on my phone, using a tangerine from the fruit basket.

Hannah - So, it turns out that anything that can conduct electricity, like the inside of a tangerine, and also block electricity, like the tangerine skin, can act as a capacitor and store charge. Simply putting this capacitor near a touch sensitive gadget like Martin's lamp is enough to switch it on or off.
