Can Science Mavericks Save the World?

This week, we're exploring the end. From robotic AI takeovers to global floods, when it comes to the extinction of our species, is science really set up to predict or prevent such events? Plus, how gut bugs might be key to staying healthy for longer, a holodeck for flies and why Pythagoras was beaten to his own theorem.

In this episode

00:49 - Can gut bugs keep you healthy for longer?

with Daniel Kalman, Emory University

Over the next 40 years, scientists are predicting that the elderly populations in developed countries will increase by 350 fold, placing a significant burden on healthcare. But can we help people to age better and remain more independent into older age? According to Emory University’s Daniel Kalman, the answer is “yes,” with the help of the right bacteria in the intestine. He was studying a type of worm called C. elegans, which either did or did not have gut bacteria producing a class of chemicals called indoles. Worms with the indoles didn’t live any longer, but they did age better, as did flies and even mice, as Chris Smith found…

Daniel - There’s been a long tradition in the worm community of studying ageing. These animals live 21 or so days, so they can be studied pretty easily, more easily than humans. We can feed them bacteria or mutant bacteria, that is, bacteria that lack certain genes, and then we can understand their responses relatively quickly. To measure healthspan we look at how well the worms move; as they get older they move less well. We began to work with bacteria that produced particular molecules and asked, “could these bacteria cause older worms to move better?”

Chris - So your rationale is, if we knock out a certain factor from the bacteria and the worms show accelerated ageing, or they’re more frail, that molecule coming from those bugs might be involved in the ageing process?

Daniel - Correct. Though the molecules that we’ve identified don’t actually affect the lifespan of the animals. They do affect the healthspan: that is, their capacity to move, their capacity to reproduce, their capacity to do a lot of the things that we associate with health versus ageing.

Chris - What are those molecules?

Daniel - The molecules belong to a class called indoles. They’re made by plants, where they govern root growth. They’re also made by lots of different kinds of bacteria, including probiotic bacteria, where they affect the way bacteria talk to one another, and they also affect us.

Chris - So if there are bugs that make these indoles in the intestines, the animals are healthier. If the bugs don’t make these indoles, lifespan doesn’t change, but the animals are less healthy; they don’t age as well. Do you know what the indoles are doing to the worms’ bodies to effect that change?

Daniel - We do. We were able to identify particular receptors for these indoles, and we think this one, called AHR (the aryl hydrocarbon receptor), recognises indoles and regulates gene expression to produce this healthy ageing effect.

Chris - That’s worms. What about more complicated creatures like mice or like humans - do you think the same effect is going to happen there?

Daniel - We tested that in the paper: we looked at these effects in higher organisms, that is, flies and mice, and we saw the same kinds of things. With indoles, worms move better, flies climb higher, and mice are better able to tolerate stressors. And geriatric mice, that is, mice that are very, very old, also move better.

Chris - Did you try the experiment where, rather than getting bacteria to give these animals the indole molecules, you just gave them the indoles without any bacteria? That would prove that it’s the indoles doing this, and not some other factor coming out of the bacteria.

Daniel - Yes. We can also provide the molecules directly to animals, which we did, in worms, flies and mice. And these molecules, by themselves, are sufficient to produce these protective, healthspan-increasing effects.

05:21 - Virtual Reality for flies and fish!

with Andrew Straw, Freiburg University

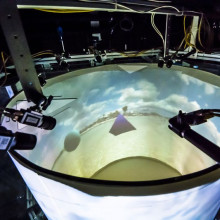

Do you remember the Holodeck that appeared in some episodes of Star Trek - the virtual reality room which could turn into anything you could think of? Well, this week scientists have made one - but before you get too excited to try it, it’s not for humans, it’s for animals! This new virtual reality room lets scientists run experiments they couldn’t before, testing how animals understand their environments. That has been tricky for freely moving animals, because you can’t exactly get a fly to wear a VR headset. Georgia Mills caught up with Andrew Straw, professor of biology at Freiburg University, to find out how they did it…

Andrew - I’ve always been inspired by the holodeck of Star Trek. Basically, what we did is make an arena where animals can go in, and we track them as they move around in 3D, almost instantaneously. Then, based on the position of the animal’s eye, we use computer projectors to draw everything we need on the walls of the arena, so that from the position of that animal it sees whatever kind of virtual world we want. Basically, the animal’s in a computer game.

Then the real trick is that we don’t just do that once: we keep updating the position of the animal and updating the world, so it’s a completely dynamic process. When the animal moves around, what it sees is completely consistent with it really being inside this computer game. So, to the animal, it’s immersed in a 3D world that it can see.
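The geometric heart of this, redrawing the scene for wherever the animal's eye happens to be, can be sketched as a ray-plane intersection. This is a minimal illustration, not the group's software: it assumes a single flat wall at x = wall_x, a tracked eye position in metres and a point-like virtual object, and `Vec3` and `project_to_wall` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    """A 3D position in arena coordinates (metres, hypothetical frame)."""
    x: float
    y: float
    z: float


def project_to_wall(eye: Vec3, point: Vec3, wall_x: float) -> tuple[float, float]:
    """Return the (y, z) spot on the flat wall x = wall_x where a virtual point
    must be drawn so that, seen from `eye`, it lines up with `point`."""
    dx = point.x - eye.x
    if dx == 0:
        raise ValueError("sight line is parallel to the wall")
    # How far along the eye -> point ray the wall lies (1.0 would be the point itself).
    t = (wall_x - eye.x) / dx
    if t <= 0:
        raise ValueError("the wall is behind the eye along this sight line")
    return (eye.y + t * (point.y - eye.y), eye.z + t * (point.z - eye.z))


# Closed loop: every new tracked eye position gives a new drawing position.
virtual_post_top = Vec3(0.0, 0.0, 0.1)  # hypothetical virtual object at the arena centre
for eye in (Vec3(-0.2, 0.0, 0.05), Vec3(-0.2, 0.1, 0.05)):
    print(eye, "->", project_to_wall(eye, virtual_post_top, wall_x=0.25))
```

When the eye moves 0.1 m to one side, the drawn point moves the other way on the wall (from y = 0 to about y = -0.125). That motion parallax is what makes the object appear to sit in the middle of the arena rather than on the wall; a real system would render whole scenes onto several walls at display frame rate.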

Georgia - I see. So it’s a holodeck for animals. In this environment, can you make it look as if there are obstacles in the way? So it’s not just running around a space looking at the walls; to the animal, it looks as if there’s stuff in the middle?

Andrew - Yeah, exactly. Some of the first experiments we did were validation experiments to test how an animal would behave, and we did this with flies and fish. We put a simple vertical post, a cylinder, in the middle of the arena - a real one - and we quantified the animals’ behaviour with the same tracking system. We measured how they swam or flew around this post, then we did the same thing with a virtual post instead of a real one, and calculated the same statistics again. The animals behaved indistinguishably towards the virtual post and the real post, so I think it’s fair to call this a virtual reality for the animals.
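The interview doesn't say which statistics were computed, so the following only illustrates the idea of comparing behaviour around a real post with behaviour around a virtual one. It assumes each condition yields a list of measurements (for example, distances from the post) and compares the two samples with the two-sample Kolmogorov-Smirnov statistic, the largest gap between their empirical cumulative distributions. `ks_statistic` is a hypothetical helper and the numbers are made up.

```python
from bisect import bisect_right


def ks_statistic(a: list[float], b: list[float]) -> float:
    """Two-sample Kolmogorov-Smirnov statistic D: the largest vertical gap
    between the empirical CDFs of samples a and b (0 = identical, 1 = disjoint)."""
    if not a or not b:
        raise ValueError("both samples must be non-empty")
    a_sorted, b_sorted = sorted(a), sorted(b)
    d = 0.0
    # The gap between two step-function CDFs is largest at one of the data points.
    for x in a_sorted + b_sorted:
        fa = bisect_right(a_sorted, x) / len(a_sorted)  # fraction of a that is <= x
        fb = bisect_right(b_sorted, x) / len(b_sorted)  # fraction of b that is <= x
        d = max(d, abs(fa - fb))
    return d


# Made-up distances (cm) from the post, purely for illustration.
real_post = [2.1, 3.4, 3.9, 5.0, 6.2]
virtual_post = [2.3, 3.1, 4.2, 4.8, 6.0]
print(ks_statistic(real_post, virtual_post))
```

A small D on its own doesn't establish that two behaviours are the same; in practice one would attach a significance test (for example `scipy.stats.ks_2samp`) and look at several behavioural measures, not just one.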

Georgia - Which animals can go in it? Animals have very, very different visual systems from each other, so does it work on all of them?

Andrew - You’re completely right that animals have very different visual systems. For example, our displays are tuned for humans: in terms of the spectrum, the colours, we use RGB, which is designed for human vision, and that works pretty well for the kinds of experiments we’ve been doing and are interested in. But a lot of animals are sensitive to ultraviolet, for example, and we’d have to think about some kind of modification or different display technology if we wanted to give a really good virtual reality in the ultraviolet spectrum.

But in terms of what kinds of animals? We’re really interested in model species that are used commonly in neuroscience: rodents, fish like zebrafish, and Drosophila (fruit flies). Those are the ones we spend the most effort testing.

Georgia - What kind of research do you foresee people doing with this?

Andrew - This technique really allows people who are interested in studying interactions between an animal and its environment, or between an animal and other animals, to do the kinds of experiments they weren’t able to do before. There’s the area I come from, which is the interaction of visual processing with higher-level processing like spatial cognition: how does an animal know where it is and what it’s seeing? You can imagine that by being able to play games with an animal, like having it teleport from A to B, we can ask, for example, whether it is building a map of its environment or not. Historically, people have done experiments on freely moving animals, and there, of course, it’s very difficult to control the visual world. Or they’ve done these restrained-animal virtual realities, and there the feedback the animal gets is not natural. So the innovation here is freely moving virtual reality.

10:59 - Down to Earth: Spotting Skin Cancer

with Stuart Higgins, UCL

Down To Earth takes a look at tech intended for space which has since found a new home down here on Earth... This week - how searching for stars could help detect a deadly illness…

Stuart - What happens when the science and technology of space comes Down to Earth.

This is Down to Earth from the Naked Scientists where we look at how the technology and science developed for space is used back on planet Earth.

I’m Dr Stuart Higgins, and today we’re talking about how the mathematics used to spot X-rays coming from exploding stars can also be used to catch the early signs of skin cancer.

On June the 1st 1990, the German Aerospace Centre, with the UK and US, launched ROSAT, the ROentgen SATellite. It was designed to allow researchers to search for X-rays coming from across the universe. However, scientists needed a way to identify the faint traces of X-rays in the data the satellite was producing, a bit like trying to hear a voice under a background of static. They needed to separate the signals from the noise.

This led researchers at the Max Planck Institute for Extraterrestrial Physics in Germany to develop the scaling index method. It’s an analysis technique which identifies structures from within a dataset.

Imagine a digital photograph of the seaside: a beautiful golden beach, the sea lapping at the shore. The scaling index method would take each pixel in that image, draw a circle around it, and check whether its neighbouring pixels look similar. The algorithm then uses that information to work out whether the pixel belongs to, say, a straight line like the horizon, a flat plane like the sky, or a point object like a seagull up above. Now imagine the same scene on a grey, cloudy day; it’s tricky to see that seagull. The algorithm’s power is that it can still pick out the seagull from the noise of the grey clouds.
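A minimal sketch of the idea, assuming the common two-radius form of a scaling index: count the neighbours N(r) of a point within a small radius r1 and a larger radius r2, and measure how fast that count grows, alpha = (log N(r2) - log N(r1)) / (log r2 - log r1). Points on a line give alpha near 1, points filling a plane near 2, and an isolated point 0, matching the horizon, sky and seagull above. The published method may differ in details such as how neighbours are weighted or how pixel brightness enters; here pixels are simply treated as 2D points, and `scaling_index` is a hypothetical name.

```python
import math


def scaling_index(points: list[tuple[float, float]],
                  centre: tuple[float, float], r1: float, r2: float) -> float:
    """How fast the neighbour count around `centre` grows between radii r1 < r2.

    Roughly 0 for an isolated point, 1 for a line, 2 for a filled plane.
    `centre` is assumed to be one of `points`, so each count is at least 1.
    """
    if not 0 < r1 < r2:
        raise ValueError("need 0 < r1 < r2")

    def count(r: float) -> int:
        # Number of points (including the centre itself) within distance r.
        return sum(1 for p in points if math.dist(p, centre) <= r)

    n1, n2 = count(r1), count(r2)
    if n1 == 0:
        raise ValueError("no points lie within r1 of the centre")
    return (math.log(n2) - math.log(n1)) / (math.log(r2) - math.log(r1))


# A line of pixels (the horizon).
horizon = [(float(i), 0.0) for i in range(-50, 51)]
# A filled square of pixels (the sky).
sky = [(float(i), float(j)) for i in range(-30, 31) for j in range(-30, 31)]
# A lone pixel with nothing nearby (the seagull).
seagull = [(0.0, 0.0), (100.0, 100.0)]

for name, pts in (("horizon", horizon), ("sky", sky), ("seagull", seagull)):
    print(name, round(scaling_index(pts, (0.0, 0.0), 4.0, 16.0), 2))
```

Computing alpha around every pixel gives a map in which point-like, line-like and area-like structures can be told apart by their values.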

But this algorithm could be applied to more than just astrophysics or, indeed, seagull spotting. The same researchers in Germany teamed up with medical doctors to invent a system for spotting the early signs of skin cancer. Skin cancer can look like a darkening in the pigmentation of the skin and can be challenging to identify properly. In their system, doctors take magnified digital images of a patient’s skin and apply the scaling index method to identify subtle changes and variations in colour. The algorithm scores how likely it is that the variation is due to melanoma, a type of skin cancer. The big advantage is that the doctor doesn’t need to be an expert in melanoma to use the system: the algorithm does the work, helping the doctor make an accurate diagnosis.

So that’s how the mathematics of spotting X-rays from exploding stars is being used to help identify skin cancers back on Earth.

14:02 - Indiana Jones and the Mystery of the Maths Tablet

with Daniel Mansfield, University of New South Wales

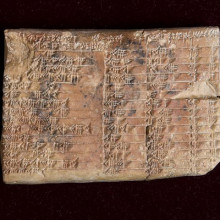

Da da DA DAAA, da da daaa! Indiana Jones may be everyone's favourite fictional archaeologist, but he might have been based on a real person. An ancient mathematical mystery has been solved this week by two Australian researchers who have been studying a 3,700-year-old clay tablet made by the Babylonians. It was first uncovered about a century ago in what is now southern Iraq, and its owner ultimately left it to Columbia University in America. Historians knew that the writing on the tablet was numerical, but they didn’t realise its significance. Now mathematicians Norman Wildberger and Daniel Mansfield, from the University of New South Wales in Sydney, have deciphered what they think is the true meaning of the tablet. It describes, they reckon, a previously unknown form of trigonometry for finding the sizes of right-angled triangles, and, get this, it predates Pythagoras by 1000 years… Izzie Clarke caught up with Daniel Mansfield to hear what the tablet looks like, and what it shows…

Daniel - It’s rectangular, it’s surprisingly heavy, and it’s written in a very neat hand. Someone went to a great deal of trouble to rule this thing out neatly and carefully write down a whole sequence of numbers. The tablet was obtained by George Arthur Plimpton, an American publisher, and it was sold to him by a fellow called Edgar Banks, who, they say, is the real person on whom Indiana Jones was based. He was an archaeologist, an academic, an adventurer, and an obtainer of rare antiquities. He’s even got the same hat. He sold it to Plimpton, who was a publisher and collector of ancient mathematical texts but, of course, Plimpton just knew it had maths on it. No-one really understood exactly what it was about, and it wasn’t known to be anything special at all until 1945, when Neugebauer and Sachs discovered that it actually contained Pythagorean triples.

Izzie - And what exactly are they?

Daniel - They’re three numbers which describe a right triangle. Numbers which describe a right triangle are very special because they obey what we call Pythagoras's theorem. So, if you have a triangle with sides A, B and C, where C is the hypotenuse, then the numbers are related: A² + B² = C². And what Neugebauer and Sachs showed was that Plimpton 322 actually contained Pythagorean triples, which is amazing because it shows that the Babylonians knew about Pythagoras's theorem a thousand years before Pythagoras was even born.
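The relationship Daniel describes is easy to check directly. The sketch below tests whether three whole numbers form a Pythagorean triple, and generates triples with Euclid's formula (a = m² - n², b = 2mn, c = m² + n² for whole numbers m > n > 0). Euclid's formula is a later Greek result used here purely for illustration; nothing in the interview says the Babylonians produced their triples this way, and the function names are hypothetical.

```python
def is_pythagorean_triple(a: int, b: int, c: int) -> bool:
    """True if positive whole numbers a and b are the legs, and c the hypotenuse,
    of a right triangle, i.e. a^2 + b^2 == c^2."""
    return a > 0 and b > 0 and a * a + b * b == c * c


def euclid_triple(m: int, n: int) -> tuple[int, int, int]:
    """Euclid's formula: for whole numbers m > n > 0,
    (m^2 - n^2, 2mn, m^2 + n^2) is always a Pythagorean triple."""
    if not m > n > 0:
        raise ValueError("need m > n > 0")
    return (m * m - n * n, 2 * m * n, m * m + n * n)


print(is_pythagorean_triple(3, 4, 5))  # True
print(euclid_triple(12, 5))            # (119, 120, 169)
```

Exact integer arithmetic matters here: checking a² + b² == c² with whole numbers never suffers from the rounding that taking square roots would introduce.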

Izzie - That is incredible. What do those numbers mean?

Daniel - Well, that’s what people have really been scratching their heads about. For 70 years we’ve known that this is a very special, highly sophisticated tablet, but why does it have Pythagorean triples on it? What is the meaning of it? It’s not just a random sequence; it’s an ordered series of Pythagorean triples, and the ordering is very special. It starts with something that’s almost a right isosceles triangle, or almost half a square, and then it goes down row by row to flatter triangles. I don’t want to use words like “angle of inclination” because they don’t exist at this time. The triangles get flatter, if you like.

Izzie - Now trigonometry, which we all learn in high school, uses angles. If they weren't using angles, how did the Babylonians do trigonometry?

Daniel - They used ratios. This is a thousand years before angles were even thought of, but you don’t need the notion of an angle to study trigonometry; you can use ratios alone. In fact, that’s what we propose Plimpton 322 was all about: just a table of ratios of the sides of a right triangle. They used ratios to describe steepness. So you see things like descriptions of a canal, where they talk about how much width per unit depth the triangle requires. A long ramp might require a lot of width per unit depth; for a really steep one - what we call steep - the Babylonians would say it needs a very short amount of width per unit depth.
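To illustrate describing steepness with ratios alone, with no angles, here is a small sketch that gives each right triangle an exact "width per unit depth" (longer leg divided by shorter leg) and orders a list from nearly half a square (ratio just above 1) down to flatter triangles, mirroring the ordering described for the tablet. It is not a reconstruction of the tablet's actual columns; the example triples and the names `width_per_unit_depth` and `order_steep_to_flat` are illustrative assumptions.

```python
from fractions import Fraction


def width_per_unit_depth(triple: tuple[int, int, int]) -> Fraction:
    """Exact ratio of the longer leg to the shorter leg.

    Exactly 1 would be half a square; larger values mean flatter triangles,
    like a long ramp needing a lot of width for each unit of depth.
    """
    a, b, _hypotenuse = triple
    shorter, longer = sorted((a, b))
    return Fraction(longer, shorter)


def order_steep_to_flat(triples: list[tuple[int, int, int]]) -> list[tuple[int, int, int]]:
    """Sort triangles from nearly half a square to the flattest, using ratios only."""
    return sorted(triples, key=width_per_unit_depth)


examples = [(3, 4, 5), (5, 12, 13), (119, 120, 169), (8, 15, 17)]
for t in order_steep_to_flat(examples):
    print(t, width_per_unit_depth(t))
```

Using `Fraction` keeps every ratio exact, which is closer in spirit to a table of exact numbers than floating-point values or angles would be.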

Izzie - What did the Babylonians use trigonometry for?

Daniel - It’s very difficult to say what they used it for; we really don’t know. All we have to explore this world is this tiny window for which we have evidence. Now we have something which appears to be a trigonometric table, but how they used it is really open to speculation. I’m happy to speculate on that. Personally, I think it was used for surveying but, I have to say, all that we have is what’s written on the tablet.

Izzie - This is all mathematics from literally over 3,000 years ago. Can we use this new - well, not new, ancient - Babylonian approach today?

Daniel - Well, I’d love to see people find a way to use this trigonometry. It’s really out there for people to start using again now that we know what it looks like. I personally think that we should use it to teach trigonometry in school. It’s a great way for people to understand triangles, and it doesn’t require them to understand what the square root of two is, or trigonometric functions like sin, cos and tan, or even an angle. You can study triangles without any of these things. It’s very early days; we’ve only just rediscovered this. Certainly, I think there’s a place for it in the world.

19:44 - AI researchers sound alarm against killer robots

with Peter Clarke, Resurgo Genetics

War has happened throughout human history and, chances are, it will continue to do so in the future. With this in mind, it’s important to ensure that, if it does occur, it’s carried out as humanely as possible, which is why treaties such as the Geneva Convention exist. Violating certain parts of this treaty, for example by using chemical and biological weapons, constitutes a war crime. With recent developments in artificial intelligence, a new version of the convention may be required. There have been two major revolutions in warfare so far, gunpowder and nuclear weapons, and the use of artificial intelligence is seen by many as the third. In an open letter to the United Nations, more than 100 leading robotics experts, including Elon Musk, Stephen Hawking, and the founder of Google’s DeepMind, have called for a ban on the use of AI in managing weapons systems. Tom Crawford spoke to Peter Clarke, founder of Resurgo Genetics and an expert in machine learning…

Peter - The aim of this letter was to try and head off the possibility of an AI arms race. Most of the people developing these AI and robotic technologies have no wish to create killer robots. But once this technology is in place, and becomes a massively powerful tool in the suite of armaments that nation states or other actors have to fight wars, it will, inevitably, get used. So what the letter is trying to do is trigger a debate about international legislation, much in the same way that we have for nuclear weapons or chemical weapons. We need to start thinking about a similar type of international legislation for these autonomous weapons systems, because they are going to transform the nature of warfare, and we need to prepare for that.

Tom - We don’t currently have fully autonomous killing machines, but I feel like we’re very close with some of the technology that exists now. For example, drones which are flown by remote control by a pilot in another country and can drop bombs which, of course, will kill people. So how are these autonomous systems different to something like that, which still has the human element?

Peter - With the human element, you’re always subject to your own personal morality and ethics: even if you happen to be separated by many thousands of miles from these events, you still realise, and have the capacity to understand, that you’re destroying people’s lives. With an autonomous system programmed with a particular set of objectives, you’re removing that human moral and ethical constraint on the behaviour of the system and, as soon as that’s lost, we enter a very, very different world.

AI - Intruder, intruders must be destroyed - pow, pow, pow, pow, pow

Tom - Is this a case of a robot with a gun just walking around and shooting people that it thinks are a threat or that are enemies?

Peter - This is the interesting thing. I think that we all have these images from Terminator and other films of lumbering great robots hunting people down but, actually, the reality could be very different. What you may have is something along the lines of swarms of mini autonomous drones carrying small packets of explosives that could target individuals in a population, so you could have swarms of millions of these things sweeping over cities. There are many very different possible realisations of this type of technology, but we know that, in whatever form, they will transform warfare.

Tom - How would such an autonomous system even go about knowing specifically who to target?

Peter - I think much in the same way that, for example, credit reference decisions are made nowadays, where you’re aggregating information on people’s previous payment histories. Or as marketing gets more and more targeted, you can build up very, very precise profiles of who people are and target them for advertising autonomously. These same types of techniques can be used to profile people in all sorts of other ways. You could imagine a situation in the future whereby an authoritarian regime could use this same type of profiling to target individuals in the population who were a threat to its power structures, and have the entire chain not be subject to human decision. So it’s really taking all of the technologies that exist now in terms of robotic weapons systems, and tying them into tools that allow very precise profiling of a population. We’re not talking about technologies that are far-off, sci-fi, future fantasies; we’re talking about technologies that are available now, and could be put together now into a system which could be catastrophic for the globe.

26:21 - Existential risk and maverick science

with Seán Ó hÉigeartaigh, Huw Price, Hugh Hunt, Adrian Currie, Cailin O'Connor, Heather Douglas.

When the end is nigh, will scientists be able to save the day? Connie Orbach explores whether science can predict and prevent the end of the Earth, starting with a rather scary image of the future...

Connie - New York is underwater. AI robots have enslaved humanity and genetically engineered mosquitoes have led to an airborne super-malaria. No… this isn’t the start of Hollywood’s next apocalyptic blockbuster, but a just-about-possible vision of the future. And in this part of the show we’re going to be delving into the world of extraordinary catastrophic risks asking, if the worst happens, does science have our back? I’m Connie Orbach, and this is the Naked Scientists…

To start us off on our journey let’s hear from the experts…

Sean - My name is Seán Ó hÉigeartaigh. I’m the Executive Director of the Centre for the Study of Existential Risk in Cambridge.

Connie - Yeah, that’s right: these are all examples of existential risk, the type of thing that, if it did happen, might knock humanity out completely.

Sean - Risks that might threaten human extinction or the collapse of our global civilisation.

Connie - Sounds pretty scary, right - the threat of human extinction? But don’t worry…

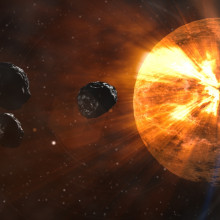

Sean - Most of the risks we look at are high impact, but quite low probability. Not all of them, though: climate change is an example of one that is quite high probability, I think. It’s likely that we will see more global pandemic outbreaks that will cause the deaths of millions of people, like the Spanish flu did last century. Then there are things like, for example, being hit by a meteor like the one that wiped out the dinosaurs, which is entirely scientifically plausible. But the last one of those hit us 66 million years ago which, if you think about it, is 660 thousand centuries, so the chance that it happens in the next century is vanishingly small.

So we tend to think of ourselves as, in some ways, an insurance policy. When the stakes are so high, when the consequences are so big, we think that some people should be working on these things. It’s not necessarily what everybody in the public should be worried about. It’s the same way that it makes sense to take out insurance in case your house burns down: you shouldn't be worrying about your house burning down all the time; you should just be taking the precaution of not leaving the oven on.
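Seán's back-of-the-envelope argument can be made explicit. Assuming, very crudely, that dinosaur-killer impacts arrive at random (a Poisson process) at a rate of about one per 66 million years, the chance of at least one in the next century is 1 - exp(-1/660,000), roughly 1.5 in a million. A single past event is a very weak basis for estimating a rate, so only the order of magnitude is meaningful; the constant and function names below are illustrative.

```python
import math

YEARS_SINCE_LAST_BIG_IMPACT = 66_000_000
CENTURIES_SINCE = YEARS_SINCE_LAST_BIG_IMPACT // 100  # 660,000 centuries


def prob_at_least_one(rate_per_century: float, centuries: float = 1.0) -> float:
    """Poisson model: probability of one or more events in the time window.
    Uses expm1 so that tiny probabilities keep full precision."""
    return -math.expm1(-rate_per_century * centuries)


# Assumption: one such impact per 66 million years, spread evenly at random.
rate = 1 / CENTURIES_SINCE
p = prob_at_least_one(rate)
print(f"P(big impact in the next century) ~ {p:.2e}")
```

For rare events the answer is almost exactly the rate itself (about 1/660,000), which is why dividing 66 million years into centuries, as Seán does, already gives the right ballpark.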

Connie - Phew! So it’s just an insurance policy. And luckily, we have clever insurers like those at the Centre for the Study of Existential Risk, otherwise known as CSER, and they’re willing to provide cover. Then, they and others like them can feed back to science and policy and, soon enough, we’ll all be on a fully comprehensive plan - i’s dotted and t’s crossed. Goodbye existential risk…

Well, of course, it’s not that easy. Not least because that would be the end of the programme. But also, science, as it stands, just isn’t that set up to deal with or predict all of these sorts of catastrophes and really, CSER is just a small outpost fighting against a sea of traditional scientific practice. Sean’s colleague, Adrian Currie, laid out the issues for me…

Adrian - The worry with existential risk is that thinking about these low-probability, high-impact events involves a bunch of features which make science, as it’s currently set up, really ill-equipped to deal with them. I’ll really quickly list those for you. First, you’re dealing with unprecedented events. You don’t have any evidence, in a sense; you don’t have any analogies. With big rocks hitting the Earth, we know that big rocks hit the Earth in the past; we can go and look.

Robots becoming sentient and chasing us around is something that we don’t really have analogies for, so it’s very hard to have much evidence. This means that your science is going to have to be speculative: what you say is almost always going to be wrong, and it’s almost never going to have the kind of evidential support we expect scientific publications to have. So, in order to do this scientifically, to get the ball rolling, we have to start figuring out what the landscape is like. We need to pull back some of those standards about what good science looks like and perhaps have different standards.

So instead of saying something like “this deserves to be published because it’s got a positive result which has hit my criteria for statistical significance,” we might say something like “this deserves to be published because it opens up an area that we haven’t thought about very hard yet,” or because it gets something wrong but in a really importantly interesting way, or because it has a negative result or a set of negative results in it. These are different criteria for success, and I think if we’re going to start thinking systematically about these kinds of events, that requires this sort of speculative thinking.

Connie - So you see the problem. We have these big existential risks and, if they did happen, they would be really, truly catastrophic. But they're also really unlikely and unpredictable, and science, which rewards getting things right, pushes people towards things which are likely and predictable. Because of this, no-one really wants to look into existential risk. In fact, it’s a bit of a career downer. Here’s Sean again…

Sean - I guess you can divide the people who are thinking about this into two communities. One is the community, like our centre and other centres like it, who have a specific remit to think the unthinkable, if you will, and we’re allowed to be a little bit exploratory and a little bit weird, and that’s fine. But then there’s a whole other community of people who have skin in the game, who are really involved in these emerging sciences, and who will sometimes feel like they need to raise concerns about a particular consequence of a scientific trajectory, or who will have an idea that might provide a global solution but might overturn an existing set of theories.

I think it’s particularly challenging for those people. One example might be virology research in the last decade or so. There’s been a lot of debate about the importance, but also the potential risks, of doing research to modify the influenza virus. For a number of years, several individuals were raising concerns both about the accidental release of modified pathogens from laboratories, and about the potential risks of publishing this research, because it might give bad people bad ideas.

I think that’s a very challenging thing to do. Your whole scientific community is doing this research because, firstly, advancing our scientific understanding is a good thing and, secondly, it helps us to provide tools against natural pandemics. It’s a very hard thing to stand up and say to your colleagues that what you’re doing might actually pose as big a risk as the one it is meant to protect against. It puts those people in a very uncomfortable position where they may upset their peers; they may alienate the funders who might support their own work; they may even bring their own field into disrepute by causing a public panic about certain types of research.

So the people who either have a new idea that overturns existing theories, or have a new concern that pushes against the prevailing ideas in the community they’re part of, are in a very challenging, but very important, position.

Connie - And when people do speak up, they often get labelled as cranks. There are the 1% of scientists who think climate change is a myth, or the proponents of traditional Chinese medicine. In fiction, there’s Back to the Future’s Doc Brown and, from history, there are the many scientists whose names have been lost to the passage of time.

But there are also those who were rejected initially and later proven right: Galileo and his predecessors, who suggested that the Earth orbited the Sun, or Darwin and his theory of evolution. These figures populate our myths and media - sometimes they’re wrong, sometimes they’re right. But, either way, they’re nearly always dismissed.

So there are two problems here: 1) how do we make science more open to these sorts of people (let’s call them mavericks), so they can think outside the realms of normal science and help us spot and mitigate existential risk? And 2) how do we tell who’s crazy and who’s correct? Well, first of all, we don’t call them crazy…

Huw - What we’re trying to deal with is the fact that some of these are people we need to listen to, so using terminology like “crazy,” which is the terminology we use to push them aside, is what we need to try to avoid.

Connie - Oops! Sorry Huw - good point. That’s Huw Price, Academic Director of Cambridge’s Centre for the Study of Existential Risk; he’s been pondering a solution, working title “The Maverick Room.”

Huw - Well, what we need are scientific and technological whistleblowers: the kind of people who are thinking in a slightly abnormal way, who see something that other people don’t see. We need to make it possible for them to put up their hand and get listened to in those sorts of circumstances.

What tends to happen, as we know with whistleblowers in other fields, is that they get attacked by their peers; they get marginalised and ostracised. The idea of what we call a maverick room is that we’re interested in whether it would be possible to create a kind of safe space where, instead of the norms and sociology of science pushing these people out, they could be listened to.

Connie - Talk me through this. How would this maverick room, this safe space, look? Is it a physical building, is it an internet chat room? What are the practicalities around this; what can I imagine for this space for mavericks?

Huw - Okay. There’s no reason for it to be a physical space. What needs to happen is that, to get into it, the mavericks need to get a certain kind of recognition, so they need to pass through some sort of competitive process. Suppose that there are ten British mavericks every year, and they each get a little prize as one of the ten mavericks of 2017, but that prize comes with status. Hopefully, there'd be some heavyweight scientific institutions backing this. The mavericks would then have the backing of those institutions, so somebody who wants to criticise them will be criticising the institutions.

Of course, people may want to do that - you can imagine the Daily Mail having a field day. But there’s something to push back with: the reputations of the institutions standing behind it. With that kind of protection, the people who’ve been through that selection process can’t simply be dismissed by their regular peers elsewhere in science.

Connie - Because the problem here is this idea of reputation?

Huw - Yeah, that’s right. It’s all about reputation. What tends to happen, especially when there’s a group of people following some piece of science that the mainstream dismisses, is that by following it they damage their reputation, and you get what I call a reputation trap. Anybody else who even takes them seriously, in the sense of just going over there to look at what they’re doing, risks falling into the trap themselves and being dismissed by the mainstream. So part of what we’re doing here is deconstructing the reputation trap.

Connie - For Huw it’s all about reputation and giving people a safe, respected place where they can try things out protected from ridicule and risk. But this doesn’t deal with my second problem: we still have to decide who's in and who’s out of this safe space. How do we do that when everyone who goes against normal science has the potential to be a genius?

Huw - Exactly, exactly. Suppose you just set up a committee. In effect, some funding programmes are already doing this, because they recognise the value of encouraging innovation in science. They say they want blue-sky, out-of-the-box, unconventional thinking. But then they appoint committees who, of course, think in conventional ways, so it’s very hard for those committees to actually pick the unconventional thinkers or, at least, to pick them in such a way that they have a reasonable probability of picking winners. Somehow we have to find an incentive structure for the committees so they get rewarded when they pick somebody that an ordinary committee wouldn’t have picked, but who turns out to have something important to say.

Connie - Still a few wrinkles to iron out there, then, but Huw’s on the case.

This week we’re asking if science is set up to deal with some of humanity’s bigger risks. Huw Price’s idea of a maverick room seems like a nice, fairly simple way to encourage creative thinking in science. But who are these mavericks we’re trying to protect? Well, Hugh Hunt, and that’s a different Hugh - keep track - is an engineer at the University of Cambridge, and while he may not consider himself a maverick, his field is definitely a little controversial among scientists and non-scientists alike.

Hugh - We’re in a position with climate change where it looks like we’re not going to meet our CO2 emissions targets. We’re going to have to figure out a way of cooling the planet, particularly a way of re-freezing the Arctic, because it’s melting much faster than we’d like to think. Geoengineering is about man-made interventions in the climate.

Connie - Can you give me an example of the sort of man-made interventions we could be thinking of?

Hugh - Geoengineering is divided up into two broad categories: SRM, which means solar radiation management, and CDR, which stands for carbon dioxide removal.

SRM is about reflecting the Sun’s rays back out into space. We can make clouds whiter so that they reflect sunlight out to space, or we can put stuff into the atmosphere. It’s been proposed that we emulate the effect of a volcanic eruption by putting sulphur dioxide up into the stratosphere, which is what volcanoes do, and that causes global cooling. We could do things like putting mirrors into space - a bit dramatic. These are all SRM, or solar radiation management, techniques.

Carbon dioxide removal is about, for instance, getting the oceans to absorb more carbon dioxide by making algae and so on grow rapidly, so you could seed the oceans with iron filings or something. Or, perhaps, we could capture carbon dioxide from the atmosphere and pump it deep underground - carbon sequestration, or carbon capture and storage.

But all of these geoengineering techniques are big exercises. And they're quite scary because we’d be manipulating the climate.

Connie - What sort of reception do you find you get within this field for doing these sorts of things?

Hugh - Geoengineering does seem to be a bit of a Frankenstein science, and we tend to be categorised as being in some way evil. Why would anyone want to mess up our climate? But we’re currently pumping about 35 billion tons of carbon dioxide into the atmosphere every year; that’s about 5 tons per person on this planet every year - that’s enormous. So the idea that we shouldn’t consider doing something to clean up the mess that we’ve made, to me that’s what would be irresponsible. I think we ought to be looking at geoengineering as a responsible means of dealing with the mess we’ve made.

Connie - What is the situation with geoengineering? People have a kind of negative idea about it, but is it still happening? Is there still research going on? What’s the reality of the situation?

Hugh - Geoengineering is, I think, inevitable. But, unfortunately, research in geoengineering is going very, very slowly. It’s considered to be unwise to research technologies to fix the climate because maybe that means we’re going to take our foot off the pedal in terms of trying to reduce our emissions. That’s a reasonable concern, but the problem is that we will probably want geoengineering in ten years’ time and we won’t be ready to do it.

Connie - So what would you change so that you could be working on this?

Hugh - I think it ought to be easier to do small-scale outdoor experiments on geoengineering technologies. At the moment, it’s almost impossible to do outdoor experiments without people getting concerned about the slippery slope: that if you start with a small experiment in geoengineering, you’ll end up doing geoengineering at full scale. Well, I think we just have to live with that as an issue, because we’ve got to start these experiments to develop our insurance policy against not achieving our targets for carbon dioxide emissions.

What would I change? I think I would allow small-scale experiments at, say, one millionth of full scale. That would really help us move the science of geoengineering forward.

Connie - As I said, pretty controversial. The pros and cons of geoengineering could do with a whole hour to themselves. But, in terms of how science treats its mavericks, Hugh is an interesting sample of one, especially as, on the scale of existential risks, extreme climate change is fairly high up there as a likely possibility.

So let’s say, for the purpose of this argument, we decide that, considering the possible impacts of things like extreme climate change, giant asteroids and unregulated use of artificial intelligence, some fringe science is worth exploring, and that there should be an outlet for people willing to battle against mainstream thinking. Is a maverick room really the right way to go about this?

Firstly, it puts the responsibility for our salvation on only a handful of shoulders. Huw Price suggested ten British mavericks a year. It seems a bit like saving a sinking ship by bailing out water with a teacup. And even if our ship isn’t actually sinking, these sorts of risks are broad. Can a handful of scientists really be expected to spot every iceberg in the ocean?

And finally, who are these mavericks? The examples I’ve given and people I’ve interviewed so far are all white men. Does that in itself limit what we can do?

Cailin - Diversity matters for science, especially diversity of background, because people who have different life experiences notice different things in the world and make different assumptions about it.

Connie - That’s the University of California, Irvine’s Cailin O’Connor.

Cailin - I work on things at the intersection of philosophy, biology and economics. The first primatologists studied male primates because they were men. Then, when women joined the field, they studied the female primates and learned all these other things about them. So they made these special discoveries motivated by their personal identities.

In the case of existential risks, there can be cases where particular populations are at special risk. If we think about global warming, for example, people on low-lying islands face a special, existential risk that doesn’t necessarily threaten people in other countries. If those people on low-lying islands don’t have access to science, if they don’t have social security or job security, if they’re not able to join scientific communities, that might really affect the way we think about these kinds of risks. It might affect the sort of science we do about them.

Connie - What can we do to change that and to make it more open so that we can have more of these conversations?

Cailin - One thing that some people have pointed out as a good way to solve these kinds of problems is funding early-career researchers, because young scientists tend to be more diverse than older scientists. Just as a historical path dependency, there used to be more white people and men in science; now there are more people of colour and more women. So, if you fund young researchers, they’re going to be a more diverse bunch in general.

A lot of people have pointed out that when you have the opportunity to set up some kind of discussion, if you set up a maverick room, say, you have a lot of control over who you invite to the table. So, in cases like that, you can just say let’s get different voices in the room and hear what different people have to say. Maybe that will tell us about perspectives that wouldn’t be obvious to us.

Connie - But also, if you have a maverick room, you’re dependent on already knowing who those people are, and there’s an issue there, no?

Cailin - Yeah, sure there is. Science and academia are based on networks of people knowing each other, and who gets invited to conferences and groups like that depends a lot on just who you know. That’s a challenge that may be hard to solve, but maybe it’s people’s responsibility to at least look for other voices to bring to their discussions.

Connie - Yes. Our maverick room as a safe space would provide the protection needed for more diverse thinkers to speak out. But, with science the way it is, finding those people in the first place might present its own challenge. Is there a way to encourage creative thinking at a wider level? Remember Adrian Currie from earlier… He thinks there might be.

Adrian - I think one way of doing it is really changing the incentive structures that tend to create conservative science. One of my colleagues, Shahar Avin, has really interesting ideas about funding science through lotteries instead of through peer review. Peer review is a process where, in effect, you write your paper or your funding application, and your peers, that is to say other scientists who work in the same discipline and are experts in the same thing you’re an expert in, look at your work and basically say whether it’s good or not, whether it ought to be published, whether it ought to be funded.

One thing that seems great about this is that it encourages inter-subjectivity - the fancy word we would use for people agreeing on stuff. That seems important; it builds consensus. But the problem is that it also serves a kind of gatekeeping function. Often, if you’re a reviewer and you get a piece of work and you think this does not fit the usual ways we do things, you’re going to reject it. So Shahar Avin’s thought is: what if you remove that aspect and have lotteries determining funding instead? That means, first, you don’t have to spend so much time writing these annoying grants, but second, it removes that incentive to be particularly pleasing to your reviewers. That’s an example of how you might encourage this kind of maverick thinking without people having to be anti-establishment, or rebels, or antisocial in some respects.

Connie - That all sounds very nice, and everyone likes a lottery, but it also sounds like you could end up with a lot of low-quality stuff being done. There’s a reason for peer review, right? It’s quality control, and if you’ve got a random lottery of science, surely that’s going to cause you some issues with what science actually gets done? There’s also the diversity of the types of science you could end up with. Roll a die six times and you could get a one every time; you could end up with everything clustered in one area.

Adrian - You’re absolutely right that a pure lottery doesn’t look very nice. What you want is the ideal combination of these things. Again, I’m just drawing on Shahar’s work here: one way you could do it would be to have a kind of lower bar, so you do have a review process and the reviewers basically throw out a bunch of applications. Then there are the ones that meet some agreed, relatively low standard, and maybe some that everyone definitely says yes to. So you can imagine the ones in the middle, the ones that aren’t definitely no and aren’t definitely yes, and you do a lottery for those middle ones.
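The middle-ground scheme Adrian describes amounts to a three-band procedure: reject applications below a bar, fund the clear winners, and draw lots among the rest. The Python sketch below is a hypothetical illustration of that idea under stated assumptions, not Shahar Avin’s actual proposal; the 0-10 scoring scale, the two thresholds (`low_bar`, `high_bar`), the number of funding `slots` and the proposal names are all made up for the example.

```python
import random


def partial_lottery(scores, low_bar, high_bar, slots, seed=None):
    """Hypothetical review-then-lottery funding scheme (illustrative only).

    scores:   dict of proposal name -> reviewer score (assumed 0-10 scale)
    low_bar:  proposals scoring below this are rejected outright
    high_bar: proposals scoring at or above this are funded outright
    slots:    how many proposals the (assumed) budget can fund
    """
    if slots < 0:
        raise ValueError("slots must be non-negative")
    rng = random.Random(seed)

    # The reviewers' clear "yes" pile is funded first, best-scored first,
    # trimmed if there are more clear winners than slots.
    definite_yes = sorted(
        (p for p, s in scores.items() if s >= high_bar),
        key=lambda p: scores[p],
        reverse=True,
    )
    funded = definite_yes[:slots]

    # The middle band: not definitely no, not definitely yes.
    middle = [p for p, s in scores.items() if low_bar <= s < high_bar]

    # Any remaining slots are filled by a uniform random draw from the middle.
    remaining = slots - len(funded)
    funded += rng.sample(middle, min(remaining, len(middle)))
    return funded


if __name__ == "__main__":
    applications = {"A": 9.5, "B": 8.7, "C": 6.0, "D": 5.5, "E": 4.8, "F": 2.0, "G": 1.5}
    print(partial_lottery(applications, low_bar=4.5, high_bar=8.5, slots=4, seed=2017))
```

With these made-up scores, A and B are always funded, F and G never are, and two of C, D and E are picked at random. Fixing `seed` makes a given draw reproducible, which might matter if a funder wanted the lottery to be auditable; leaving it as `None` gives a fresh draw each time.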

Connie - Enabling mavericks more widely seems to deal with some of the problems of the maverick room but, practically, it sounds much harder to put into action. Either way, encouraging scientists to think more creatively must be a positive move in a world that feels ever more unpredictable. However, as a way of combatting existential risk it does seem, well, a little belated. It appears to me that the existential risks we’re talking about are risks related to new technologies: genetic engineering, artificial intelligence and, well, the car. Should science be taking more responsibility for the work it puts out there in the first place? Heather Douglas from the University of Waterloo seems to think so…

Heather - It’s because scientists, so far, are also human beings, and so they also have responsibilities to think about the ways in which their work might be used to exacerbate existential risk, even if that’s not what they intend.

Connie - Well, I think we can agree that all scientists are human beings, but we can also ask how they practically go about taking responsibility because, let’s face it, lots of science carries potential risk but it also carries great potential benefit. We don’t want this sort of work to stop now, do we?

Heather - Certainly scientists are also very much involved in efforts to stem the tide of nuclear proliferation and the risk of nuclear war at various national levels. Some scientist groups have science and diplomacy efforts centred around some of these things, and science and human rights efforts. I think they’re already having conversations about what the responsibilities of scientists are: that those responsibilities are not just to produce new stuff, make new discoveries, and offload it onto an utterly unprepared public. That’s actually not such a great plan. Even if taking more time, being more reflective about the implications of one’s work, and having more conversations about shaping it, even with non-scientists or scientists from other disciplines and expertise, slows science down, that is probably, at this point in time, a valuable trade-off. It’s better to be a bit more reflective and a bit more careful about the directions we take in scientific research, even if it means things don’t move quite as quickly in most areas.

A lot of scientists who work at the science policy interface have noticed that governance is lagging horribly behind our technological innovation. I don’t expect governance to ever catch up. In fact, being on the edge of the new means, in some ways, that there’s always going to be something ungovernable about it, which is why I think responsibility is always going to have to be a big part of it. You can’t just depend on external regulatory agencies to make it work. But if everything feels like it’s accelerating and getting away from us, slowing down is probably an okay thing.

Connie - Slowing down science sounds 1) very hard to do, since it kind of feels like a runaway train at times, and 2) like something that people aren’t going to want to do. Is there really an appetite for this, and a response within the community?

Heather - I think one of the problems with slowing down science is that all the incentives within scientific practice and research are currently stacked towards publishing faster: get your paper out the door, that’s how you get credit, that’s how you get priority. You have to make a discovery first, you have to get the paper in Nature and in Science, so there are all these pressures to do it more quickly. But I think over the past ten years we’ve seen a lot of the costs of that. A lot of the concerns over scientific replication are driven by the desire for speed. A lot of concerns over scientific fraud are driven by the desire for speed.

So I think the scientific community, even internally, is already seeing the problems with this continual pressure to publish more, and more, and more, and wondering how to take the pressure off. But I think the same can be said for the broader societal context: if scientists want to continue to enjoy the support of the public, they have to not just produce results that are reliable and not fraudulent, which is of course true, but also think about the relationship between science and society, and the implications of their work for the broader society.

Connie - Practically, are you hopeful that this can happen?

Heather - Yes, but I’m a terrible optimist. It’s a blind spot of mine. It probably makes it easier to do my work, but I can’t tell you that I think that it’s grounded in a robust data set. But, in my conversations with scientists over the past 15 years, it seems to me that scientists are increasingly aware of the complexities of interfacing with society and are willing, with assistance, to begin to take these things on.

Connie - That was University of Waterloo’s Heather Douglas with a plea for slow science. An idea that does seem to be at odds with Hugh Hunt’s battle against climate change. So how do we balance the books? Maybe it’s a matter of a more nuanced approach to scientific regulation, in general, taking into account how far down the road we are for each risk and acting accordingly.

Whatever we do about existential risk, I can’t emphasise enough how unlikely most of these scenarios are, and mass panic over minute possibilities does not seem helpful. In the words of Seán Ó hÉigeartaigh, “if we’re concerned about our house burning down, the least we can do is not leave the oven on.”
