Senses Month: Can you Hear Me?

Are we heading for a hearing-loss epidemic, and can science step in when the world starts to go quiet? This week, The Naked Scientists go on an odyssey into the science of hearing. Listen in to find out the strange ways our ears decode sounds, get baffled by some auditory illusions and meet someone who can see with their ears.

In this episode

00:49 - A tour of the ear

A tour of the ear

with Professor Brian Moore, University of Cambridge

How does our ear decode the sounds around us? Professor Brian Moore from Cambridge University took Georgia Mills on a guided tour of the ear...

Brian - Sounds enter the ear canal and travel down to the eardrum, which is like the skin of a drum, and that causes the eardrum to vibrate. In turn, those vibrations are transmitted through three tiny bones in the middle ear called the malleus, the incus and the stapes, and these are the smallest bones in the body.

Georgia - When I was in school we called them the hammer, anvil, and stirrup because of their distinctive shapes.

Brian - And the third of those bones - the stapes…

Georgia - That’s the stirrup.

Brian - … makes contact with an organ called the inner ear, which is also called the cochlea, the part that’s concerned with sound. The cochlea is encased in very rigid bone but there’s a small membrane-covered opening, and the stapes rests on top of that opening, so the sound gets in through that small opening in the bone.

Now the magic starts to happen inside the cochlea; that’s where the analysis of sounds first takes place. The cochlea is filled with fluids and there’s a kind of ribbon that runs along the length of the cochlea that’s called the basilar membrane. The basilar membrane is rather flexible and wide at one end, and narrow and stiff at the other end, and because of those physical properties each place along the membrane is tuned to a different frequency. The end that’s closest to the stapes is called the base of the cochlea, and that responds best to high frequencies; the other end is called the apex, and that responds best to low frequencies. So there’s a kind of analysis of the different frequencies that are present in the sound.

Georgia - So we have the sound banging the drum, the eardrum, travelling along those three tiny bones before being transferred into the cochlea. On most diagrams the cochlea looks like a snail shell, and inside is this basilar membrane, which separates sounds out into their different pitches. But before we even get to the brain, our ear has another trick up its sleeve, one we didn’t know about until recently.

Brian - There’s a very wonderful thing that’s only really been discovered in the last 20 years, and that’s an active biological mechanism that amplifies the vibrations on the basilar membrane. This active mechanism depends on the operation of specialised cells called outer hair cells.

These outer hair cells behave like miniature motors: they feed energy back into the basilar membrane and amplify the response, and they also sharpen up the tuning, so each place is much more narrowly tuned to a specific frequency than would be the case without this so-called active mechanism.

This active mechanism is crucial for letting us hear very soft sounds. The weakest sounds that we can detect produce a vibration of the eardrum only about the diameter of a hydrogen atom. It’s a really tiny vibration that we can detect, and this all depends on the operation of these outer hair cells.

There are still many aspects of these processes that we don’t understand, including this active mechanism in the cochlea. Although we know that it’s there, exactly how it works is still not fully understood. But what we do know is that you can detect its effects relatively easily, and one remarkable thing is an effect called the cochlear echo. When you put a sound into the ear, as a result of the operation of this active mechanism, sounds actually come back out of the ear, and you can measure them in the ear canal.

Georgia - That’s incredible! So it’s not just our mouths making sounds; our ears are doing it too, albeit very quietly?

Brian - That’s right. Our ears are actually generating sound. Another interesting thing is that this active mechanism is partly under the control of the brain. The brain actually sends signals down to the cochlea to control the operation of the active mechanism, so even the mechanical vibrations produced on the basilar membrane are partly influenced by the brain. Twenty years ago, no-one had even thought it possible that the brain could be indirectly controlling what we hear by controlling the operation of the cochlea.

Georgia - So how does this information get transported into our brains?

Brian - Within the cochlea there’s another type of hair cell, called inner hair cells, which are like the microphones of the ear. They detect these vibrations on the basilar membrane and convert them to an electrical signal, and that electrical signal, if it’s strong enough, can lead to what are called nerve spikes or action potentials in the auditory nerve. The more intense the sound is at a given place on the basilar membrane, the more action potentials you get. So information is signalled to the brain as a kind of digital code, in terms of the patterns of action potentials in different neurons and how rapidly those action potentials occur.

08:07 - Decoding the hubbub

Decoding the hubbub

with Dr Jennifer Bizley, University College London

Once a sound has reached our brain, how does our brain work out what to do with all the different pitches? Georgia Mills found out from Jenny Bizley, of the UCL Ear Institute...

Jenny - We have some clues about how the brain does this. We know, for example, that many natural sounds like people’s voices have a pitch associated with them, and sounds with a pitch tend to be harmonic. That means that they have some fundamental frequency, the lowest frequency, which is what we think of as the pitch; for example, the A string on a cello is 220 Hertz, but there’ll also be energy at multiples of that, so at 440, 660, 880, and so on.

Georgia - It helps to imagine it as a ladder, where each rung represents energy at a different frequency. We interpret it as one lovely tone, but really, it’s many tones layered over the top of each other. Our voices do this too.

Jenny - Our brain is aware of that kind of pattern, so it will be able to, essentially, associate sounds, or sound components, that have that harmonic structure and group them together. We know that it does that because if, in the lab, we create a sound that is harmonic, but we mess around with it so that we take one of those particular harmonics and shift it a little bit in frequency, then perceptually you’ll go from hearing a single note to hearing two distinct sound sources.
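The harmonic "ladder" and the mistuned-harmonic experiment can be sketched in a few lines. This is only an illustration of the arithmetic, not a model of what the brain does: it assumes the 220 Hz cello A string from the example, lists its harmonics, and flags any component that sits more than an assumed tolerance away from the nearest whole-number multiple of the fundamental. The function names and the 3% tolerance are hypothetical choices.

```python
def harmonics(f0: float, n: int) -> list[float]:
    """Return the first n harmonics of a fundamental f0: f0, 2*f0, 3*f0, ..."""
    return [f0 * k for k in range(1, n + 1)]


def mistuned_components(components: list[float], f0: float,
                        tolerance: float = 0.03) -> list[float]:
    """Return the components that do not fit the harmonic 'ladder' of f0.

    A component fits if it lies within `tolerance` (a fraction) of the
    nearest whole-number multiple of f0. The 3% default is an illustrative
    assumption, not a measured perceptual threshold.
    """
    outliers = []
    for f in components:
        k = max(1, round(f / f0))  # nearest harmonic number (at least 1)
        expected = k * f0
        if abs(f - expected) / expected > tolerance:
            outliers.append(f)
    return outliers


# The cello A string: 220 Hz fundamental plus energy at 440, 660, 880 Hz...
tone = harmonics(220.0, 4)
print(tone)  # [220.0, 440.0, 660.0, 880.0]

# Shift the third harmonic up by 8%: it no longer fits the ladder and is flagged.
shifted = [220.0, 440.0, 660.0 * 1.08, 880.0]
print(mistuned_components(tone, 220.0))  # []
print(mistuned_components(shifted, 220.0))
```

Comparing each component with the nearest multiple of the fundamental is just one simple way to express "fits the harmonic pattern"; real listeners group sounds using this cue alongside the timing and location cues Jenny goes on to describe.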

There are other cues that your brain can use, so sound components that come from the same source tend to change together in time so that they’ll get louder together and quieter together. They’ll also tend to come from the same place in space. So there are all of these hints that the brain can use to try and make a model of how the sounds that have arrived at the ear have existed in the world beforehand.

Georgia - Do we use any other senses when we’re trying to untangle this mess?

Jenny - We, without really realising it, integrate information across our senses all of the time; this is particularly true for vision and for hearing. There are specific examples you can think of where integrating visual information with what you hear can be helpful to you so, for example, our ability to localise a sound in space is really good. We can tell apart sounds that are about one degree different; that’s sort of the width of your thumb at arm's length. But vision is 20 times better than that so it makes sense that you integrate information about where you see something coming from with where you hear it coming from.

Another example of when it’s really helpful to be able to see what you’re trying to listen to is if you’re listening to a voice in a noisy situation, such as a restaurant or a bar. If you can see a speaker’s mouth movements, then that gives you additional information about what they’re saying. So the mouth movement that you make for, for example, a “fu-” sound is very different from the mouth movement that you make for a “bu-” sound.

But at an even lower level, there’s the problem of how, when you’re faced with a really complicated sound mixture, you separate it out into different sound sources. If you’re looking at a sound source, for example someone’s mouth, you’re getting a rhythmical signal where the mouth gets wider as the voice gets louder, and smaller as the voice gets quieter. Even the way that someone perhaps moves their hands as they’re speaking gives you another sort of rhythmical cue.

We’ve recently learned that that very basic information is enough to help you group together the sound elements that come from a sound source that’s changing at the same time as what you’re looking at, and that allows you to separate that sound source out from a mixture more effectively.

Georgia - You mentioned working out where a sound is coming from, so what do we know about how our brain does this?

Jenny - In the auditory system, we have to rely on the fact that we have two ears, and the brain has to detect incredibly tiny differences in timing and sound level that occur between the two ears. If you have a sound, for example, to your right, then it’s going to hit your right ear slightly sooner than your left ear, and it’s going to be louder in that ear. And your brain is incredibly sensitive to these tiny differences in timing and sound level; we’re talking about fractions of a second here.
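To put "fractions of a second" into numbers, here is a back-of-the-envelope sketch. It uses a deliberately simplified straight-path model (an assumption, not something from the interview) in which the extra distance to the far ear is d·sin θ, with an assumed ear-to-ear distance of 0.2 m and the speed of sound in air taken as 343 m/s. Real heads bend sound around them, so true values differ somewhat, but the order of magnitude holds.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, in air at about 20 degrees C
EAR_SEPARATION = 0.20   # m, an assumed round figure for an adult head


def interaural_time_difference(azimuth_deg: float,
                               d: float = EAR_SEPARATION,
                               c: float = SPEED_OF_SOUND) -> float:
    """Approximate arrival-time difference between the two ears, in seconds.

    Simplified straight-path model: extra distance = d * sin(azimuth),
    where 0 degrees is straight ahead and 90 degrees is directly to one side.
    """
    return d * math.sin(math.radians(azimuth_deg)) / c


for angle in (0, 1, 30, 90):
    itd_us = interaural_time_difference(angle) * 1e6  # seconds -> microseconds
    print(f"{angle:>2} degrees: {itd_us:7.1f} microseconds")
```

Under this model, a sound directly to one side reaches the nearer ear just under 0.6 milliseconds earlier, and a one-degree shift, roughly the localisation acuity Jenny mentions, changes the delay by only about 10 microseconds.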

There’s a third cue that you can use, which is that as the sound is funnelled into your ear it passes the pinna, which is the part of the ear that you see on the side of the head, and it has these complicated folds on it. As the sound comes in, it interacts with those folds in a way that depends on where the sound source is, and the folds effectively filter the sound and give characteristic notches in the frequency spectrum, which tell you where a sound source originated.

What am I going to say next?

with Dr Thomas Cope, University of Cambridge

How do our brains effectively 'cheat' when decoding speech, and what does that have to do with tinnitus? Georgia Mills found out from Cambridge University's Thomas Cope...

Thomas - The important thing to always remember is that your brain is not just representing what’s going on in the outside world; it’s representing an interpretation of what’s coming into you through your senses. So, if I’m talking to you now in a quiet room, you’re predicting as you go along what the next word that I’m going to say is. If I said to you “I’m just popping out to the shop to buy some…”, you would have a list of things that are most likely for me to say next; top of that list might be milk, and then bread, and then there’d be less common words lower down. When you then get information from your senses, you compare that information against your prediction to see: was I right or wrong? Do I need to do a lot of work in processing this auditory information to work out what it was, or did it start like “milk”, so it’s probably milk and I can just move on to the next thing, saving energy in my neural architecture?

Georgia - I see. So I think you’re going to say one word, my brain prepares itself for that word, and if it’s the same word, job done, move on; and if it’s not, then we have to take a step back and think a bit harder?

Thomas - Yeah, that’s exactly right. Your auditory cortex, which is the first bit of the brain that auditory information goes into, sets up a prediction and only sends forward to other parts of the brain the errors from that prediction. So, if there are no errors because the prediction was correct, then it has nothing to send further forward and nothing else has to happen. If what comes in is a different word, or you’re not sure what it was, then it has to work harder, sending information higher up the processing stream to other parts of the brain to work out: okay, he didn’t say milk, he said eggs. What was it, and how does that change what I’m going to predict next?
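The "only send the errors forward" idea can be illustrated with a toy sketch. It is not a model of auditory cortex: it simply assumes the listener's expectations are a ranked list of words (the ranking reuses the milk/bread/eggs example and is not measured data), passes nothing on when the top prediction is confirmed, and otherwise produces an error message for later stages to deal with. The function name and the fields of the error message are hypothetical.

```python
from typing import Optional


def process_word(predictions: list[str], heard: str) -> Optional[dict]:
    """Toy version of 'only send forward errors from the prediction'.

    `predictions` is a ranked list of expected words, most likely first.
    Returns None when the top prediction was right (nothing to pass on);
    otherwise returns an error message for 'higher' stages to work on.
    """
    expected = predictions[0]
    if heard == expected:
        return None  # prediction confirmed: no error signal, move on
    return {
        "expected": expected,
        "heard": heard,
        # Where the heard word sat in the ranked list (None if not predicted at all)
        "index_in_predictions": predictions.index(heard) if heard in predictions else None,
    }


# "I'm just popping out to the shop to buy some..."
shopping = ["milk", "bread", "eggs"]  # illustrative ranking, not measured data
print(process_word(shopping, "milk"))  # None
print(process_word(shopping, "eggs"))  # {'expected': 'milk', 'heard': 'eggs', 'index_in_predictions': 2}
```

The point of the sketch is the asymmetry Thomas describes: a confirmed prediction costs nothing further, while a mismatch generates a signal that the rest of the system has to process.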

Georgia - How did we find out this is what our brains were doing?

Thomas - The grand idea is called predictive coding. And there’s evidence for predictive coding, not just in speech. This is a process that underlies everything that the brain does. The brain is really a predictive engine, and we know that errors in predictive coding underlie a lot of different diseases. There’s evidence that in schizophrenia predictions go wrong, and that can lead to abnormal perceptions like hallucinations.

In tinnitus, predictions go wrong. You have a lack of input from one part of the ear because of damage there, and that sets up a little bit of noise, and the brain starts to predict that maybe the noise is meaningful and maybe I should pay attention to this noise and, over time, that’s perceived as tinnitus. Even if the noise goes away, the prediction that there will be tinnitus remains. And there’s nothing to counteract that, because that part of the ear has died, so you perceive tinnitus as an interaction of strong predictions about hearing something and nothing meaningful coming in.

Georgia - It’s interesting you should mention tinnitus because that’s a very common condition as I understand, so we think that this is caused by our predictive system going into overdrive?

Thomas - Yeah; that’s the emerging view. About half of all adults, if they’re in a quiet room and really concentrating on listening to what’s coming into their ears, will hear some tinnitus, a quiet buzzing or ringing, and that’s completely normal. Tinnitus becomes troublesome when that quiet buzzing or ringing gains an abnormal perceptual salience, so people start to attend to that buzzing or ringing. They start to expect it, and any information that does come in is interpreted very precisely, so that prediction of buzzing or ringing is verified, and the tinnitus essentially ramps up in how irritating it is, independent of how loud the tinnitus actually is. Predictive mechanisms, together with some problem in the ear, are what cause tinnitus.

Georgia - When Thomas says the buzzing or ringing can ramp up, it can get really, really bad. It can stop people from concentrating or from sleeping and potentially ruin lives. This is what it can sound like. That’s from the British Tinnitus Association. So does knowing about this mechanism help us treat tinnitus?

Thomas - Well, yeah. This is one of the reasons that things like cognitive behavioural therapy for tinnitus can be quite effective: it’s not just a problem of what’s going on in the ear causing the perception. It’s a problem of how the brain is working subconsciously to eke out every bit of information it can get from what’s essentially noise, ramping up the intensity of this perception. We know that therapies that reduce the loudness of tinnitus don’t necessarily reduce the distress of tinnitus, and both of these things need to be tackled.

18:10 - Sounds sometimes behave so strangely...

Sounds sometimes behave so strangely...

with Professor Diana Deutsch, University of California San Diego

How can sounds baffle our brains? Georgia Mills spoke to the queen of auditory illusions, Professor Diana Deutsch of the University of California San Diego...

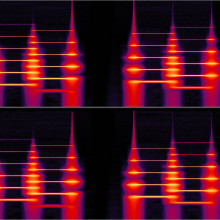

Diana - One earphone was producing high tone, low tone, high tone, low tone, while the other earphone was producing low tone, high tone, low tone, high tone. I put on the earphones and generated the sounds and listened to them, and I was absolutely amazed at what I heard because it wasn’t at all what I had entered into the computer. What I was hearing was a single high tone in my right ear alternating with a single low tone in my left ear. So then I switched the headphones around and the same thing happened; I kept on hearing the high tone in the right ear alternating with the low tone in the left ear. At that point, I thought well, either I’m crazy or I’ve gone into a different universe or something.

I went into the corridor and brought in a few people and they all heard what I was hearing, except for one person, who happened to be left-handed, who heard the opposite: the high tone in the left ear alternating with the low tone in the right ear. And then I realised that, in fact, this must be a new illusion that would be of importance to the understanding of how we hear patterns of tones.

Georgia - What did that reveal to us about the brain?

Diana - First of all, as a group, right-handers and left-handers differ in what they tend to hear, and this is just what you would expect from the neurological literature on patterns of cerebral dominance in right-handers and left-handers. Most right-handers have the left hemisphere dominant for speech, whereas left-handers can go either way. It had been thought that cerebral dominance was simply a function of speech, but these were musical tones, and so it showed that cerebral dominance was in fact more complicated, and more general, than had been thought.

Georgia - Now this one only works if you have headphones on, but here’s one that works on the radio. It will help if there’s someone else in the room with you. I’ve got fellow producer Izzie Clarke with me to help me out. So here is a set of paired tones; all we need to do is tell whether they’re going up or down in pitch.

Number 1 - so that’s clearly going up.

Izzie - No, that’s going down.

Georgia - Okay, here’s the next one. That was going down.

Izzie - Yeah.

Georgia - Okay, we agree on that one.

Izzie - That was going down.

Georgia - Okay, good… Up.

Izzie - No! That’s still going down.

Georgia - That was definitely going up.

Izzie - No, they’re all going down.

Georgia - What do you know about music?

Izzie - Loads.

Georgia - Well, according to Diana, we’re all wrong, or we’re all right. There is no real answer, it’s ambiguous, kind of like the audio equivalent of that infamous blue/gold dress. Out of interest, which colour did you see the dress?

Izzie - Oh, it was white and gold.

Georgia - It was blue and black. Okay. We clearly have very different brains.

Izzie and I can rest assured: even trained musicians disagree on this. And Diana has discovered that your brain interprets it according to your life experience.

Diana - It varies depending on the language or dialect to which people have been exposed, particularly in childhood. In one experiment, I found that people from the South of England tended to hear this pattern of tones in different ways from people who had grown up in California. Now, the pitch range of speech in the South of England is higher on the whole than the pitch range of speech in California. In another experiment, I looked to see if this correlated with the pitch range of the speaking voice to which you’ve been exposed and, in point of fact, that is the case.

Georgia - Why do you think illusions make such a powerful tool for studying hearing?

Diana - I think that you can learn a great deal about a system when it breaks down. For example, if you have a car that’s broken down, you can find out a lot about the way the car works by examining it and examining the way it’s broken down, and that would be true of any piece of equipment. I think the same is true of perception, both visual and auditory: you can learn a great deal about it by causing it to fail. So I think that illusions perhaps show more about hearing than anything else. When you hear something correctly, what can you say about it? Well, okay, you’re hearing it correctly. But when something goes wrong, you can start asking what it is that’s gone wrong, and then you can delve into the system and find out more about it that way.

Georgia - So what about the first illusion we heard, ‘sometimes behave so strangely’? We’re not exactly sure why this happens, but Diana says it provides a clue as to how our brain processes song, and that there’s a central director somewhere deciding whether to send sound information to be processed as speech or as music.

Diana - My favourite view of what’s going on, in part, is that the tonal structure, the structure of pitches, is rather similar to that of a phrase in the “Westminster Chimes” - boing boing boing boing, so ‘sometimes behave so strangely.’ And the rhythm is very similar to that of “Rudolph the Red-Nosed Reindeer” - ‘sometimes behave so strangely.’ So this sort of central director decides: okay, this is song, and sends the information to be analysed that way.

26:05 - A world of silhouettes: Seeing with sound

A world of silhouettes: Seeing with sound

with J Steele-Louchert, The World Access for the Blind & Lore Thaller, Durham University

How do some people use sound to see? Georgia Mills spoke to Lore Thaller and J Steele-Louchert about how human echolocation works...

J - I’m J Steele-Louchart. I’m an orientation and mobility instructor with World Access for the Blind, and I specialise in perceptual mobility techniques, teaching blind people to see through sound and using their cane.

I went blind when I was 12 years old. I had a tiny bit of vision left, but not really enough to be functional. I knew about echolocation and other kinds of perceptual techniques from a Discovery Channel special that had been aired in the United States, so I just kind of grew up with it in the back of my mind. And then when I went blind it seemed obvious; I remembered the tongue click, and I remembered a couple of main features of it, and I thought well, I don’t have my eyes but I do have my ears, and both give you distance perception.

So I started clicking around and experimenting and teaching myself what different distances and textures and things sounded like. Then, when I was 14, about a year and a half later, I went to a conference of this person named Daniel Kish. I started working with Daniel; he started teaching me and, eventually, I became one of his instructors.

Georgia - Could you describe to me what it’s like?

J - It’s like freedom. When I click, I make a really small tongue click, so I might say ‘click, click.’ And what happens is the sound goes out in a cone shape, it tries to wrap around objects and it tries to go through objects, and then it flashes back to me. As I turn my head and make that noise (I make it every few seconds at most, usually not that often), it gives me all these different pictures of the physical structures around me. I might describe it as a world made of mannequins or silhouettes, and I can see all the physical structures. Everything from fences and hedges all the way to buildings, cars, trees, lamp posts, and they all have a very specific dimensionality, texture, density. It is really a world made up of sound.

So, effectively, I’m a low vision person moving through the world even though I’m totally blind. I’ve been totally blind for about five years now and I see with my brain. I see everything that’s around me even though I’m not receiving the information visually.

Georgia - Oh, wow! So there is an image appearing in your head. When did this first happen and did it go straight from nothing to this vision, or did you think this sound means this and it gradually changed over time?

J - That’s a very astute question. It did gradually change over time. When I first started I got sound but not images, and it took a while for it to become true, three-dimensional images. I remember the first time I ever got a true image: I was on a college campus, walking past a building, and I clicked, and I saw the building. I didn’t hear it, I saw it. My brain filled in the light because I grew up with light, and so my brain filled in a visual image in my head, but I very clearly heard all the angles. The way that came about, for me in particular, although it’s a little bit different for everyone, was a long period of building what I call a “sound catalogue”, where you do exactly what you described. You work out which sounds go with which distances, hardnesses and densities, and you build this catalogue of sound where you can assign each sound to a variable, and you build your pictures that way. But that turns into an image catalogue through the process of neuroplasticity: the brain actually begins processing these sounds through the visual cortex, and your integrated visual system creates that visual image even though it’s being processed originally through the ears.

Georgia - What was that like, the first time you saw this building?

J - Mind-blowing! Even though I’d grown up with the idea and knew it was supposed to happen one day. I knew what was going to happen; I’d seen the research. And then it happened and it was - I have no words. It was seeing; it was seeing as a blind person.

Georgia - How does this work? How do our ears enable us to effectively ‘see’? That’s exactly what Lore Thaller, with J’s help, is trying to find out.

Lore - What we found is that when we investigate what’s happening in the brains of people who are blind and who have used echolocation for a while, when they listen to these faint echo sounds, the parts of the brain that typically process input through the eyes, so light, are very active, so they do seem to pick up on these echoes. At the same time, the part of the brain that processes sound, so the hearing part, is active as well.

What we did then, in a second step, was a contrast. Let’s say a person listens to these sorts of echolocation sounds and we’re measuring what’s happening in the brain; then let’s compare that with what’s happening in the brain when they listen to sounds that do not contain these echoes. It’s still the same sound, but the echoes are missing. When we took the difference in brain activity between these two conditions, the part that really came out as still being very responsive to the echoes was this visual part of the brain, and to us that was really surprising. It raises the question: are they actually seeing with sound? What’s happening? So in the research we’ve done since then, we’re trying to tease apart what is actually going on in these neural structures, and what sort of information they respond to.

Georgia - Right. Is there some sort of rewiring of the brain going on here then?

Lore - Yes. People who are blind have brains that are organised in a different way compared with the brains of people who are sighted. And that’s just the brain being plastic and adapting to whatever it has to deal with day to day. Even the brains of sighted people reorganise if you’re learning a new language, or a new skill like playing tennis or juggling; your brain will reorganise itself. So this change in the activity of the brain that we’ve observed in these echolocators is very consistent with knowing that the brain is a plastic organ that reorganises itself.

Georgia - What’s next? What are you looking into at the moment? What do you want to find out?

Lore - One thing we’re very keen on investigating is how this learning actually takes place and how it changes the brain. So far, in most of our work we have taken different groups of people; for example, we’ve worked with people who are sighted and newly learnt to echolocate, with people who are blind and newly learnt to echolocate, and with people who are blind and who are echolocation experts. And we find these differences in terms of brain activity, in terms of their behaviour, and so on.

One thing we are curious about is this: let’s say you have a person who learns to echolocate, and you follow them over a period of time; how does their brain change? Will this learning actually lead to the changes which we have observed in the brains of expert echolocators?

Georgia - So while we wait to understand more about how our brains can do this, more and more people are taking up the skill. It can be taught quickly, according to J, and is completely life-changing.

J - The word I always come back to is ‘freedom’ when people ask me that question. I mean, what is it to be able to see with your brain? What is it to be able to learn a new area very quickly and efficiently with great accuracy, even from a distance, and to be able to recreate the world without your eyeballs? It’s freedom. It means that I can go anywhere I want and do anything I want. And many blind people can say that, but I can do it very quickly and very efficiently with a great deal of autonomy.

Georgia - But while this may sound, to me at least, like a superpower, J was keen to emphasise this is a skill anyone can learn.

J - It’s not special people out there who are just doing this in very small numbers. There are greater and greater numbers of people who are proving that regardless of your background, it seems very learnable and very teachable as long as you have a structured way in which to do it. I just want people to know that it’s something that they can attain.

35:30 - What causes hearing loss?

What causes hearing loss?

with Bella Bathurst, Author of 'Sound', & Professor Brian Moore, University of Cambridge

What causes hearing loss and what is it like? Georgia Mills heard from Bella Bathurst, author of the book 'Sound', and Professor Brian Moore of Cambridge University.

Brian - There are many causes of hearing loss. There’s likely to be a genetic component, so different people vary in their susceptibility to getting hearing loss. And quite a large number of genes have now been identified that affect hearing and, if you have a problem in those genes, you’re almost bound to get hearing loss.

But there’s also strong evidence that noise can damage hearing: intense sounds generally, which used to be produced mainly by factories. Now even leisure noise, such as very loud concerts and discos, can damage your hearing, and working in the military can be very dangerous as well; many people are still being deafened by working in the military.

There are also many drugs that can affect hearing: antibiotics ending in “mycin”, such as kanamycin and neomycin, will poison the ear if they’re given in large enough doses. And many drugs used to treat cancer, particularly ones with platinum in them, can damage these hair cells in the inner ear.

Georgia - Now I’m just worried about being an audio producer; it’s probably not a great experience for my ears. Do we know why loud noise has this effect, and could it be one very, very loud bang, or is it over time?

Brian - Again, there are probably different mechanisms. If you have an exposure to a brief, really intense sound like a gunshot or an explosion, that can actually produce structural damage inside the cochlea; it basically rips up the structures, which is very nasty. But for more moderate, long-term exposures, like working in a noisy factory or going to a loud rock concert, there seem to be metabolic processes that take place in the cochlea that can actually poison the structures inside it. It’s a bit like when a marathon runner overdoes it and the metabolites that build up in their muscles poison the muscles. And this active mechanism that I talked about requires quite a high metabolic turnover, and if you work it really hard because of intense sounds coming in, the chemicals that build up in the cochlea can poison the hair cells, and then they die off and, unfortunately, once they die they never come back.

Georgia - So if I were to run for, well, judging by me, about five minutes, I would get a stitch. It’s like the audio equivalent of a stitch, and the bad chemicals just build up?

Brian - That’s right. And, of course, in our muscles we have receptors that tell us when things are going wrong; it starts to hurt and become painful, and then you stop doing it. But it’s not clear yet whether the ear actually has pain receptors, so we may do all this damage to our ears without actually feeling anything bad going on. That’s a real problem, I think.

Georgia - So please do adjust your sets and turn down the volume. But it is being predicted that with our current music behaviours, hearing loss is going to continue to rise.

Bella - People are always being told to turn down their iPods or whatever it is but, actually, there is a bit of an epidemic of hearing loss coming for the young as well. You can completely see why, because most music, or a lot of rock music, is not supposed to be heard quietly; it’s designed to be loud. With all that I know about hearing, I still want to stand next to the amps, because that’s the only way that you get that thud in your heart, in your bones.

Georgia - Bella Bathurst is the author of the book “Sound.” She knows exactly what it is like to go deaf: when she turned 27, she started to lose her hearing, losing 50% of it.

Bella - Sounds that had previously been audible, like station announcements, or people’s voices, or laughter, or whatever it was, just became inaudible. The sound of a mobile in another room had previously been easy to hear and then it became difficult to hear, so it was a sort of gradual muffling. But one of the odd things about hearing loss is that it makes you more aware of acoustics and your sound environment, not less.

Georgia - What do you mean by that?

Bella - I just became completely obsessed with rooms and spaces. There are certain situations which are much more difficult, no matter what kind of hearing aids you’ve got or what kind of amplification. Outside, where sound is very echoey and gets blown away very easily, it’s much more difficult than in a nice, muffled, low-ceilinged room with lots of carpet and lots of fabrics.

Georgia - The kind of rooms I look to record in?

Bella - Absolutely. I was looking at the studio here and thinking: ideal acoustic environment.

Georgia - When you lost 50% of your hearing, did it stay at that level?

Bella - No, it kept on dropping. By about 2009, which is when I was re-diagnosed, it was down to about 30% in both ears, probably slightly worse in the left ear.

Georgia - How did that change your life?

Bella - I’ve always been a writer, and the bit of the job that I always enjoyed most is interviewing. I worried most of all that it was making me unprofessional, because when I played back those interviews, I would hear how many times I hadn’t heard, or had misheard, or had come out with a completely wrong connection or something, and I really hated that. I thought that was terrible, so I felt very guilty and rather ashamed, and I also felt horrified at the idea that I might be blanking people without meaning to.

I also actually resented going deaf aged 27. I disliked the stigma that deafness is to do with age and, I’m afraid to say, stupidity. People see deafness as something that’s connected to slowness, and I’ve fought those things quite hard. And then I just got exhausted, and then I just got depressed, which I think is common to a lot of people with hearing loss. What deafness wants to do is to push you away from your fellow human beings and into a state of isolation.

One of the things that I realised when I started researching the book, which I found completely fascinating, was that without prompting, without me looking for it, every single person who I spoke to who had hearing loss said: I find it completely exhausting. I sleep like I’ve been clubbed. I need 10 hours of sleep a night or whatever. People do find it completely knackering and again, people tend to sort of blame themselves for finding it knackering.

Georgia - Hearing aids are usually the first port of call for someone who is losing their hearing. These essentially turn up the volume: they pick up sounds through a microphone, boost them electronically, and then rebroadcast them into the ear through a tiny speaker. They can make a big difference, but in some situations, like outside or in a busy room, or for some types of deafness, they aren’t very effective. But there’s lots of work going into improving them. Here’s Brian again…

Brian - One thing that people are working on, which is still in the future, is picking up electrical signals from the person’s brain to decide who they are trying to listen to, and then steering the beam in that direction. That’s still some way ahead, but there is a large research group working on it right now.

Georgia - That's incredible! So really smart hearing aids - you can decide you’re finding this person really boring, just shut them off and turn up the other...?

Brian - Exactly, yes. What we do have already are hearing aids that you can control from smartphones: you can use the smartphone to control the operation of the hearing aid to some extent and set it up for different situations. The smartphones can also do what’s called geotagging, where they remember how you set up the hearing aid in a specific situation, and when you next go back to that same place, it says “oh, this is the same location as before” and automatically sets up the hearing aid with the right settings for that situation.

Georgia - That’s incredible! So I guess compared to my glasses that are just pieces of glass, they have to be very active and clever machines?

Brian - Yes. Nowadays, hearing aids are miniature computers, and they pack a lot of computing power into a very small space, all operating off 1.1 volts.

Georgia - Wow! Because I know when I’m editing sound and changing the pitch, my software takes about five minutes to do it. A hearing aid has to do it all in real time?

Brian - Yes. This has to all be done in real time. And it’s got so complicated that I think, even within one manufacturer of hearing aids, there’s no single person who understands how everything in the hearing aid works. Each person does one block in the processing and then somebody tries to put it all together, but it requires a big team to get all these things working in a coordinated way.

Georgia - Ah! So optometrists have it easy?

Brian - Yes, they do indeed, yeah.

45:32 - Hearing: genetics and stem cells

Hearing: genetics and stem cells

with Jeff Holt, Harvard Medical School

Hearing loss will affect 1 in 6 of us. Could some cutting-edge therapies help? Georgia Mills heard from Harvard Medical School Professor Jeff Holt.

Jeff - Some of the techniques we’re working on in my lab, and others are also interested in this, are gene therapies. If we can identify a gene that has a mutation that leads to hearing loss, the goal would be to take the correct DNA without the mutation, a healthy gene in other words, and reintroduce it into the sensory cells of the inner ear, thereby restoring function.

Georgia - How would you go about doing that?

Jeff - The strategy we’ve been using is to engineer a viral vector. We take a virus that’s known as adeno-associated virus; this is present in most human populations. It doesn’t cause disease, but we can engineer it, remove the viral genes and then insert any gene of interest, any DNA sequence we’d like to put into the ear. Viruses are particularly good at carrying DNA sequences into cells, so the virus will do its job and carry the DNA that we’ve inserted into the sensory cells of the ear, and then the ear knows what to do with that gene to make the correct protein and restore function.

Georgia - Just as an example: if the DNA didn’t know how to code for the hair cells properly, you would get a virus to sneak in the right segment of DNA; that would then go into where it’s meant to be and start being used by the cell to start building a hair cell properly?

Jeff - Exactly.

Georgia - That sounds brilliant, so does it work?

Jeff - So far, we’ve tested it in mouse models and, remarkably, it does work. We can take a deaf mouse and allow it to recover sensitivity to sounds as soft as a whisper.

Georgia - Can this intervention happen at any age of the mouse or does it have to happen while it’s still developing?

Jeff - That really depends on the specific gene we’re targeting. Some genes don’t turn on until late in development, or only at mature stages; some are critical at very early stages of development, so there may be a therapeutic window of opportunity, and we would want to intervene before that window closes. It really has to be dissected out on a gene-by-gene basis. In some cases, we’ve seen that the gene has to be put back in at the early stages, but in other cases we think we can do it later. It has not been tested in humans yet, but that’s something that we’re working towards, and there’s growing interest in trying to move this to the clinic.

Georgia - What barriers do you need to cross before we can give this a go?

Jeff - We need to identify the suitable patients to target for a clinical trial. We also need to confirm that there’s enough safety data; we want to make sure we’re not making the situation any worse or making anybody sick with side effects and so forth. Once we’ve got those two bits confirmed then we need to find the funding to be able to do this. But, I think if we pull those three together, we should be able to begin a clinical trial for gene therapy in humans for hearing loss.

Georgia - Oh right! So, as far as we know, there are no massive scientific barriers, at least, that would stop us from attempting this?

Jeff - That’s right. I think the science is showing that it should be possible.

Georgia - That’s the genetic side of things. What about using stem cells?

Jeff - There are a number of approaches for using stem cells to try to regenerate the inner ear. One current approach is to take a stem cell in the lab, in a dish, and grow it into an inner ear organoid. An organoid is something that resembles the inner ear, containing the sensory hair cells as well as the neurons that connect the hair cells, the sensory cells, to the brain. Those might be used to test or screen drugs, but eventually they could possibly be used in a transplantation situation, where you could transplant a whole organoid into a deaf ear and maybe recover function. I think that’s a little further off.

Some of the other approaches that are being explored at the moment are to see if we can reawaken the native “stem cellness” of cells that are already present in the ear and cause them to differentiate and become new sensory hair cells.

Georgia - How hard is it to build a mini ear in a dish then? Have you managed it yet?

Jeff - Yes, we have managed it. We start by using stem cells that are pluripotent, meaning they could become any number of different cell types. We provide a couple of the correct factors that cause them to head towards an inner ear cell type, and then we leave them alone to let them follow their own path. Just giving them a little bit of a push at the beginning leads them down the right path, and eventually, after 30 to 60 days, we end up with something that looks like an inner ear.

Georgia - And then you can use this to test various drugs on to see how they would work in a human ear without risking a human’s ear?

Jeff - Exactly. It’s a great model system to be able to screen pharmaceutical compounds, as well as some of the gene therapy compounds I mentioned earlier.

Georgia - In terms of getting stem cells into an ear, we’re far away from this, but what are the challenges involved in getting something like this to work?

Jeff - There are still a few challenges. We need to be able to make the correct cell type, and if we then introduce that cell into the ear, it needs to be integrated into a complex organ so that it’s oriented properly, it’s stimulated properly and responds to sound, and it’s connected properly to the neurons that transmit information to the brain.

Georgia - And are there any risks with a technique like this?

Jeff - Well, with stem cells, this hasn’t really been done in humans too much yet. There are always risks that the stem cells could differentiate into some unwanted cell type.

Georgia - Would this just be a cell type you don’t want, or could it become cancerous as well?

Jeff - That’s always a possibility. Luckily, there are no native cancers in the inner ear, but we would want to be cautious about putting some new cell type in the ear that could differentiate into something pathogenic.

Georgia - So perhaps gene therapy could reduce genetic deafness down the line, and if we can make sure the stem cells turn into the right cells and don’t become cancerous, they could one day help people who do wish to restore their hearing.

Bella’s hearing was restored through surgery, after she was re-diagnosed with what turned out to be a curable condition. She described her first experience of hearing music again.

Bella - When I’d had those operations, I did have this extraordinary experience of listening to music for the first time without any artificial amplification and it did absolutely blow my mind. It was extraordinary because it was about six weeks after the second operation and a friend of mine had got tickets - a rather cultured friend of mine had got tickets to go and see the Berlin Philharmonic playing Schubert and Haydn, neither of whom I really knew anything about.

Simon Rattle walked to the podium and raised his baton, and then it was just like a completely, sort of, whole-body experience. It was like the sound didn’t just enter my ears; it was like standing under a waterfall. It was a kind of complete physical experience. It was like it was resetting my cells or something; it was absolutely extraordinary. It just poured through me, and it was like a sort of fuse box coming back on again. It was properly mind-blowing.

53:50 - Sensor of the Week: The mighty moth

Sensor of the Week: The mighty moth

with James Windmill, University of Strathclyde

Why does the moth get the accolade of sensor of the week? James Windmill from the University of Strathclyde presents the case to Georgia Mills.

James - Everyone has seen moths fluttering around a streetlight. But, did you know that the commonplace moth has one of the most sophisticated ears in the world?

Georgia - Moths need to have good hearing, thanks to their predators – bats, who find them by using high pitched squeaks. If a moth can hear these squeaks it knows when it’s about to get got!

James - To do this, they have what appears to be the simplest possible ear: an eardrum with only a couple of sensory cells connected to it.

As a mechanical sensor system, this would just be tuned to a fixed frequency range. Unfortunately for the moth, then, some bats change the frequency of their sonar call as they approach their insect prey in order to get a better signal.

Boooeeeep!

However, some moths’ ears can change their frequency tuning when they hear ultrasound, pre-empting the bats’ frequency change, a feat no other insect ear can do.

Georgia - Moths, then, can effectively tune in to detect the bats’ squeaks. But some other moths don’t need to tune their hearing.

James - Other moth species have evolved incredibly wideband ears, enjoying the highest-frequency hearing of any animal on the planet. This allows them not only to hear any bat, but also to pick out the tiny ultrasonic chirps that their own species make to attract mates.

Georgia - To put that into context, we humans can hear up to 20,000 hertz, mice can hear up to 70,000 hertz, and the greater wax moth can hear up to 300,000 hertz. This was only discovered recently by James and co., because previous researchers hadn’t been able to find an upper limit. And that’s not all.

James - Very recently, it was discovered that one species of moth that makes its own ultrasonic chirps has ears that can each, on their own, tell the direction that ultrasound is coming from. All other animals use both ears together to get directional information, making this moth species the only animal known in the world where a single ear gives it sound direction.
