Simulation Science: Living in The Matrix?

This week, could we be living in The Matrix? We're talking about the power of computer simulations, and we’re asking are we all living in one! Plus, in the news - making diabetes treatment smarter; diagnosis by an electronic doctor - would you be comfortable with that? And the world’s first robot that picks lettuces...

In this episode

00:48 - New device for diabetes

New device for diabetes

with Mark Evans

People with diabetes cannot make enough of the hormone insulin, which controls blood sugar levels. In type 1 diabetes, the body cannot make any insulin at all, and instead the hormone has to be injected through the skin in varying amounts tailored to mealtimes and what someone is eating. Now scientists in the US have developed a sugar-sensitive molecule that works like an insulin “sponge”, dispensing insulin automatically into the bloodstream whenever blood sugar levels climb. Mark Evans, who’s a diabetes specialist at Addenbrooke’s hospital in Cambridge but wasn’t himself involved in the research, spoke to Chris Smith...

Mark - So this is one of a group of approaches which people refer to as smart insulins. They've taken standard insulin that we already use to treat diabetes and they've combined it with a polymer, so a large molecule a bit like a ball of wool which soaks up the insulin and then can release it.

The particular polymer they've chosen is boronic acid which binds and responds to changes in glucose and sugar levels. So by tinkering about with this they've rather neatly been able to come up with a formulation which means that the polymer is glucose responsive. So in other words this ball of wool is able to react to sugar, change its charge, and release more insulin exactly when it's needed. In other words, when the local glucose levels are higher. And equally importantly, it's able to release less insulin when glucose levels are lower.

Chris - At the moment, a person who is diabetic would need to tailor how much insulin they inject in order to match what they anticipate their meal is going to deliver, or their activity. So this would release them from some of that, wouldn't it? It would mean that the molecule’s doing the work of calculating how much insulin to release, rather than them.

Mark - Absolutely it'll certainly help. The life of somebody with diabetes, particularly let's say with type 1 diabetes, is challenging and they spend their whole time chasing their tail doing lots of blood glucose testing and trying to catch up with ups and downs. And the hope is that something like this will at least help to let's say shave off some of the ups and fill in some of the valleys in daily blood glucose levels.

Chris - What's the evidence this technique actually works?

Mark - So they've tested their system in mice and in pigs with type 1 diabetes under conditions of artificial glucose load. So essentially giving a slug of glucose and delivering either this insulin or regular insulin. They've also done a slightly longer term study in mice, over a couple of weeks or so of twice daily injections, and it does seem to work, at least better than the control insulin that they gave under the particular experimental conditions they tested.

Chris - How is this new smart insulin actually administered? Is it injected in the same way that traditional insulin that a diabetic is familiar with is given?

Mark - Yes. So they delivered this by injection in these studies. At the moment insulin has to be delivered across the skin of the body in some way shape or form, whether that's by injection or increasingly we've got people treated with insulin pumps which are devices which pump insulin continuously under the skin.

So this new approach would probably substitute in for the existing insulins but it would be delivered in the same way so by injection or infusion under the skin.

Chris - You mentioned that it's the glucose going into this polymer which then causes it to release or squirt out the insulin, so you match how much insulin you produce to how much glucose is there. But could any other molecules accidentally do that? So you could unfortunately trigger a big surge of insulin when you don't need it because something has tripped the molecule into releasing it inappropriately?

Mark - Absolutely, that's a great question and that's one of the questions that arises out of this - how specific is that polymer for glucose as opposed to other non glucose sugars things like fructose and things which occur naturally for example in fruit. So this is all part of the work that they're going to have to do to really try to nail this before they're able to move into human studies.

Chris - And say they can pull it off, what are the implications for a person with diabetes? Because we can manage diabetes pretty well in this day and age, can't we? You can use pumps, you can use injections to keep people well.

Mark - So the way in which I, as a clinician, would see this being used is as an alternative to the insulin that people are currently using, but actually used in the same way. And I think what it will do is hopefully help smooth out some of the ups and downs in daily blood glucose levels.

Chris - And that will translate into a health benefit?

Mark - Absolutely. If people get less variable and more predictable blood glucose levels then, one, we think that the variability in glucose itself may be important in terms of risk of complications but, two, if people have got less variability they may be more confident to drive blood glucose levels lower, which reduces long term risk of complications.

06:32 - Electronic doctors and your health

Electronic doctors and your health

with Peter Cowley, Angel investor; Ari Ercole, Cambridge University

Around the world, more people are living into old age, which is putting increasing pressure on health services, and patients are struggling to get appointments. In poorer countries, people might have to walk 20 miles just to get to a clinic. The answer, we’re increasingly being told, including by Matt Hancock the UK’s Health Minister, lies in technology, including “electronic doctors” that use AI - artificial intelligence - to make diagnoses. So is this a realistic prospect? With us to discuss this are tech entrepreneur Peter Cowley and intensive care consultant and specialist in medical data science at Cambridge University, Ari Ercole. First up, Chris asked Peter, how do these "electronic doctors" work?

Chris - What’s actually behind these A.I. systems, how do they work?

Peter - Yes. Okay. So A.I., Artificial Intelligence, should not be confused with the intelligence that human beings have. The letters A.I. have been rather taken by the tech community and overused, something like 60 percent of all A.I. startups apparently in the UK are not using A.I. at all, they're using algorithms still. So what should be used is machine learning and deep learning and it’s all about taking data and deriving outputs from that. And a couple of examples certainly in the medical field: these chat bots which you can start to use now in the UK and other countries, where the computer system will be asking questions - not voiced at the moment, though may be when the smart speakers come online - but they’ll be asking questions with text to the point where it's trying to derive what the problem is then pass you on and signpost you back to a human GP or whatever and learning from that. That would be within a primary care setting and then in secondary care using machine learning of images, whether that's moles growing on your skin or some sort of scan, perhaps of the lung, and using that data from the past, data from medical records and the data it's learning from communicating interactively, to give an output of some form.

Chris - But Ari, bringing you in, this is all about data. Do we have enough data in order to teach these things so they’re intelligent from the get go?

Ari - I think this is really the time for Data Science in Medicine. We've always generated a huge amount of data from health care, from looking after patients, but in the past this has been unstructured, it's been on paper notes and actually from a clinician's point of view a lot of it was just sort of lost and you just wouldn't have access to it. Increasingly now the data is being represented in electronic health records and we're going to see those spreading over all of health care, and actually now I think we have the reverse problem: we have too much data, which even the clinicians can't really appreciate and know what to do with properly.

Chris - Will this bear fruit though? Assuming that it plays out the way that Peter's outlining, are we actually going to see tractable, tangible medical benefit from systems like this or is this just hot air?

Ari - No, I think it will, but I think we need to be quite careful about exactly how we apply these sorts of technologies. So it may well be that in the future that we can replace doctors with computers, but we'll have to ask ourselves if that's really playing to the strengths of the technology. So computers are very good in that they never get sick, they don't get bored, they don't need lunch breaks and they can just do things repetitively in the same way every single time unlike human beings, but what human beings are very good at is dealing with uncertainty and being flexible about things. So it seems to me that we should be applying these technologies to do the drudgery, take away that work from clinicians and actually free them to make the best informed decisions, the best use of the data, make the data as salient as possible, but actually do the human part of the clinical practice.

Chris - Peter, most people don't want to deal with a machine though, they actually relish the human contact that comes with, say, a GP appointment.

Peter - That's absolutely true, when you've got that ability to do that, but bear in mind that a huge amount of the world has not got that access. If you take the Global South, the developing world, I don't know what the number of primary care GPs per head is but I should think it's 100 times fewer and they may be a very long distance away, so don't just assume that we're sitting in this sort of nice - we're all sitting here in Cambridge in this nice bubble and that it's not possible - but if you take the developed world: yes, many people would want to be in front of a human being when they're discussing something. But you know I'm obviously a bit older, as you can tell, the millennials are more and more wanting to be able to communicate in a way that's quick, convenient so you don't have to travel, you don’t have to wait around, you don’t have to book something when you can't do it, and get some sort of diagnosis or beginnings of a diagnosis of what's going wrong. These systems will not send you a prescription, yet, for a drug, they will refer you on if necessary, or more likely say “don't worry about it - don't be so cyberchondriac”, as they say.

Chris - Comfortable with that Ari? Would you be comfortable as an active practising doctor to have patients being seen by these sorts of systems? Is this safe?

Ari - I think at the moment we're probably not quite at that point, in terms of actually making real decisions, I think the actual decision making is probably still firmly within the grasp of the clinician, but that doesn't mean that there aren't massive opportunities from these kinds of technologies to assist the clinicians and actually improve availability of healthcare in that way instead.

Chris - One of the criticisms though, Peter maybe you can answer this, is this whole question about data security, because on the one hand we're saying these systems are driven by data but that depends on people being willing to share their data and if people don't trust data holders, and we've had a lot of examples of this going wrong in recent years and people are becoming very data aware now, it could stumble at the first hurdle.

Peter - Yeah, there's two initial issues there: one with explicit and implicit use of data. If you actually sign something off at least you've got a choice, but there is data out there that's probably being implicitly used without your choice. And then there's a level of anonymisation: if you've got a very rare disease in a particular location then it's difficult to anonymise, but if you're a general member of the population anonymisation’s easy. But in the end it comes down to trust, personally, and you know I'm pretty... a technophile here, I think the data will lead to better outcomes in time, I think. And I know there'll be doctors listening to this. There are misdiagnoses going on, and if that could be reduced in time, which I believe it can be, we might be looking 10 or 20 years out, it will be beneficial to the population. So it all depends on one's view. In the end, we've had this conversation many times Chris over the last four years, if you don't like being tracked don't switch on a smartphone, you know, don't use tech.

Chris - Last question Ari in 15 seconds, who gets sued?

Ari - That's a very good question and we don't really know, at the end of the day at the moment it's still me.

Chris - You take responsibility for the whole of Addenbrooke’s hospital?

Ari - I'm afraid I do yes.

14:08 - Lettuce picking robot

Lettuce picking robot

with Simon Birrell, Cambridge University

Automation has revolutionised the farming industry, but some crops, like lettuces, have proved tricky to harvest in any way other than by hand. Now that might be about to change, with the help of a new robot. Heather Jameson has been to the Cambridge University Engineering Department to hear how ...

Heather - Billions of lettuces are produced globally every year and every one has been picked by hand. Whilst harvesting of other crops such as wheat and potatoes has for a long time been automated, lettuces have so far eluded automation for various reasons. Firstly, lettuces are very easily damaged and supermarkets have very high standards for what they will accept. Secondly, if you imagine looking out at a field of lettuces all you see is a sea of leaves. It's actually very difficult to pick out individual lettuces even for humans. But now engineers at Cambridge University have developed a robot which they believe is up to the task. At the end of a large robotic arm, the robot has a square cage, big enough for a football, and the cage does the cutting and collecting. The robot also has two cameras to see the lettuces. Simon Birrell showed me how it worked.

Simon - So this cage that you see, this is what we call the end effector at the end of the robot arm. This goes down over the top of the lettuce. At the top we have a valve attached to compressed air. So when the robot decides it's time to grasp, it activates the valve and this soft gripper, which is covered in silicone, comes over and grabs the lettuce and it grabs it gently so that it doesn't bruise it. And then the valve is activated again and this rotary belt goes round and it drives this blade down here, through the stalk of the lettuce and goes through and gives it a clean cut.

Heather - But before the robot picks the lettuce, it first has to find it in the confusing sea of leaves and then decide whether the lettuce is good for harvesting. The robot has been trained to identify the lettuces using neural networks. A neural network is a computer system which is inspired by the way the human brain works.

Simon - It classifies the lettuce into good - suitable for harvesting now, immature - which is a lettuce that we’ll come back for later when it's grown a bit, and a diseased lettuce. It's very important not to pick a diseased lettuce, because if you do, you can contaminate the end effector and then when you move it to the next lettuce, you run the risk of spreading mildew or whatever the infection is.
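The three-way decision Simon describes can be written down as a small lookup. This is only an illustrative sketch: the names `LettuceClass` and `plan_action` are hypothetical, and the neural network that actually assigns the label is not shown, since the interview gives no detail about it.

```python
from enum import Enum


class LettuceClass(Enum):
    """The three labels mentioned in the interview."""
    GOOD = "good"
    IMMATURE = "immature"
    DISEASED = "diseased"


def plan_action(label):
    """Turn a classifier label into what the robot should do next (hypothetical helper)."""
    if label is LettuceClass.GOOD:
        return "harvest"          # suitable for picking now
    if label is LettuceClass.IMMATURE:
        return "revisit later"    # leave it to grow and come back
    return "skip"                 # diseased: never touch it, to avoid contaminating the end effector


for label in LettuceClass:
    print(label.value, "->", plan_action(label))
```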

Heather - At the moment the cutting process is not intelligent, it's a pre-programmed process. But in future the engineers would like the robot to also be able to learn to cut more accurately as well.

Simon - The supermarkets are extremely picky about what the cut looks like. So the cut can't be too close to the lettuce head. It can't be too far away. It's got to be at 90 degrees. All of this is nonsense in many ways. I mean, the lettuce still tastes exactly the same. But, yes, we are interested in adding neural networks so that it can learn the best way to cut and continuously improve the way it does it.

Heather - The team had tested the robot in the field. Literally, in a field of lettuces. The robot sits on a rig, powered by a generator and the wheels roll between the rows of lettuces.

Simon - Conditions are totally different from the lab. You have wind, you have dust, you get rained on... everything is bumpy so that all the equipment gets knocked around a lot. So all the kind of fine tuned calibration that you do in the lab is completely useless out in the field.

Heather - In the tests, the robot successfully located the lettuces 91 percent of the time and successfully classified them 82 percent of the time. In terms of speed, the robot is currently about four times slower at picking lettuces than a human. But Simon reckons they can easily reduce this difference by changing to a stronger robotic arm. The current arm moves quite slowly because the cage is quite heavy. But whilst the robot will be able to take over the physical demands of harvesting there will still be a role for humans to play.

Simon - There's always going to be a place for humans - in terms of maintaining the robots, and in terms of managing the robots. Particularly if we do learning. One of the things we're considering is having people monitoring remotely a series of robots through video feeds. If they see the robot making a mistake they can call that out and that data then gets incorporated and retrained into the next iteration of the neural network.

Heather - And if you're worried about the robots taking over, Simon kindly pointed out to me that the cage was the perfect size for cutting off human heads… but he hasn't tested this theory out yet.

19:08 - Meet the plant taking 57 years to flower

Meet the plant taking 57 years to flower

with Alex Summers, Cambridge University Botanic Garden

A giant agave plant at the Cambridge University Botanic Garden is preparing to flower for the first time in 57 years! It belongs to the Asparagus family, and the first hint of the flower appeared on the 19th June. Since then, the flower “spike” has grown a whopping 10 centimetres every day! Adam Murphy spoke to Alex Summers from the Botanic Garden, asking firstly what kind of plant agave is...

Alex - So tequila as a drink is actually fermented from sap taken from agave, a different species to the one we're growing here. We do grow agave tequilana and as you said it takes them a long time to reach a point of flowering. If we were growing it to make tequila, we'd actually cut off this flower spike because we want to get it as large as possible and then we take all the leaves off and it's actually the central crown structure that's actually baked and then fermented to produce the drink.

Adam - What does it look like? What would people see if they went?

Alex - They'd see a large rosette of leaves. So imagine a dandelion with very very thick leaves, and then amplify that up to about two or three metres in size, and then you'll be thinking about the sort of rosette that you're looking at with our current agave that's about to flower now. And then the structure coming out the top of it stands now at about 3.4 metres.

Adam - So that’s a metre above me at least?

Alex - It's absolutely huge yes. So it is well set for being in that desert environment where it really wants to advertise those flowers to as wide an array of pollinators as it can.

Adam - And why is it doing this now, why is it behaving like this?

Alex - Good question. So we've had this since the 1960s and it would have been planted in the house it currently sits in now, about 10 years ago. Prior to that it would spend most of its life in a pot. So probably what's happened is whilst it was in a pot the restricted root room meant that it didn't reach a size at which it could do what it's doing now, which is putting that flower up. So the agave is a plant which comes from deserts. And so what it does is over its life it builds up those limited resources until a point at which it can send up a massive flower spike. So whilst it was sat in our back reserve houses it never reached that point.

When it was planted out and it had the space it has now quickly reached the point at which it can flower and post that it will die.

Adam - And so there'll be no plant left, it’ll just be gone?

Alex - It's an interesting one, what can happen is it can reproduce vegetatively, so it can produce little offsets or pups, but its main aim is to produce lots and lots of seed.

Adam - Do any other plants grow this fast or is this special?

Alex - It’s one of those situations where this plant's been putting a lot of energy and time into producing that flower structure in the very centre of the rosette. But there are many other plants that are able to quickly increase their size like that so if you come at the moment we've got our very large Victoria water lilies and those pads are up and increase in size over the course of a couple of days.

Chris - Alex, can I just ask you a question? Because if this can defer flowering like this, until the time is right for that particular plant, isn't that a bit of a high risk strategy? Because if you want to have sex with another plant you need another plant to be flowering at the same time, and if they're all over the place in the desert and they've got a really ad hoc process like this, where most plants solve that problem by all flowering at the same time, this is not going to happen.

Alex - I wouldn't say plants always solve that problem by flowering at the same time. I think that what you actually see in situations is plants have a fantastic opportunity, unlike us which is they usually produce male and female parts. So what it can do is it can self. It doesn't want to self. You're absolutely right.

Chris - Self pollinate?

Alex - Yeah. The pollen can produce seed from the same plant. That's not always the case but in the case of something like this it's obviously working on the situation that it grows in a massive environment and probably one of the drivers of evolution is that it is a huge ecosystem, which means that there is a likelihood that it's going to be in flower at the same time as another. It’s also setting up so it can present itself to organisms which are able to travel long distances. And that's why it presents itself probably at such a large size.

23:09 - The origin of pointing

The origin of pointing

with Cathal O'Madagain, CNRS Paris

If you stopped someone in the street - or even in the most remote place on Earth - and started to try and communicate with them, the interaction would probably involve a lot of pointing and gesticulating as you both attempt to get your points across. Surprisingly, we’ve probably got our baby selves to thank for the fact that we can all do this, as Ankita Anirban heard from Cathal O’Madagain from CNRS in Paris...

Ankita - Pointing is the universal language. If we're on holiday somewhere and we don't speak the language most of us will resort to pointing and smiling to try and communicate with other people. In fact, pointing is one of the first things that babies learn to do.

Cathal - What's remarkable about pointing gestures is that we use them to coordinate attention. So when you point something out to somebody you are looking at the object, typically, and you get them to look at it and then you look at each other and you acknowledge “Hey look at that, isn't that interesting?” This is crucial for all sorts of human cooperative interaction. Infants all over the world develop pointing gestures in exactly the same way at around the same age.

Ankita - So is pointing a special human trait or do other animals also point?

Cathal - Other animals don't point spontaneously. Apes, our closest genetic cousins, or chimpanzees, they can be trained to point and to understand imperative pointing. This is where you point in order to get something handed to you, for example. But they don't do this spontaneously and in fact they have a very hard time understanding informative pointing. And this is remarkable because adult apes are so much smarter than nine month old infants, but nine month old infants understand this immediately.

Ankita - So when we point at something, what is it that we're doing?

Cathal - What we found was that, rather than pointing gestures working like arrows, people produce pointing gestures as if they're reaching their hands out to, quote-unquote, virtually touch an object in their field of view. So we tested this by looking at the angle of the index finger in a pointing gesture. Most people intuitively think that when you produce a pointing gesture you stretch your index finger out as an arrow that's directed at the object that you're pointing at. But we found that in fact, often the angle or the arrow that stretches out from your index finger leads nowhere near the object that you're referring to, but instead if you photograph somebody from the side while they're pointing at something and you draw a line from their eye through their fingertip this line will very accurately pick out the thing that they are referring to. And this suggests that there's some fundamental connection between pointing and touch and, of course, for us it suggests that pointing may originate in touch. In addition to producing pointing gestures at this funny angle, we also rotate our wrists when we're pointing at things that are out around a corner, for example.

So you imagine if there's a label on a bottle of wine oriented to the right, if you're asked to point at it you might find yourself rotating your wrist clockwise, so that the touch-pad of your finger is oriented to match the surface of the thing that you're pointing at, just as you would if you were reaching out to touch the label. And if you rotate the bottle around in the other direction, so that the label is facing to the left, you might find, if you're pointing with your right hand, that you'll rotate your hand, in a quite awkward movement, anti-clockwise. But again, just in the way that you would if you were trying to reach out to touch that label.
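The side-view comparison Cathal describes, between the line along the finger and the line from the eye through the fingertip, comes down to a little geometry. The sketch below is an illustration only: the coordinates are made up (they are not data from the study), the knuckle is used as a stand-in for the base of the finger, and `angle_off_target` is a hypothetical helper name.

```python
import math


def angle_off_target(origin, through, target):
    """Angle in degrees between the ray origin->through and the direction origin->target (2D side view)."""
    ray = (through[0] - origin[0], through[1] - origin[1])
    to_target = (target[0] - origin[0], target[1] - origin[1])
    dot = ray[0] * to_target[0] + ray[1] * to_target[1]
    cross = ray[0] * to_target[1] - ray[1] * to_target[0]
    return abs(math.degrees(math.atan2(cross, dot)))


# Made-up side-view positions in metres (x = distance ahead, y = height).
eye = (0.0, 1.6)
knuckle = (0.52, 1.64)
fingertip = (0.6, 1.7)
target = (3.0, 2.1)   # chosen to lie on the eye-fingertip line

finger_miss = angle_off_target(knuckle, fingertip, target)  # the "arrow" along the finger
eye_miss = angle_off_target(eye, fingertip, target)         # the eye-through-fingertip line

print("finger line misses by", round(finger_miss, 1), "degrees")
print("eye-fingertip line misses by", round(eye_miss, 1), "degrees")
```

In this made-up layout the finger itself points well above the target, while the eye-through-fingertip line passes through it, which is the pattern described in the interview.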

Ankita - But why would pointing be related to touch? Surely when we point at the moon we're not trying to touch it.

Cathal - So what we think is happening is that infants are exploring their environment by touching objects that they're paying visual attention to. They're looking at let's say an interesting toy and they're poking it with their index finger to see what it feels like. Parents then pay visual attention to what the infants are touching, so they discover that they can get their parents to pay attention to things by touching them. They then extend to objects further away from them, so they make as if to touch things in the distance and their parents then look at the object in the distance, looking for the thing that the infant is trying to touch and this is the origin of the pointing gesture.

Ankita - Well it makes sense that babies are always trying to get our attention and it seems to work. So not only do we all know how to point. We can also all interpret other people pointing very effectively. So what's the takeaway message of all of this?

Cathal - The pointing gesture originates in the coordination of visual attention with touch based exploration and this tells us something about the development of the pointing gesture within our lifetime in early infancy. But you can imagine that it also suggests a story about the evolution of this gesture and of human coordination that humans, as a species, seem to take real advantage of.

29:50 - How do you simulate a universe?

How do you simulate a universe?

with Nick Henden, Cambridge University

Could we be living in a computer simulation? And if so, why would that be, and how could this happen? Well first up, to find out how scientists go about simulating a universe, Heather Jameson spoke to Cambridge University's Nick Henden...

Heather - We humans are a curious bunch. Throughout the aeons we've been striving to improve our understanding of the world around us, and over the last few decades we've developed a really powerful tool to help us achieve this: computer simulations. We use computer simulations across all branches of science and engineering; from aerodynamics to design better cars and planes, to climate models to better understand the effects of global warming. Some people even believe that we might actually be living in a computer simulation.

In 2003, philosopher Nick Bostrom proposed the simulation argument. The argument goes like this. Imagine a super-advanced civilization with immense computing power. If we assume that they had the same thirst for knowledge and understanding of their world as us, then they would probably put that computing power to the task of simulating a whole universe inside their supercomputers for the purpose of research. In fact, they might simulate lots of different universes to understand what differences small changes make. Then what if the simulated civilizations also attained the ability to run their own simulations? Well then we've got simulations within simulations, and quickly the number of simulations vastly outnumbers the one actual reality. If that were true then there could potentially be billions of simulated universes and only one real universe. So in that case statistically we are very unlikely to be living in the one real universe!
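The counting step in that argument can be made concrete with a few lines of arithmetic. This is a toy sketch, not part of Bostrom's paper: it assumes every universe runs the same number of simulations, that the nesting stops at a fixed depth, and that every universe counts as equally likely. The function names are hypothetical.

```python
def simulated_universe_count(sims_per_civilisation, nesting_depth):
    """Total simulated universes when every universe at each level runs the same number of simulations."""
    return sum(sims_per_civilisation ** level for level in range(1, nesting_depth + 1))


def chance_of_being_real(sims_per_civilisation, nesting_depth):
    """Chance of being in the one real universe if every universe is counted as equally likely."""
    return 1 / (1 + simulated_universe_count(sims_per_civilisation, nesting_depth))


print(simulated_universe_count(10, 3))   # 10 + 100 + 1000 simulated universes
print(chance_of_being_real(10, 3))       # one real universe among all of them
```

With just 10 simulations per civilisation and three levels of nesting there are already 1,110 simulated universes, so the chance of being in the real one is 1 in 1,111 under these assumptions.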

Wow. It's a lot to get your head round - especially because I just don't feel like I am a simulation. How would you go about simulating a universe anyway? Well to try and answer this question I spoke to Nick Henden from Cambridge University’s Institute of Astronomy to find out how cosmologists use computer simulations to improve our understanding of the universe. Nick showed me a computer-simulated video of a cluster of galaxies forming. The colours represented temperature, with the red at the cold end of the scale going through blue, to green, and then to white at the hot end.

At the beginning of the video the space is filled by a red gas.

Nick - So what you're seeing at the beginning with all that red material is fairly cold gas, and it's almost uniformly distributed, but it’s not quite.

Heather - The red gas formed into a 3D web - a bit like candy floss - then bright blue spots started appearing in the web.

Nick - There are some areas with more gas than others and the extra gravity of those regions pulls gas from the less dense regions in towards it.

Heather - The bright spots grew larger into clouds of greenish-white gas.

Nick - The regions that are most dense with gas start to grow, and as gas collapses onto those regions, the gas heats up because the pressure is increasing.

Heather - The individual clouds of gas gradually merged together to form larger clouds, until eventually the scene was dominated by large clouds of greenish white gas, with specks of red gas in the dark spaces in between. It looks just like what I’ve seen in science fiction films.

But how is a simulation like this produced? Well first you tell the computer to simulate a very large box - a box that is hundreds of millions of light years across - and you fill the simulated box with particles.

Nick - Those particles represent mainly gas and dark matter. This is mostly the gas that was around in the very early stages of the universe, so that's about three-quarters hydrogen and one-quarter helium. And then you apply the physical laws such as gravity and the laws of motion that describe the fluid motions of the gas, and you also add additional processes such as star formation, black hole physics, and various other physical processes that are important for the formation of realistic galaxies.
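The core idea of filling a box with particles and applying gravity and the laws of motion can be sketched in a few lines. This is a bare-bones illustration, not the code used for the simulations discussed here: it handles gravity only (no gas physics, star formation or black holes), sums forces over every pair of particles, which real codes typically avoid for speed, and the constants `G` and `SOFTENING` and the function names are assumptions in made-up units.

```python
import math

G = 1.0            # gravitational constant in made-up simulation units (assumption)
SOFTENING = 0.01   # stops the force blowing up when two particles get very close (assumption)


def accelerations(positions, masses):
    """Newtonian gravitational acceleration on each particle from all the others."""
    n = len(positions)
    acc = [[0.0, 0.0, 0.0] for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            d = [positions[j][k] - positions[i][k] for k in range(3)]
            r2 = sum(c * c for c in d) + SOFTENING ** 2
            inv_r3 = 1.0 / (r2 * math.sqrt(r2))
            for k in range(3):
                acc[i][k] += G * masses[j] * d[k] * inv_r3
    return acc


def step(positions, velocities, masses, dt):
    """Advance every particle by one time step: update velocities, then positions."""
    acc = accelerations(positions, masses)
    new_v = [[v[k] + acc[i][k] * dt for k in range(3)] for i, v in enumerate(velocities)]
    new_p = [[p[k] + new_v[i][k] * dt for k in range(3)] for i, p in enumerate(positions)]
    return new_p, new_v


# Two equal-mass particles starting at rest fall towards each other.
pos = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]]
vel = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
mass = [1.0, 1.0]
pos, vel = step(pos, vel, mass, 0.1)
print(pos)
```

Repeating `step` many times over many particles is what lets slightly denser regions pull in material from their surroundings, as Nick describes in the video.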

Heather - But the universe isn't a box. So what does the computer do at the edges? The way they get around this problem is by setting it up so that when a particle goes out of the right hand side, it re-enters on the left side. In other words you pretend that the box is replicated on all sides. This works pretty well as long as the box is large enough. But how do cosmologists know that their simulations are accurate representations of reality?
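As a rough illustration of the wrap-around trick described above, here is a minimal Python sketch in one dimension. The box size, positions and velocities are made-up numbers chosen for illustration, not values from Nick's simulations, and real cosmological codes do this in three dimensions alongside gravity and gas physics.

```python
BOX_SIZE = 100.0  # hypothetical box width in arbitrary units


def wrap(position, box_size=BOX_SIZE):
    """Map a coordinate back into [0, box_size), as if the box were tiled."""
    return position % box_size


def step(positions, velocities, dt, box_size=BOX_SIZE):
    """Move each particle in a straight line for time dt, then wrap it."""
    return [wrap(x + v * dt, box_size) for x, v in zip(positions, velocities)]


# One particle leaves through the right edge, one through the left edge,
# and one stays inside the box.
positions = [99.0, 1.0, 50.0]
velocities = [2.0, -3.0, 0.5]
new_positions = step(positions, velocities, dt=1.0)
print(new_positions)
```

The particle that exits on the right reappears near the left edge, and the one that exits on the left reappears near the right edge, which is all that "replicating the box on all sides" means for a single coordinate.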

Nick - You can mimic observations with your simulation. So you could pretend, “if I took my telescope and looked at my simulation, what would I see?” And then you compare that to what we actually see on the real sky, and if they match then you know that you've done a reasonably good job in creating a realistic simulation.

Heather - If we look at a distant galaxy with a telescope we are actually looking back in time. That is because the light from those galaxies takes time to reach us. The further away we look, the further back in time we are looking. So if we look really far we can see what galaxies looked like shortly after the Big Bang. If we look less far away we can see different galaxies at a different point in time, but we can't watch individual galaxies evolve. But with simulations we can fill in the gaps. How far can we turn back the clock with these simulations? Can we simulate the Big Bang?

Nick - With these sets of simulations we typically start in the early universe, somewhere around 300,000 years after the Big Bang, which is not very long at all in astronomy terms. And in fact if we were to go back all the way to the Big Bang, at some point the laws of physics would essentially break down, in which case the simulations would be no good anyway.

Heather - So it seems that computer simulations are helping cosmologists to answer some of life's most fundamental questions. And finally - as someone who is in the business of simulating the universe - does Nick think that we might be living in the matrix?

Nick - I think it's definitely possible. I think that with simulations - the sorts of simulations that we do - the computational power has increased hugely over the past two, three decades. And so it's easy to imagine that, if that rate continues, it will eventually be possible to simulate a universe that is indistinguishable from our own. And in that case it seems somewhat arrogant to assume that we are not in one of those simulations run by some future race.

Predicting the weather

with Chris Bell, Weatherquest

Let’s look at an example of one of the most powerful computer simulations that affects every single one of us every day: the weather forecast. Chris Bell is a forecaster from Weatherquest and a lecturer at the University of East Anglia and he explained to Adam Murphy how this happens...

Chris - Well it starts with observation. So you can't make a good prediction of the weather without observing the weather correctly and that's one of the biggest challenges, actually, in terms of getting a weather forecast correct. So we first take every bit of weather observations we can get. That goes for the traditional weather stations that we would see at the ground, to offshore observations from ships, and aeroplanes as well in the sky, weather balloons and also from satellites. Increasingly so from satellites, actually, and all of this data is fed back into computer simulations and that's really how we forecast the weather.

Adam - What's the simulation actually doing to spit out the forecast?

Chris - So we know, generally, the equations of the atmosphere in terms of how moisture and temperature and air moves around in the atmosphere from basic physics equations. You can put those physics equations into these big computer models and input the data that we observe into those equations and run them out for an hour, and see what the answer is, and then run them for another hour, and see what the answer is and then so on and so on. You can go out to, you know, some computer models go out for a couple of weeks and some out to a couple of months. And as you know, climate models are simulating weather all the way out for several decades.
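The "run it for an hour, see the answer, run it for another hour" loop can be sketched in a few lines of Python. This is only a toy: the single-number "atmosphere", the relaxation rate and the equilibrium temperature are all invented for illustration, whereas a real forecast model steps millions of grid values forward using the full equations of motion.

```python
def step_one_hour(state, dt_hours=1.0):
    """Advance a toy atmosphere by one time step (forward Euler)."""
    relax_rate = 0.1      # per hour, a made-up number
    equilibrium_c = 10.0  # temperature the toy model drifts towards
    tendency = -relax_rate * (state["temp_c"] - equilibrium_c)
    return {
        "hour": state["hour"] + dt_hours,
        "temp_c": state["temp_c"] + tendency * dt_hours,
    }


def run_forecast(initial_state, n_hours):
    """Start from the observed state and step forward one hour at a time."""
    states = [initial_state]
    for _ in range(n_hours):
        states.append(step_one_hour(states[-1]))
    return states


# The "observation" that starts the forecast: 20 degrees C at hour zero.
forecast = run_forecast({"hour": 0.0, "temp_c": 20.0}, n_hours=24)
for state in forecast[::6]:
    print(f"hour {state['hour']:4.0f}: {state['temp_c']:.2f} C")
```

Each step uses the previous step's answer as its starting point, so any error in the initial observation is carried forward through the whole run.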

Adam - Now, when I look at the weather app on my phone, it can say something like a 50 percent chance of rain. But what does that number mean? Is it like a coin flip whether it will rain or not?

Chris - That is a good question. In fact, more and more people nowadays are getting their weather forecast from these mobile phone apps, and there are two different ways you could come up with a percentage in a mobile phone app for a weather forecast. For the first one, you take a computer model, and there are areas of the atmosphere and around the Earth that we don't know the actual weather observation for. So we have to estimate using what we do know.

And so it turns out that tweaking those estimations just slightly matters. For example, maybe there's an area south of Iceland and out to the southeast of Greenland where we don't have observations; if you tweak the estimations you make just slightly, it can have a big impact on the forecast several days in advance. So you do these tweaks and you run the same computer model over and over and over, maybe 50 times, and then you have a whole set of possibilities out to day one, even to day 15 in advance. And if your question is “is it going to rain?”, you can see how many of those computer models are forecasting it to rain and then that's how you get your answer for percentage. The other way of doing it is to look at how much rain is around your area in the computer model: if all of the grid points around your area are covered with rain then that's a high chance of rain, and if only one or two of them are, then you've got a lower percentage.
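Both ways of arriving at a percentage can be sketched in Python. Everything here is an assumption made for illustration: the "weather model" is a stand-in chaotic equation (the logistic map) rather than real physics, and the size of the tweak, the rain threshold and the function names are hypothetical.

```python
import random


def toy_model(initial_value, n_steps=50):
    """Stand-in for a weather model: a chaotic logistic map, not real physics."""
    x = initial_value
    for _ in range(n_steps):
        x = 3.9 * x * (1.0 - x)
    return x


def ensemble_rain_chance(best_guess, n_members=50, tweak=1e-3,
                         rain_threshold=0.5, seed=0):
    """Run the same model many times from slightly tweaked starting values
    and report the percentage of runs that end up 'raining'."""
    rng = random.Random(seed)
    rainy = 0
    for _ in range(n_members):
        start = best_guess + rng.uniform(-tweak, tweak)
        if toy_model(start) > rain_threshold:  # made-up definition of rain
            rainy += 1
    return 100.0 * rainy / n_members


def grid_rain_chance(grid_points_raining):
    """Second method: the share of nearby grid points showing rain."""
    return 100.0 * sum(grid_points_raining) / len(grid_points_raining)


print(f"ensemble method: {ensemble_rain_chance(0.3):.0f}% chance of rain")
print(f"grid method: {grid_rain_chance([True, True, False, False]):.0f}%")
```

Because the toy model is chaotic, starting values that differ by only a thousandth end up in very different places after 50 steps, which is the sensitivity to small tweaks that makes an ensemble informative.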

Adam - How good are our predictions of weather and why is it important to make sure my barbecue doesn't get rained on?

Chris - Say back in the 1950s and 60s, weather forecasters used to sit down and draw up a weather chart, and then they would see new observations come in, and they would draw up another weather chart. They would do that hour after hour and they would look at those weather charts and they would project how the different weather systems were moving and they would use that to make their forecast. When they did that they would be lucky to get the weather forecast right more than a day or two in advance because they simply didn't have all the information that we do nowadays. But, obviously, with the invention of weather satellites we can see clouds moving, we can input that data into these big computer models that we have now and it allows us to make weather forecasts much further in advance and also expect more from the accuracy of the weather forecast.

So I would say nowadays getting the forecast right two to three days in advance you should be able to almost do that to an hourly time step. You know, to get within a couple of degrees of the temperature and wind speed and whether it's raining on that hour or not. Obviously that can be affected a lot by whatever weather pattern you're in but three or four days fairly accurately to the hour. Beyond that, there starts to be the uncertainty that creeps in and once you get past about seven to ten days out things start to get much more tricky.

Adam - And why is it important to have that level of accuracy?

Chris - Well, I mean, you have lots of big organizations making massive decisions on the weather. So let's just take the example of a port. There might be a strong wind event coming up that keeps the cranes from being able to operate. That has an impact on the ships that are offshore that are coming to that port, and on the lorries that are coming from the different distribution places within the country to the port to pick up the goods coming from the ships. If a port can know that there is a disruptive spell of weather coming, say five or six days in advance, that might shut down their operations for 24 hours, being able to adjust what they do leading up to that can make a big difference. And that's the kind of thing that the big companies are doing to try to minimize their loss in terms of costings from the weather forecast.

Adam - Now despite all your hard work, the weather forecast isn't always right. So how might we improve predictions in the future?

Chris - Yeah, that's a really good question because we are starting to see that computer models are getting much much quicker. We are having more and more data that we're putting into them, but actually we're having fewer and fewer weather observations from the surface of the earth and more reliance on satellite. So I think that's probably the way forward in future is to improve our ability to monitor the weather from a satellite. Because it's still quite difficult for a satellite to see through the atmosphere so it can have a good idea of what the temperatures are at the cloud tops and what the temperatures are at the surface of the Earth.

But seeing what's happening in between is quite difficult for a satellite to do and we're constantly improving the ability to do that and I think that's probably the way forward. That, combined with something else that was mentioned in the show: machine learning. So I think machine learning is another thing that's going to be happening in the future for weather forecasting. So you look at loads of different weather variables: wind, temperature, pressure, pressure anomalies and that sort of thing and you put all that data into a machine and let it kind of come up with the solution rather than the traditional style of weather forecasting where you're running a bunch of equations.

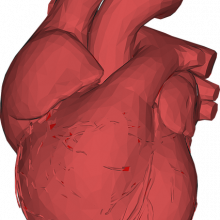

Simulating a human heart

with Elisa Passini, Oxford University

Can we simulate parts of the human body, to speed up the development of new therapies and the discovery of new drugs? Katie Haylor heard how Oxford University’s Elisa Passini is doing this for the human heart...

Elisa - What we do is build a computer model of the human heart, to understand more about how the heart works and what can be done to improve diagnosis and therapies for patients. We are very interested in what is called drug safety. So not really drug discovery, when people try to develop a drug that can treat a specific disease, but after that, when they need to check that the drug is safe for the heart. And this applies to any drug: all drugs on the market need to be tested on the heart and we can do the test on our model.

Katie - I see, so before a drug goes into a human heart you want to make sure that it's safe and, of course, you can't do that by testing it on a human heart. You need to have a model.

Elisa - Exactly. So what is done currently is animal testing and then if a drug proves safe in animal testing it goes through clinical trials. But we would like to go even before this animal testing and use our computer models, which are human based, to try and predict, early on, what would be the effect on our patients.

Katie - You might have an early candidate, put it through your model and say “uh uh” this is not going any further?

Elisa - So they test, let's say, 10 candidates with our models, they see which is the best one and then they move forward, but instead of testing all 10 they make a selection before, based on our results, to reduce the animal experiments down the line. That's also one of our aims, we are really interested in contributing to a reduction of these experiments.

Katie - So tell me about these models, then, how on earth do you build something on a computer as complex as a human heart?

Elisa - You need to do experiments to understand what's going on inside the cell: all the little processes, all the little particles moving and you write that as equations. So in the end our models are a sum of mathematical equations that represent the behaviour of a human's cardiac cell.

Katie - And what kinds of features of these cells or the bits in the cells do these equations model, are they how they're behaving? How they're moving? What are you looking at?

Elisa - It’s more the electrical activity we're interested in and this is because the cardiac side effect when taking drugs is usually an arrhythmia, which is an irregular rhythm of the heart and is due to the electrical activity. So what we study is the ions that are moving in and out of the cell through ion channels. So our equations model these ion channels and the little particles moving in and out that produce currents.

Katie - So these things like what, Potassium?

Elisa - Yeah, sodium, calcium. So we go from the subcellular level to the cellular level and then we can also put many cells together and get to a tissue or an organ level.

Katie - Wow! And all of that is being portrayed in terms of equations? That's a lot of equations!

Elisa - Yes!

Katie - So now you've got this model, how good is it?

Elisa - So I would say it’s really good! What we have done is some evaluation studies with drugs that are already known, because first we need to prove to pharma companies, for example, that these models work. So we took drugs that were on the market, or they were withdrawn from the market because they had side effects for the heart, we tested these drugs and we predicted the risk or safety with almost 90 percent accuracy.

Katie - Thing is, not all hearts are the same, maybe things like age might be a factor, so what kind of heart are you building? Is this a generic heart or are you factoring in variation?

Elisa - Until, I would say, 2010, what people were using for computer models of the cardiac cell was mostly an average model. So we had one model of the heart that was sort of representing everyone. So the method we developed is a sort of random generation of cells based on the idea that we are all similar. So, of course, cardiac cells will have the same mechanisms inside. But, for example, I may have more potassium channels than another person, or sodium channels or calcium channels, and we can model this by assuming a sort of random variability of these ion channels in the membrane.
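The idea of generating many slightly different cells from one average cell can be sketched as follows. This is a hedged illustration, not the Oxford group's actual method: the baseline values, the 0.5 to 1.5 scaling range and the function name `make_population` are all assumptions, and a real model would feed each set of channel strengths into the full electrical equations for the cell.

```python
import random

# Hypothetical channel strengths (arbitrary units) for the "average" cell.
BASELINE = {"sodium": 1.0, "potassium": 1.0, "calcium": 1.0}


def make_population(n_cells, low=0.5, high=1.5, seed=0):
    """Create n_cells variants of the average cell by randomly scaling
    each ion channel's strength by a factor between low and high."""
    rng = random.Random(seed)
    return [
        {channel: strength * rng.uniform(low, high)
         for channel, strength in BASELINE.items()}
        for _ in range(n_cells)
    ]


population = make_population(100)
print(len(population), "virtual cells, for example:", population[0])
```

Every virtual cell keeps the same set of channels, so the mechanisms are shared, while the amount of each channel varies from cell to cell. A candidate drug could then be tested against the whole population rather than against a single average cell.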

Katie - So looking ahead then, could this actually be used in future to make a very specific, individual model of a heart? For instance, if you're trying to diagnose a potential condition in someone?

Elisa - We would like to get there and we actually started some work in this respect. What we do is we collaborate with hospitals and from them we can get images from patients and data about, for example, a genetic mutation or a specific disease. From the images of the patient we can get the geometry of the heart of these patients and then we can incorporate our models into patient specific geometry. And, for example, if someone had a heart attack or has a scar in the heart as a result of this heart attack, then we can include the scar and see what a drug would do to improve this heart condition or what drug wouldn’t be safe because of this scar, for example.

Katie - How much precedent is there for computer modelling different parts of the body? Have other people done it for kidneys, liver, lungs, other organs?

Elisa - Yes, definitely there are models, for example, of bones and muscles. As for organs, I would say the heart is quite advanced, compared to the others, and this is because it’s been around for like 60 years. So there are people doing modelling of kidney, gastrointestinal system, neurons. So, yeah, there are lots of people working on computer models of human body and physiology. And the idea is in the near future, maybe, we'll get to a point of having a whole virtual human, all simulated.

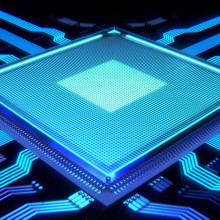

50:25 - Chatty computer chips

Chatty computer chips

with Alex Chadwick, Cambridge University

There has been a huge leap in computing power over the past few decades. And to tell us how this has been achieved, and why computers could be about to get even more powerful, Chris Smith spoke to Alex Chadwick from Cambridge University...

Alex - Yeah, so I think between 1970 and the early 2000s, I think computers got a thousand times faster, which, just for context, if planes had done the same thing we'd now be able to fly from London to New York in 28 seconds.
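The aeroplane comparison can be checked with a quick calculation. The only inputs are the thousandfold speed-up and the 28 seconds quoted above; working backwards gives the present-day flight time the comparison implies.

```python
speedup = 1000               # "a thousand times faster"
flight_seconds_after = 28    # the sped-up London to New York flight

# Undo the speed-up to recover the implied present-day flight time.
flight_seconds_before = flight_seconds_after * speedup
flight_hours_before = flight_seconds_before / 3600

print(f"{flight_seconds_before} seconds is about {flight_hours_before:.1f} hours")
```

That works out at a little under eight hours, which is in line with a typical transatlantic flight.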

Chris - What did it take to realise that?

Alex - Well the biggest thing driving this is actually not really computer scientists. I think the most fundamental reason for the advance is because electronic components could be made that were smaller and faster and cheaper. It's really weird as a computer scientist, what you could do is you could design a processor and then two years later you could use the latest components and it would be twice as fast, half the size and half the cost.

Chris - This is Moore's Law isn't it? Where we see every X number of months a doubling in power and usually the price as well! But where is this all going then? I mean, we're going to reach a point though surely, where we can’t improve with present technology any more than we have already.

Alex - Yeah. So it's really interesting around the early 2000s things started to change a little bit actually because what really went wrong, and there's a number of factors, but the biggest one probably is that when you have all these tiny components and they're getting faster and faster and you’re cramming them into a small space, they get really hot and actually the trouble was, that essentially if you just kept making them faster and faster they would start to melt. And so we no longer really could keep making the computers faster. And so that's why computers actually haven't, sort of, numerically got any faster since the early 2000s. What's happened instead is we've realised that, because the components are getting half the size, we can actually put two computers together in the same space we would previously have one. And so that's the concept, you may have heard of a dual core computer: that's when you essentially have two old computers, effectively, a core, stuck together. Although each of them is roughly the same speed as the previous computers, by working together they can achieve results that are faster.

Chris - I suppose though that in order to support having multiple cores, effectively multiple computers working together, you've got to have the right architecture inside the computer so that you can feed the instructions in and they're divvied up among those cores the right way to make those instructions get followed to produce something useful.

Alex - Yes, and I think this is what's, sort of, has been changing in the last few years. Thing is when people first started putting multiple computers together they more or less didn't think about that. They more or less just took two existing computers, bolted them together, changed nothing and expected that to do well. And for two it kind of works. The trouble is sort of nowadays we're getting more and more computers stuck together, I think you can probably buy 16…

Chris - Yeah, the server that's running the Naked Scientists website has got some crazy number, it's like 24 cores…

Alex - Yeah, yeah, yeah, exactly.

Chris - Just amazing to think you can do that.

Alex - They're really good individually, these cores at working on their own problems and that's kind of what they were designed for so that makes sense. But when you stick them together, it's like if you have you know multiple people working in a team. You know, they need to communicate and work together. And the more of them you have actually the more communication you have. You know in a business with hundreds of employees there's gonna be people all the time in meetings constantly coordinating. It's the same with computers as we get to, you know, 24 cores or more, we have to communicate between them.
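One way to see why communication grows faster than the number of cores is to count how many distinct pairs of cores could need to talk to each other. This is a worst-case sketch that assumes every core may need to talk to every other core; real chips use various interconnect designs, so treat the numbers as an illustration of the trend rather than a description of any particular processor.

```python
def pairwise_links(n_cores):
    """Number of distinct pairs among n_cores, i.e. n * (n - 1) / 2."""
    return n_cores * (n_cores - 1) // 2


for n_cores in (2, 4, 16, 24):
    print(f"{n_cores:2d} cores -> {pairwise_links(n_cores):3d} possible conversations")
```

Going from 2 cores to 24 multiplies the number of cores by 12 but the number of possible conversations by 276, which is the "everyone is constantly in meetings" problem described above.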

Chris - Because you're working on something which is hopefully going to mean that they are less antisocial and they get on better, these cores, and communicate better. So is that the linchpin?

Alex - We thought “what would we do differently if we were redesigning the core from scratch”? Rather than just bolting existing ones together - let's throw that design out the window - and say okay what would we do differently today, knowing that the computers of the future will have many cores? And so we have designed a sort of sociable core, if you like. Yes. So one that is capable of working together all the time. And so these cores are able to do computations at the same time as sort of having a natter to their mates and sort of constantly talking about what is happening on the calculations and so on. Working together to solve the problem is sort of fundamental to the design.

Chris - And how much faster will your architecture be?

Alex - It's very difficult to answer that question because essentially the individual cores are worse because as a trade off for their…

Chris - There's an overhead, because of their being more sociable they get distracted more often.

Alex - Exactly, yes they're all having a natter, exactly. So I think it really depends on the problem. So like in the previous interview we heard about the heart simulation. So in that example, you can imagine each core sort of handling one cell of the heart and then having a natter about, you know, what's happening to that cell to the next core.
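The "one core per heart cell" picture can be sketched in Python as a row of cells where each one updates its value using only what its immediate neighbours tell it. This runs on a single processor and only imitates the communication pattern; the coupling strength and the idea of a single number per cell are simplifying assumptions, not details of Alex's architecture or of the heart models.

```python
def exchange_and_update(values, coupling=0.25):
    """One round: every cell hears from its left and right neighbours,
    then nudges its own value towards theirs. Cells at the ends of the
    row treat themselves as their own missing neighbour."""
    n = len(values)
    new_values = []
    for i in range(n):
        left = values[i - 1] if i > 0 else values[i]
        right = values[i + 1] if i < n - 1 else values[i]
        new_values.append(values[i] + coupling * (left + right - 2 * values[i]))
    return new_values


# A single "excited" cell in the middle of a row of resting cells.
cells = [0.0, 0.0, 1.0, 0.0, 0.0]
after_one_round = exchange_and_update(cells)
print(after_one_round)
```

Each cell only ever needs news from the cells next to it, so a design in which neighbouring cores can chat cheaply while they compute suits this kind of problem well.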

Chris - So I guess it's going to be a question then of actually writing software and writing systems that will exploit your system to make the most of it. Because if you take your system and just shove the present day operating environment at it, it's just not going to cope so well. But if you've got things written bespoke for it, it's gonna do much better.

Alex - Exactly, and the programmers really need to think in a different way to use it. That's kind of what I'm personally researching.

55:37 - QotW: How do huskies stay cool?

QotW: How do huskies stay cool?

Matthew Hall sweated over this question with Christof Schwiening from Cambridge University...

Matt - Let’s help out our animal lovers and get to the bottom of this hot topic. On the forum, we got a response from Evan who thinks "dogs often shed their hair when the seasons change, adopting a thicker, more insulating winter coat. That allows them to 'insulate themselves from the cold of winter and from the heat of a hot summer's day', but not in the same season." To turn up the heat on finding an answer, I combed through some professionals in insulation, and found Christof Schwiening from Cambridge University.

Christof - Your friends are right, Alex, but there is nothing very special about huskies. Insulation, and fur is a relatively good insulator, has the property of reducing energy transfer across the insulating material in either direction, whether the temperature on one side is colder or hotter than the other.

For example, insulation in the roof of a house will keep it warmer in winter, and cooler in summer. Equally, insulation within a flask will help maintain the temperature of cold or hot liquids put into it. So, by extension, an animal with a furry coat should lose less heat in cold conditions and gain less heat in a hot environment. That is, insulation helps to isolate the animal's own body temperature from the external environmental temperature.

Matt - Well, the problem here is that it conflicts with our own experience, and simply extrapolating our physiology to other animals can be problematic. If you put on a thick winter jacket in the summer you will rapidly overheat - especially if you also do some exercise whilst wearing it.

Christof - What is missing here is the difference between how dogs and humans regulate their body temperatures in hot environments. Our main way of losing heat in warm conditions is through the evaporation of sweat from our skin. Putting insulation over the skin, or indeed just preventing air flowing over it, stops our ability to lose heat and so we rapidly become too hot.

In hot environments, dogs don’t lose much heat by sweating. Like us, they do still lose heat by evaporating water, but that water comes from panting, and fur does not prevent that process. Dogs can also lose heat by lying down on a cold surface, and fur does not prevent that either: the animal’s fur gets squashed flat and loses its insulation properties as the trapped air is pushed out of it.

So, in most mammals fur does not interfere with active heat regulation. Instead, it helps isolate body temperature from a potentially changing external environment and in this case humans are the odd ones out, because we rely mainly on sweating to keep us cool.

Matt - It’s a good thing here the naked scientists don't have to worry about our sweat getting disturbed by clothes. Get excited though, because our next question of the week - from Monique - is pretty sun-sational.

Monique - Can you tell from a painting or a photo, if it’s sunrise or sunset?
