The high-tech scanners that can home in on chemicals produced by cancers, how bats and dolphins share genes for echolocation and why barefoot runners have a smoother track record. Also this week, augment your reality: find out how new technologies can add extra information to the way you see the world by turning a mobile phone into a virtual tour guide or even a pocket mechanic! Plus, how virtual reality worlds are helping to rehabilitate stroke victims and, in a theatrical twist, Dave discovers the workings of a baffling stage illusion in Kitchen Science...

In this episode

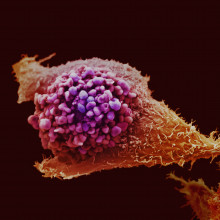

00:11 - Scanning for Cancer’s Biochemical Signature

Scanning for Cancer’s Biochemical Signature

Researchers in the States may have found a way to detect potential prostate tumours using magnetic resonance spectroscopy (MRS). This should lead to fewer false negatives, more precise localisation of tumours and a better idea of how aggressive they are.

Unlike conventional MRI, which looks at the structure of tissues, magnetic resonance spectroscopy analyses their biochemistry. This means it can be used to look for the distinct chemical, rather than structural, signatures of a tumour.

Leo Cheng and colleagues at Massachusetts General Hospital published a study in the journal Science Translational Medicine that builds on some earlier work published in 2005. This earlier work looked at the biochemistry of a tumour and identified a metabolic spectrum for prostate cancers - a series of chemicals produced by the tissue that identifies it as a tumour. Studying the entire suite of metabolites left behind by a cell is known as metabolomics.

With this ensemble of metabolites in mind, they set about scanning five cancerous prostate glands that had been removed from patients. Their scans measured the proportions of these signature metabolites to indicate which regions of the prostate had a higher 'malignancy index', i.e. a higher likelihood of being cancerous tissue. The prostates were then passed on to histopathologists who, while taking great care to record where in the prostate each sample came from, used standard histological methods to analyse the tissue visually and determine where the tumours were located.
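
The article does not give the formula behind the malignancy index, so the following is only a hypothetical illustration of the general idea of turning "proportions of signature metabolites" into a single score per region. The metabolite names, weights and threshold are invented placeholders, not values from the study.

```python
from typing import Dict

def malignancy_index(spectrum: Dict[str, float], weights: Dict[str, float]) -> float:
    """Hypothetical score: weighted sum of each metabolite's share of the total signal."""
    total = sum(spectrum.values())
    if total <= 0:
        raise ValueError("spectrum must contain some signal")
    return sum(weights.get(name, 0.0) * amount / total
               for name, amount in spectrum.items())

# Invented weights: positive for metabolites assumed to rise in tumours,
# negative for those assumed to fall. NOT taken from the paper.
WEIGHTS = {"metabolite_A": 1.0, "metabolite_B": 0.5, "metabolite_C": -1.0}

def flag_regions(regions, weights=WEIGHTS, threshold=0.2):
    """Return labels of regions whose index exceeds an assumed threshold."""
    return [label for label, spec in regions.items()
            if malignancy_index(spec, weights) > threshold]

# Two made-up regions of one gland.
regions = {
    "apex": {"metabolite_A": 6, "metabolite_B": 2, "metabolite_C": 2},
    "base": {"metabolite_A": 1, "metabolite_B": 1, "metabolite_C": 8},
}
print(flag_regions(regions))  # ['apex']
```

Working with proportions rather than raw signal is the design choice worth noting: it makes the score less sensitive to how much tissue happens to fall in each measured region.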

When the results were compared, five out of seven tumours coincided with areas of high malignancy index. The remaining two are thought to have been compromised by lying close to the edge of the prostate, where interactions with air could have altered the metabolomic profile. Overall, the technique's accuracy was over 90%.
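
Two different-looking figures appear here: a per-tumour detection rate (five of seven) and an overall accuracy above 90%. The text does not say how the second was calculated. One common definition, assumed here, counts every assessed region, healthy and cancerous alike, so it can be high even when some tumours are missed. A minimal sketch of both measures:

```python
from fractions import Fraction

def detection_rate(detected: int, total: int) -> Fraction:
    """Fraction of known tumours that coincided with a high-index region."""
    return Fraction(detected, total)

def accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    """Assumed definition: share of ALL classified regions labelled correctly
    (true positives + true negatives over everything assessed)."""
    return (tp + tn) / (tp + tn + fp + fn)

rate = detection_rate(5, 7)
print(f"{float(rate):.0%}")  # 71%
```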

Interestingly, there was also a correlation between the size of the tumour and the magnitude of the malignancy index, suggesting that this technique could not only identify malignant tissue but also give an indication of how aggressive it is. This is only a preliminary study, and the small sample size may lead to an overestimate of how accurate it really is, but these are very promising early results.
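
With only a handful of tumours, any size-versus-index correlation rests on very few points. The sketch below computes Pearson's correlation coefficient for five made-up pairs (not data from the paper). With so few points, a high value can arise largely by chance, which is exactly why small samples can flatter a new method.

```python
import math
from typing import Sequence

def pearson_r(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length >= 2")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

# Hypothetical values for illustration only, NOT measurements from the study.
tumour_size_ml = [0.2, 0.5, 0.9, 1.4, 2.0]
malignancy_idx = [0.1, 0.3, 0.4, 0.7, 0.9]
r = pearson_r(tumour_size_ml, malignancy_idx)
print(round(r, 2))
```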

As they say in the discussion of the paper: "Metabolomic imaging has the potential to detect lesions, guide biopsy, and eventually identify other conditions of malignancy, such as tumour aggressiveness". They also add that it could be adapted to identify other types of cancer.

The echoing links between bats and dolphins

Bats and dolphins may appear to be very different types of mammal - after all, one of them flies and the other swims - but it turns out they have independently evolved exactly the same changes in a gene that allows them to use sound as a way of visualising the world around them.

Both bats and dolphins hunt using echolocation. They emit bursts of high-pitched noise and listen carefully as the sound waves bounce back to them. They use these echoes to build a detailed mental picture of their environment and - hopefully - to pinpoint other animals that will become their dinner.

A vital part of both the bat and the dolphin echolocation systems is a set of tiny sensory hair cells in the inner ear that detect very high-frequency sounds. These cells rely on a motor protein called prestin, which makes them change length in response to sound and so amplifies it.

An international team of researchers have published two papers in the journal Current Biology, uncovering the remarkable fact that the prestin gene has undergone precisely the same changes in DNA sequence in distantly related bats and dolphins.

Many other groups of species have evolved to look remarkably similar despite not being closely related, such as modern-day dolphins and ancient, extinct reptiles called ichthyosaurs. But this is the first time that so-called "convergent evolution" has been detected at the molecular level. The research team were able to build a genetic family tree showing how the changes in the prestin gene built up identically over time in both bats and dolphins.
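
Convergence at the molecular level means that the same amino acid change arose independently in separate lineages. Given aligned sequences plus reconstructed ancestral sequences, the simplest counting step is to flag positions where both lineages moved away from their own ancestor and landed on the same residue. The toy fragments below are invented, not real prestin sequences, and real analyses wrap this step in much more careful phylogenetic statistics.

```python
from typing import List

def convergent_sites(ancestor_a: str, lineage_a: str,
                     ancestor_b: str, lineage_b: str) -> List[int]:
    """0-based alignment positions where both lineages changed away from their
    own ancestral residue AND ended up with the same residue."""
    seqs = (ancestor_a, lineage_a, ancestor_b, lineage_b)
    if len({len(s) for s in seqs}) != 1:
        raise ValueError("sequences must be aligned to the same length")
    sites = []
    for i, (anc_a, a, anc_b, b) in enumerate(zip(*seqs)):
        if a != anc_a and b != anc_b and a == b:
            sites.append(i)
    return sites

# Hypothetical aligned protein fragments, for illustration only.
bat_ancestor     = "MKTLSAV"
echolocating_bat = "MKNLSGV"
whale_ancestor   = "MKTLTAV"
dolphin          = "MKNLTGI"
print(convergent_sites(bat_ancestor, echolocating_bat, whale_ancestor, dolphin))  # [2, 5]
```

Position 6 is not counted: only the dolphin fragment changed there, so it is not a shared independent change.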

It suggests that there may be only one way for mammals to physically evolve the apparatus needed to echolocate. It is remarkable that so many species of bats and echolocating cetaceans (the toothed whales, including dolphins) have taken the same evolutionary pathway towards an identical genetic solution to the challenge of seeing with sound.

The Benefits of Running Barefoot

People who run barefoot learn to minimise impact shock, adopting a different style of running from those in shoes, according to research published in Nature this week. This could help us to understand the impact-related injuries suffered by a high percentage of runners.

Daniel Lieberman and colleagues at Harvard University used kinematic and kinetic analyses to observe runners who were either habitually barefoot or who generally wore shoes. Both groups were asked to run in shoes and barefoot, and high-speed camera footage was taken to observe exactly how their feet moved. They also got volunteers to run over a force plate, to analyse how forces were transmitted during different kinds of running.

There are three ways your feet can land when you're running: a rear-foot strike (RFS), landing on the heel first; a mid-foot strike (MFS), when the heel and ball of the foot land simultaneously; and a forefoot strike (FFS), in which the ball of the foot lands before the heel comes down. Sprinters and habitually barefoot runners seem to use mainly forefoot or mid-foot strikes, while shod endurance runners (and the majority of joggers) use a rear-foot strike.

To understand why we use these different ways of landing, and what it means for injury risk, Lieberman looked at the force profile for each step type. By plotting the forces felt against time on a graph, it was easy to see that rear-foot strike, either in shoes or barefoot, has a large spike of applied force just at the time of landing, while FFS running gives you a very smooth wave, with little or no sudden impact forces - essentially a much smoother ride. This step also helps to lower the body's centre of mass relative to the vertical force, and as such reduces the mean force acting on the feet.
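
The "spike" on a force-time graph is a very rapid rise in force just after contact. One simple way to quantify it, assumed here rather than taken from the paper, is the peak loading rate: the steepest rise in vertical force during the first few tens of milliseconds. The curves below are synthetic shapes in units of body weight, sampled at an assumed 1000 Hz, not measured data.

```python
import math
from typing import Sequence

def peak_loading_rate(force_bw: Sequence[float], sample_hz: float,
                      window_s: float = 0.05) -> float:
    """Largest rise in vertical force per second (body weights/s) within the
    first `window_s` seconds after foot contact, using finite differences."""
    n = min(len(force_bw) - 1, int(window_s * sample_hz))
    dt = 1.0 / sample_hz
    return max((force_bw[i + 1] - force_bw[i]) / dt for i in range(n))

HZ = 1000  # assumed force-plate sampling rate
t = [i / HZ for i in range(250)]  # 250 ms of ground contact

# Synthetic shapes: a smooth half-sine force curve for both strike types, plus a
# sharp early transient (a narrow Gaussian bump) added only for the heel strike.
smooth = [2.5 * math.sin(math.pi * ti / 0.25) for ti in t]
transient = [1.5 * math.exp(-((ti - 0.025) / 0.008) ** 2) for ti in t]
rear_foot = [s + p for s, p in zip(smooth, transient)]
fore_foot = smooth

rfs_rate = peak_loading_rate(rear_foot, HZ)
ffs_rate = peak_loading_rate(fore_foot, HZ)
print(f"RFS {rfs_rate:.0f} BW/s vs FFS {ffs_rate:.0f} BW/s")
```

Both curves reach a similar peak force later in the step; what differs is how abruptly the force arrives, which is what the impact spike represents.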

Landing on the forefoot first therefore helps to reduce the amount of the body's mass that has to come to a full stop with each step. Since a runner strikes the ground several hundred times per kilometre, this makes a significant difference to the likelihood of developing repetitive stress injuries.
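
The number of impacts follows directly from step length: every step, left or right, is one ground contact. The step length and weekly distance below are assumed, illustrative figures for a recreational runner.

```python
def footfalls_per_km(step_length_m: float) -> float:
    """Ground contacts per kilometre, counting every step (left and right)."""
    if step_length_m <= 0:
        raise ValueError("step length must be positive")
    return 1000.0 / step_length_m

def impacts_per_week(step_length_m: float, km_per_week: float) -> float:
    """Total ground contacts over a week's running."""
    return footfalls_per_km(step_length_m) * km_per_week

# Assumed figures: 1.5 m per step, 30 km per week.
per_km = footfalls_per_km(1.5)        # about 667 contacts per km
per_week = impacts_per_week(1.5, 30)  # about 20,000 contacts per week
print(round(per_km), round(per_week))  # 667 20000
```

Even a small reduction in the force of each impact is therefore multiplied many thousands of times over a training week.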

Humans and their ancestors have probably been running ever since we adapted to bipedal locomotion, and only in running shoes for the last 40 years or so. Evidence from the structure of the modern human foot suggests that it's adapted to get the best out of forefoot-strike running, reducing the likelihood of stress injury, and offering a selective advantage. As the incidence of running injuries remains significant despite advances in footwear technology, it seems that even the best shoes may not be as good for you as no shoes at all!

The Fish with wonky mouths

Among the enormous diversity of cichlid fish living in Lake Tanganyika in eastern Africa, one group in particular has evolved a most unusual feeding habit: they sneak up behind other fish and pick their scales off, approaching every time either from the left or right side. You can easily see whether a fish is a lefty or righty by the shape of its mouth, which is hugely lopsided, bending around to one side or the other, like a pair of tweezers with the ends bent over.

Now researchers Thomas Stewart and Craig Albertson from Syracuse University in the US have discovered that these fish are genetically programmed to be left- or right-jawed. Both lefty and righty fish persist in a population, rather than one dominating, because there will always be an advantage for the minority form. If there are more righty cichlids around, the prey fish learn to expect an attack from the right, and so lefty fish can easily sneak in and get a bite to eat.
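
The "advantage for the minority form" is what biologists call negative frequency-dependent selection. The toy model below captures it by letting each morph's fitness rise as it becomes rarer. The fitness formula and the selection strength `s` are invented for illustration, not taken from the study. Starting from a population of 90% lefties, the proportion drifts back towards an even split.

```python
def next_generation(p_left: float, s: float = 0.5) -> float:
    """One generation of a toy negative frequency-dependent selection model.
    Assumption: each morph does better the rarer it is, because prey are
    watching the side most attacks come from."""
    w_left = 1 + s * (1 - p_left)   # lefties thrive when lefties are rare
    w_right = 1 + s * p_left        # righties thrive when lefties are common
    mean_w = p_left * w_left + (1 - p_left) * w_right
    return p_left * w_left / mean_w

def simulate(p0: float, generations: int, s: float = 0.5) -> list:
    """Proportion of left-jawed fish in each generation, starting from p0."""
    ps = [p0]
    for _ in range(generations):
        ps.append(next_generation(ps[-1], s))
    return ps

trajectory = simulate(0.9, 50)
print(round(trajectory[-1], 3))  # 0.5
```

Because whichever form is commoner is penalised, neither can take over; the 50:50 balance is a consequence of the symmetric assumptions in this toy model.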

But it turns out that the situation is more complicated than was originally thought, because some scale eaters start out life with a straight mouth, pointing neither left nor right. More studies are now needed to work out what happens to these young straight-mouths, which don't seem to make it to adulthood. It could be that they aren't good enough at hunting and so don't survive, or their mouths could bend around to the left or right as they grow up.

It just goes to show that, from flatfish whose eyes migrate from one side of the head to the other to human beings whose hearts usually sit on the left, not everything in life is neat and symmetrical.

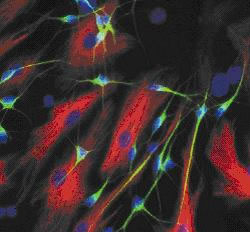

12:05 - The Chemical that Keeps Nerves Alive

The Chemical that Keeps Nerves Alive

with Dr. Michael Coleman, the Babraham Institute.

Ben - Also in the news this week, scientists at Cambridge's Babraham Institute have identified a factor that helps to stop nerves from degenerating. This could lead to better treatments for degenerative diseases, but also better ways to halt the degeneration of a nerve when it gets damaged as a result of an injury or stroke. Dr. Michael Coleman leads the group responsible for the discovery and he joins us now. Hi, Mike.

Michael - Hello.

Ben - So first of all, what does a nerve cell look like?

Michael - Let's start with the cell body - which is essentially the equivalent of what happens in most other cells. In this cell body, you have the nucleus which contains all the genetic material, compared to other cell types the nucleus might be slightly bigger. There's a little bit more metabolic activity and protein synthesis going on in that cell body, but by and large, the cell body is not so different from other cell types. Then we have, coming into that cell body, what we call the dendrites. Now there could be a very huge number of these - literally thousands or tens of thousands of dendrites coming into that cell body, and the job of the cell body really is to integrate the signal that comes from this enormous number of dendrites, and to place what we call an all-or-nothing response. That all-or-nothing response, the electrical activity transmitted to the next cell, then goes down to what we call an axon, and that's the bit we're interested in. There are two things really that are special about the axon. First of all, there's only one of them and this means that this is effectively the most vulnerable part of the neuron because if you lose that axon, you have totally lost the functional capability of that neuron. The second thing that's interesting about the axon is its length. This can be enormously varied between different types of neuron, but in its extreme case in humans, this can be anything up to a meter long. It can go the length of your arm or leg and it can go the length of your spinal cord.

Ben - They certainly do sound like the fragile part, the weak link in the chain. What happens when a nerve becomes damaged?

Michael - We've already pointed out that the axon is very long and clearly, the axon has to be supplied with all sorts of material from that cell body. Most of the proteins, certainly all of the RNA and many of the organelles are made within the cell body, and they have to be shipped out. There's a very intricate system of what we call motor proteins, ATP-using proteins, that are responsible for taking out and controlling the delivery of those proteins and organelles to the further parts of the axon. Clearly, like any pipeline or supply system, that's going to be vulnerable in various ways. So in various disorders, which may be inherited or neurotoxic or viral disorders for example, and protein aggregation disorders, you can have a blockage in this axon which prevents materials from getting to the far end. Sooner or later, that can result in functional impairment and ultimately, the death of that axon.

Ben - You've been able to identify a particular factor that seems to be a "stay alive" signal for the axon itself. How did you find it? Why did you know that there was something like this there?

Michael - That's right. So, what we did was effectively ask the question - among these thousands of different cargoes that are being transported down the axon, is there something which is actually a limiting factor for its survival? A nice analogy here might be a car accident on the motorway, causing a huge build-up of traffic behind it. Among that traffic, among those vehicles caught up on the motorway, there will be an enormous range of reasons why those people are trying to get from A to B. Some will be relatively trivial and not a major problem and some might be a life or death issue, in the most extreme cases. For example, the family trying to get to the beach for a day out will be very frustrated to be held up for half an hour, but it might not be a major problem. But if the ambulance that is trying to get to the accident at the front is held up, then you quickly have a life or death issue on your hands. What we have effectively done is to go in there and say, "What is the first protein that becomes life threatening to that axon if it cannot get through to the far end?"
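
The traffic-jam analogy can be turned into a toy model: once transport is blocked, each cargo already in the far part of the axon runs down at its own rate, and the first one to fall below the level the axon needs is the limiting factor. First-order decay is an assumption here, and the cargo names, half-lives and thresholds are invented for illustration; this is not the group's model.

```python
import math
from typing import Dict, Tuple

def hours_until_critical(half_life_h: float, critical_fraction: float) -> float:
    """Time for a cargo to decay from its normal level to a critical fraction of
    it, assuming supply stops completely and simple exponential turnover."""
    if not 0 < critical_fraction < 1:
        raise ValueError("critical_fraction must be between 0 and 1")
    return half_life_h * math.log2(1 / critical_fraction)

def first_limiting_cargo(cargoes: Dict[str, Tuple[float, float]]) -> str:
    """Name of the cargo that falls below its critical level soonest."""
    return min(cargoes, key=lambda name: hours_until_critical(*cargoes[name]))

# Hypothetical cargoes: (half-life in hours, fraction of normal level still needed).
cargoes = {
    "long_lived_structural_protein": (200.0, 0.5),  # 200 h to threshold
    "labile_survival_factor": (4.0, 0.25),          # 8 h to threshold
    "moderately_stable_enzyme": (30.0, 0.1),        # about 100 h to threshold
}
print(first_limiting_cargo(cargoes))  # labile_survival_factor
```

In this framing the "ambulance" is simply the cargo with the shortest time to its critical level, which can be a short-lived protein even if the axon needs only a modest amount of it.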

Ben - And how did you identify it?

Michael - I should say that the experiments didn't happen in this order, but when we stand back now and take a sort of broader look at it, we can interpret it in this way. Effectively what we did was to cut the axon - clearly, that results in a catastrophic death of the distant parts of the axon. That's something called Wallerian degeneration, which we spend most of our time studying. And then we ask - what, within there, is the first factor that can't get through that kills that axon? To do that, what we have done (not always knowing it at the time) is to replace that factor with something that can substitute for its action. We knew that there was something which would keep those axons alive because experiments at the University of Oxford in the late 1980s indicated that there was a mutant strain of mouse which had acquired a spontaneous and harmless mutation, and this, in experiments where those nerves were being cut, actually delayed the degeneration of those nerves tenfold. Over the subsequent 10 years or so, we and others identified the gene that's underlying this process. In the last 10 years, this has led to us trying to understand why, or how, that protein works.

Ben - So, by identifying the gene in these mutant mice, you were able to work out which protein or at least which family of proteins it was that was responsible? Proteins always have strange names that are very hard to remember. What's this one called?

Michael - Yes. It's called Nmnat2, nicotinamide mononucleotide adenylyltransferase 2.

Ben - So, difficult to say, as well as difficult to remember.

Michael - Yes.

Ben - What does it actually do? We know that it seems to keep the nerve alive, but by doing what?

Michael - That's an interesting question. It certainly has an enzyme activity. It makes a molecule called NAD which the biochemists among you will know is heavily involved in energy metabolism inside the cell. That is, in a way, the most obvious potential consequence of this protein being missing when it can't get into the axon with enough quantity. However, sometimes the most obvious direction to take is not the correct one. We've seen this a number of times and there is some discussion in the field at the moment about whether NAD synthesis is the most important or the key function of this protein that's involved in the axon degeneration or whether it's something else. Maybe it works in reverse or maybe it catalyses a different reaction as well.
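
For reference, the reaction named in the enzyme's full title, the transfer of an adenylyl (AMP) group from ATP onto nicotinamide mononucleotide to give NAD, can be written as below. The double arrow reflects that the reaction can in principle run in either direction, one of the possibilities Michael raises.

```latex
\mathrm{NMN} + \mathrm{ATP} \;\rightleftharpoons\; \mathrm{NAD^{+}} + \mathrm{PP_i}
```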

Ben - Clearly there's still some work to do, but what's the next stage for you?

Michael - So what we try to do often as scientists is to keep away from animal experiments, where we possibly can, by taking cell culture alternatives or work in other organisms such as fruit flies. The work that we've done up to this point has been in a cell culture system. At some point there will be a need to confirm this looking at a mammalian nervous system to know that what we've seen is physiologically relevant. That's a very important step because if we always stick to alternatives, then there is also a risk of diverting the science if we don't actually confirm that we are looking at the right thing. So that's one very important step to take in the near future and another one is to look at what this means in terms of disease. So, we need to actually remove this protein now and ask whether the nerves actually start to die back and whether this mimics certain disease situations.

Ben - But certainly very promising work. I do like the fact that we seem to feature all these really promising things and hopefully, we can follow up with you in the future and find out how it's doing?

Michael - Yes. That would be good.

Ben - Well thank you ever so much, Michael. That was Dr. Michael Coleman. He's based at the BBSRC's Babraham Institute. They've published this discovery in the open access journal, PLoS Biology.

22:48 - What is Augmented Reality?

What is Augmented Reality?

with Dr Tom Drummond, Cambridge University

Helen - Dr. Tom Drummond is a Senior Lecturer at the Machine Intelligence Laboratory at Cambridge University where they're working on some of these technologies, and Tom has very kindly come into the studio today to talk to us about augmented reality. Hi, Tom. Thanks for coming.

Tom - Hello.

Helen - And I think we need to start off with - what is augmented reality? It sounds like something out of a sci-fi movie, but what is it?

Tom - It does sound very science fiction, doesn't it? It's about taking computer graphics off the computer screen and making them available over the natural world, over the real world. Now obviously, the real world doesn't have a computer display capability, so you need to put those graphics there somehow. The first way we thought of doing this was to use a head mounted display - you look through the head mounted display at the world and then a computer can display computer graphics on a part of the world too.

Helen - So you're looking at the world and you're pushing a layer of information of some sort that refers to that world.

Tom - That tells you something about what you want to do with the world.

Helen - What you're looking at...

Tom - So you might want, in a medical application for example, to use it in laparoscopic surgery to be able to see what your instrument is doing inside the patient, where blood vessels are, maybe a tumour that you're trying to target or something like that. So that's one kind of application. There are obviously entertainment applications. There are games available now that use this technology or indeed, there are educational benefits, and so on.

Helen - It seems to me as I browse around the internet that quite recently, the entertainment and advertising side is really developing quite quickly. You can have magazines with augmented reality covers - you wave the magazine in front of a computer, and something pops out on it in three dimensions through your webcam. And it's in sporting events as well...

Tom - Sure, American football for example.

Helen - ...and races and things.

Tom - The first-down line in that is done with augmented reality.

Helen - And that counts as a way of putting information, and advertising as well, into sporting events. But as you said, there are more worthy and useful applications of this technology as well. You say you started off thinking about a head mounted way of doing this. What are the alternatives?

Tom - Well, the thing that we're starting to see now is handheld augmented reality which runs on, for example, a smart phone. In that version, what you see on the screen of the smart phone is what the camera sees of the world. It's a bit like having a video camera or a digital camera where you're seeing the preview of the picture. But what augmented reality does is trap the image in flight between the camera and the screen: you work out what you're looking at and where it is, and you add the virtual elements to the image at the same time, so that you can blend the piece of information that you want to add to the world over the top of it graphically.
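
The final step Tom describes, blending virtual elements into the camera image before it reaches the screen, is standard alpha compositing. A minimal pure-Python sketch on a toy 1x2 frame, assuming a per-pixel opacity mask for the virtual layer (generic compositing, not any particular AR system's code):

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]

def blend_pixel(camera: Pixel, overlay: Pixel, alpha: float) -> Pixel:
    """'Over' compositing onto an opaque background: alpha=0 keeps the camera
    pixel, alpha=1 replaces it with the overlay colour."""
    return tuple(round(alpha * o + (1 - alpha) * c) for c, o in zip(camera, overlay))

def composite(frame: List[List[Pixel]], overlay: List[List[Pixel]],
              mask: List[List[float]]) -> List[List[Pixel]]:
    """Blend a virtual layer onto a camera frame before it is shown on screen.
    `mask` holds the virtual layer's opacity in [0, 1] for every pixel."""
    return [[blend_pixel(c, o, a) for c, o, a in zip(frow, orow, mrow)]
            for frow, orow, mrow in zip(frame, overlay, mask)]

# Toy frame: a yellow label is drawn half-transparent over the second pixel only.
frame = [[(100, 100, 100), (100, 100, 100)]]
label = [[(0, 0, 0), (255, 255, 0)]]
mask = [[0.0, 0.5]]
print(composite(frame, label, mask))  # [[(100, 100, 100), (178, 178, 50)]]
```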

Helen - So it feels like you're holding up a magic spy glass and you're looking through that, and you're learning something else about what you're looking at. I could hold it up to you and it might tell me something about you, perhaps...

Tom - Yes. You could see my name floating above my head or something like that.

Helen - I believe you've been looking at the pros and cons of these different approaches of a head mounted system versus something we can put in our pockets. What are the differences between those two approaches?

Tom - A head mounted display gives you a very immersive feel. When you're looking at the world, the computer graphics are right there in front of your eye. So there's a very strong connection between the virtual elements and the real elements. But then there are some negative consequences as well. It's very difficult to build these systems without latency in them. So when you move your head, the computer graphics might follow a tenth of a second later. Unfortunately, one of the consequences of this is that it can make people feel motion sickness, and it can be very unpleasant to use a system like this. Head mounted systems are also very expensive, which could be a barrier to their use, and they're also very cumbersome. You have to put something that gets between you and the world on top of your head, whereas, by contrast, a phone is a small thing. We all carry one and it has all of the computer hardware inside that you need to run some of these applications. If there's some latency and the picture takes a tenth of a second to catch up as you move it, nobody really minds, because it's not directly affecting what you're seeing and conflicting with what your inner ear is telling you, for example.
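
A back-of-the-envelope estimate shows why a tenth of a second matters so much when graphics are overlaid on your direct view of the world. The turn rate, field of view and display width below are assumed figures, and the pixels-per-degree conversion is a small-angle approximation.

```python
def misregistration_deg(turn_rate_deg_s: float, latency_s: float) -> float:
    """How far (in degrees) the view has turned by the time graphics rendered
    for the old viewpoint reach the display."""
    return turn_rate_deg_s * latency_s

def misregistration_px(turn_rate_deg_s: float, latency_s: float,
                       fov_deg: float, width_px: int) -> float:
    """Approximate on-screen offset using a constant pixels-per-degree estimate."""
    return misregistration_deg(turn_rate_deg_s, latency_s) * width_px / fov_deg

# Assumed: a moderate 100 deg/s head turn, a 0.1 s lag, and a display that
# spans 40 degrees of view across 800 pixels.
err_deg = misregistration_deg(100, 0.1)          # 10 degrees
err_px = misregistration_px(100, 0.1, 40, 800)   # 200 pixels
print(err_deg, err_px)
```

On a phone, the camera picture can be delayed by the same amount as the graphics, so the two stay aligned with each other and the lag shows up only as a slightly sluggish preview.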

Helen - How are we actually seeing this being used in the real world, outside the laboratory? One of the possibilities that I thought was rather exciting was the use of these kind of things for tourists - for going to a site, perhaps of a ruin that's fallen down now, holding up your smart phone or perhaps even wearing your tour guide helmet and goggles, and it would recreate what the acropolis looked like when it was full of people or you know, when it was still there. That seems to me to be quite exciting. Are we seeing this kind of thing actually being used?

Tom - Absolutely. There are applications available now on the iPhone store and on other phones, like the Google Android phones, that use GPS to locate the smart phone and a compass to work out which direction it's pointing in, and then you can display computer graphics like "this mountain is..." whatever it is, or "this building is King's College Chapel...". These systems are appearing now and I think that they're going to become very popular this year. In some sense, the limiting factor for those is that GPS and a compass aren't that accurate, and one of the problems is that if you want to draw your labels very precisely over what you're seeing, they tend to jitter around. Often, if you look at videos of these systems in action, you can see that the labels are jittering around a bit relative to the image.
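
A sketch of how a GPS-and-compass app might decide where to draw a label, and why compass error makes it jitter. The great-circle bearing formula is standard; the linear angle-to-pixel mapping, field of view, screen width and coordinates are simplifying assumptions, not any real app's code.

```python
import math
from typing import Optional

def bearing_deg(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Initial great-circle bearing from point 1 to point 2, degrees clockwise from north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    x = math.sin(dlon) * math.cos(phi2)
    y = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlon)
    return (math.degrees(math.atan2(x, y)) + 360) % 360

def label_x(phone_lat: float, phone_lon: float, heading_deg: float,
            target_lat: float, target_lon: float,
            fov_deg: float = 60.0, width_px: int = 640) -> Optional[float]:
    """Horizontal pixel for a label, or None if the target is off-screen.
    Assumes a linear mapping from angle to pixels (an approximation)."""
    bearing = bearing_deg(phone_lat, phone_lon, target_lat, target_lon)
    offset = (bearing - heading_deg + 180) % 360 - 180  # signed angle in [-180, 180)
    if abs(offset) > fov_deg / 2:
        return None
    return width_px / 2 + offset / fov_deg * width_px

phone = (52.0, 0.0)       # hypothetical phone position
landmark = (52.01, 0.0)   # hypothetical landmark due north of it
print(label_x(*phone, 0.0, *landmark))  # 320.0, centre of the screen
print(label_x(*phone, 5.0, *landmark))  # ~266.7: a 5 degree compass error shifts it ~53 px
```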

Helen - So it's not quite pointing it to King's College Chapel. It's sort of hovering about in the air a bit...

Tom - Hovering around somewhere nearby.

Helen - Yes.

Tom - Now, one of the things that's driven our research into this is using the image that's coming into the smart phone to locate what we're looking at. If you can work out what every pixel in the image from your camera is looking at, then when you draw the graphics on the screen, you're going to be drawing them roughly to pixel accuracy over the top. That tends to lead to a much more stable viewing experience and the graphical elements look very stable on the world, and really look like they belong there, which is actually quite important in terms of how the users respond to these extra elements being displayed.
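
Once image-based tracking has worked out where the camera is, drawing a label at the right pixel comes down to projecting a 3D point through a camera model. A minimal pinhole-camera sketch, assuming a camera that only translates (no rotation), a 500-pixel focal length and a 640x480 image with its centre at (320, 240); all of these values are illustrative.

```python
from typing import Optional, Sequence, Tuple

def project(point_world: Sequence[float], cam_pos: Sequence[float],
            f_px: float = 500.0, cx: float = 320.0, cy: float = 240.0
            ) -> Optional[Tuple[float, float]]:
    """Pinhole projection for a camera at `cam_pos` looking along +z with no
    rotation (a simplification). Returns pixel (u, v), or None if the point
    is behind the camera."""
    x, y, z = (p - c for p, c in zip(point_world, cam_pos))
    if z <= 0:
        return None
    return (f_px * x / z + cx, f_px * y / z + cy)

anchor = (0.2, 0.0, 2.0)  # hypothetical 3D point on an object, in metres
print(project(anchor, (0.0, 0.0, 0.0)))  # (370.0, 240.0)
print(project(anchor, (0.1, 0.0, 0.0)))  # (345.0, 240.0) after moving 10 cm right
```

Re-projecting every frame with the latest camera estimate is what keeps a label glued to the object: when the phone moves right, the label slides left on screen by exactly the amount the object does.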

Helen - Being a humble marine biologist myself, I can only imagine that the technology involved in taking a moving image of the real world and incorporating your position on that image must be extremely challenging. We won't go into the details now, but I'm just wondering: what are the main problems that you have to overcome to be able to put these images together and use, say, a smart phone to shine at something and tell you what it is?

Tom - Sure. Yes, there are a lot of issues. In particular, smart phones are not the most powerful computers available, and so a lot of effort has to go into shrinking the algorithms down so that they can run within the computing capacity of a smart phone. When you're talking about the data from a camera, there's actually a huge flow of data coming out of the camera of the smart phone. So it's actually a serious issue to be able to process that in time, to be able to work out where you are and what you're looking at.
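
A quick calculation gives a feel for the "huge flow of data". The resolution, frame rate and one byte per pixel (8-bit greyscale, as many feature trackers use) are assumed figures.

```python
def camera_bytes_per_second(width: int, height: int, fps: float,
                            bytes_per_pixel: float) -> float:
    """Raw data rate coming off the camera sensor."""
    return width * height * bytes_per_pixel * fps

def per_frame_budget_ms(fps: float) -> float:
    """Time available to process one frame if tracking must keep up with the camera."""
    return 1000.0 / fps

# Assumed: 640x480 video at 30 frames per second, 1 byte per pixel.
rate = camera_bytes_per_second(640, 480, 30, 1)  # 9,216,000 bytes/s
budget = per_frame_budget_ms(30)                 # about 33.3 ms per frame
print(rate, round(budget, 1))
```

Everything, from finding features to estimating the camera position to drawing the graphics, has to fit inside that per-frame budget on a phone's processor.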

Helen - And finally, I think one thing that seems to me to be very clever use of this is to communicate expertise, to be able to transfer yourself into another place, and almost get someone else's brain on the case. Can you tell us about that quickly?

Tom - Sure, yes. That's one of the systems we developed and really, that came from an occasion where I was phoned up and asked, when a car is out of water, where do I put the water in for the windscreen wipers? I'm standing there with my eyes closed, trying to picture the engine bay of the car, thinking - well, at the back on the right, there's a translucent white bottle there somewhere... And I was thinking, if a person could just take a photo with their phone and send it to me, I could draw an arrow and say, "It's here." And then even better, when that photo goes back to them, if the arrow stays pointing at the image of the water bottle when they move their phone, that would be brilliant! And in fact, some very clever people in my lab built a system that did exactly that. So, what it does is extract information about what it can see, for example the engine bay of your car, and then in real time it builds a 3D model of the things that it can see and calculates at the same time where the camera is moving. All of this information together is used to help a remote expert place information into the scene that will help the local user solve the problem that they have.
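
An arrow drawn on a flat photo only stays attached to the bottle as the phone moves if the system knows where that spot is in 3D. One generic way to recover depth from two viewpoints is triangulation; the sketch below uses the midpoint method on two viewing rays. It assumes camera centres and ray directions are already known from tracking, and it is not necessarily the method the lab's system uses.

```python
from typing import Tuple

Vec = Tuple[float, float, float]

def _sub(a: Vec, b: Vec) -> Vec: return tuple(x - y for x, y in zip(a, b))
def _add(a: Vec, b: Vec) -> Vec: return tuple(x + y for x, y in zip(a, b))
def _scale(a: Vec, k: float) -> Vec: return tuple(x * k for x in a)
def _dot(a: Vec, b: Vec) -> float: return sum(x * y for x, y in zip(a, b))

def triangulate_midpoint(c1: Vec, d1: Vec, c2: Vec, d2: Vec) -> Vec:
    """Midpoint of the shortest segment between two viewing rays, each given by
    a camera centre c and a direction d. Raises if the rays are parallel."""
    w0 = _sub(c1, c2)
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel; move the camera sideways")
    s = (b * e - c * d) / denom   # distance parameter along ray 1
    t = (a * e - b * d) / denom   # distance parameter along ray 2
    p1 = _add(c1, _scale(d1, s))
    p2 = _add(c2, _scale(d2, t))
    return _scale(_add(p1, p2), 0.5)

# Hypothetical set-up: two camera positions 1 m apart, both looking at a point 5 m away.
point = triangulate_midpoint((0, 0, 0), (0, 0, 1), (1, 0, 0), (-1, 0, 5))
print(point)  # (0.0, 0.0, 5.0)
```

With noisy real measurements the two rays rarely meet exactly, which is why the midpoint of their closest approach is used rather than an exact intersection.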

Helen - Fantastic! I know, next time I need to refill my water in my car, I would love to have a gadget like that on hand. Thanks ever so much Tom for giving us a great introduction to the world of augmented reality, explaining how machines can recognize and track reality. He comes from the Machine Intelligence Laboratory in Cambridge University.

32:51 - Rehabilitation in Virtual Reality

Rehabilitation in Virtual Reality

with Dr. Paul Penn, University of East London

Meera - This week, I'm at the University of East London which is located in Stratford. I've come along to their psychology department's virtual reality lab and with me is Dr. Paul Penn, lead researcher of the virtual reality research group here. Now Paul, some interesting work you've been doing here is using things like virtual reality in order to rehabilitate brain injured patients and stroke patients...

Paul - Yes, that's right. We're really interested in looking at VR as a means to improve the lot, in terms of rehabilitation and assessment of people that have suffered a brain injury. A lot of brain injured patients, as part of their recovery, will spend a great deal of their time completely understimulated and not officially in therapy and things like that. That's really a problem for patients from a neuropsychological perspective because the way the brain responds to injury is determined by the environment in which you're trying to recover. So in other words, if you have a very stimulated environment, the chances of you getting better functional outcomes and better recovery from the injury will be that much better. So what we're really looking at is using VR as a means to enrich environments post brain injury.

Meera - What aspects of brain function do you focus on with brain injured patients?

Paul - It tends to be things like memory. So we'll look at how well people are going to remember route information for example. We look at how well people can remember objects they see in an environment. We also look at the way people can remember to do things at some point in the future, what we call prospective memory. So that's things like remembering to close the front door after you've opened it, remembering to attend appointments at certain times. Very simple things that we take for granted, but are actually very pervasive in everyday life. And these are the kind of problems that, because they're so intertwined with everyday life, you can't really assess properly in the lab, and that's what VR gives you. It gives you that chance to put a real world scenario into the lab.

Meera - The key point about the virtual environments that you create here is that they can be used simply on laptops and desktops, which must make them much more accessible?

Paul - Yes, absolutely. This is a really important part of our remit. One of the fundamental criteria that we use when looking at VR is: will this run on just an average, modest-spec PC? What we tend to use now is what we call a windows-on-world system, where the virtual world just appears on a computer screen. So it probably looks like a computer game, the kind you or I would play on a PC or a PlayStation 3. The difference is that our environments have a purpose other than entertainment.

Meera - So now, we've got a laptop set up in front of us, and it's got a particular virtual environment on it, which is a virtual bungalow...

Paul - So what you can see here is a simple, four-room bungalow with a hall. The patient's task here is to help the person who owns this virtual bungalow move to a larger bungalow. The person's task is to go through these rooms (first we've got a hall, followed by the lounge) and allocate furniture to the new rooms. So they're engaged: they're searching around the existing bungalow, looking for items of furniture that, for example, belong in the hall. While they're doing this, what we actually have is a series of three memory tasks. You can think of the removal task as a kind of distraction task, essentially because that's the way memory works in the real world. It would be very easy if all we ever had to remember was just what we have to remember, but the problem is we have all these distractions around us all the time, and it's how well we can filter out those distractions that actually gets to the crux of the matter. That's really what this environment is assessing, with the removal task as the distraction task. What we're actually looking for is how well they can remember to do three different things.

Now, you might remember that I told you that remembering to do things at some point in the future is what we call prospective memory in psychology. Broadly speaking, there are three types of prospective memory. First of all, we have what we call "event-based prospective memory", which is generally triggered by seeing something in the environment. So, in the virtual bungalow we have here, as participants stroll around the house they have to look for glass items, and when they see one, they have to remember to put a fragile notice on it, for the very simple reason that we don't want the removal men manhandling and breaking it. The second type of prospective memory is what we call "activity-based". This occurs when an activity you perform itself serves as a cue for the memory. So for example, if you turn an oven on, that's also your cue to turn the oven off again, because you performed an action which should then prompt another memory to perform the reverse of that action. The task we have in the virtual bungalow here is that they have to remember to close the kitchen door after they've opened it, simply because we've got a cat, a virtual cat if you like, in there, and the cat escapes if they don't. The third type of prospective memory is what we call "time-based". This is the category of memory whereby you have to remember to do something at a certain time.

Meera - So if somebody's got a meeting or an appointment, they need to remember to do that...

Paul - Yes, exactly. Or remembering to tune in to a radio show, for example. The idea here is that the person has to open the front door every five minutes to let the removal men in.

Meera - With these three tasks in action when someone's in this environment, what are you specifically looking for?

Paul - The extent to which people have actually remembered to perform the tasks. So, how many times out of the possible three have they remembered to open the front door for the removal men? How many of the fragile items (I think it's about eight) do they remember to put fragile notices on? Looking at that kind of data gives us an idea of their memory profile, and from that you can extrapolate what kind of problems they might have in everyday functioning.
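The scoring Paul describes, counting how often each kind of reminder was acted on, can be sketched as a simple proportion per memory type. This is a hypothetical illustration rather than the UEL group's actual scoring: the class and function names are invented, and the counts are made up (the interview gives three front-door opportunities and "about eight" fragile items, but no figure for the kitchen door).

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class TaskResult:
    memory_type: str    # "event", "activity" or "time" prospective memory
    opportunities: int  # how many times the cue or deadline came up
    completed: int      # how many of those the participant acted on


def memory_profile(results: List[TaskResult]) -> Dict[str, float]:
    """Proportion of opportunities acted on, per type of prospective memory."""
    profile: Dict[str, float] = {}
    for r in results:
        if r.opportunities <= 0 or not 0 <= r.completed <= r.opportunities:
            raise ValueError(f"inconsistent counts for {r.memory_type!r}")
        profile[r.memory_type] = r.completed / r.opportunities
    return profile


def weakest_area(profile: Dict[str, float]) -> str:
    """The memory type with the lowest score: a hint at where prompts may help most."""
    return min(profile, key=profile.__getitem__)


# Made-up counts for one participant, purely for illustration.
session = [
    TaskResult("event", opportunities=8, completed=6),     # fragile notices on glass items
    TaskResult("activity", opportunities=4, completed=2),  # closing the kitchen door
    TaskResult("time", opportunities=3, completed=1),      # letting the removal men in
]
profile = memory_profile(session)
print(profile)
print(weakest_area(profile))
```

Scoring each type as a proportion rather than a raw count keeps tasks with different numbers of opportunities comparable.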

Computer generated voice - "...Look for items and furniture to be moved into the hall..."

Meera - I'm just having a go on this now and I'm walking through the hall; it's reasonably easy to move around. I'm just using the arrow keys on a keyboard to move around, and then the mouse buttons to open doors and pick up items. So I guess that's quite crucial, making it easy to use.

Paul - Yes, the interface is really important obviously because what we're looking at here is potentially using this environment with people who may not just have memory problems. They might also have problems with their physical mobility or their dexterity.

Meera - Having actually tried this environment out on various stroke patients or brain injured patients, what have you found to be the improvement?

Paul - What we tend to find with this task (we used it on stroke patients, for example) is as you would expect, really. They're impaired in all three types of memory, and they're particularly impaired on the time-based task. The time-based task tends to involve what we call self-initiated retrieval, in that there are no prompts in the environment. You have to remember to provide your own prompt, which is to look at the clock, and we find that people who have had a stroke often struggle with this kind of behaviour. It's very, very difficult for them to self-initiate.

Meera - What can you then do with this information to improve their condition or improve their memory?

Paul - What this kind of information can do is allow the rehabilitation professional to orientate the rehabilitation very precisely, to address the problems that person has. So for example, if they just have a problem with time-based retrieval, there's technology you can use, like personal organisers or maybe iPods, to provide prompts at certain points in the day for critical activities. Having had someone interact with this environment, you can get an indication of the kind of prompts they will need to offset their memory problems.

Ben - That was Dr. Paul Penn from the University of East London, taking Meera Senthilingam on a virtual experience, to show how simulated versions of real environments can be used to monitor and rehabilitate patients that have suffered strokes or brain injuries.

40:26 - Augmented Reality in Space

Augmented Reality in Space

with Luis Arguello, European Space Agency

Ben - Now, when something needs changing or repairing on the International Space Station, astronauts need to open a printed manual or look at a laptop to find out what it is that they need to do. But that's not necessarily easy when everything is floating about or when you're working in a confined space. So what's the solution? Well, the European Space Agency are developing a system called WEAR, which is short for WEarable Augmented Reality. It's a headset that superimposes instructions and information onto the thing you're looking at. Luis Arguello is one of the principal investigators behind the new system, and he joins us on the line now. Hi, Luis.

Luis - Hello. Good evening.

Ben - So, what's the main point behind the WEAR system?

Luis - Well, this is just a tool to help the astronauts perform their activities onboard with more accuracy and in less time. The thing is, as you mentioned before, the astronauts are floating in space. They have to perform different tasks: experiments and maintenance. For things which have been there for a long time, they use paper instructions, and for one-off tasks, they use the laptop to see what they have to do. And I don't know if you've seen people working in space, but when they move around, they are floating and they have to help themselves move around with their hands, and restrain themselves with their feet to the floor. So it's a bit difficult to look at the laptop or hold the papers.

So the idea is that you have this system that you put on your head, which is connected to the computer, and then you talk to it while you perform your task, and it gives you the instructions for what you are supposed to do. What we were trying to do is help the astronauts identify small elements within a very complex structure, so they don't lose too much time trying to find the little valve they have to open or close, or the thing they have to replace. That way, they can do things more precisely and also save some time.

Ben - So, in order to build this, did you need to build special equipment for it or can you actually use kit off the shelf?

Luis - We did use off-the-shelf elements, but it was mainly by accident. I've been collaborating with Frank De Winne, the commander of the space station, who was onboard for six months. He's been working with our section in the Space Agency for many years, and I invited him to review the requirements for the first WEAR tool we were developing. Well, he told us, "I'm going onboard and I'd like to take one with me." Usually you develop custom equipment to fly onboard, to meet the safety standards and to make it more operable onboard. In this case, we didn't have the time, so we built this prototype (it is only a prototype) to show how helpful it would be to have this kind of system onboard, and also to assess its usability. We had very little time, but we still had to do the whole development, the safety assessment with NASA, and the whole process of integration testing. So it was a very challenging project.

Ben - So, once they actually put it on and presumably calibrated it for each person, did they find it really helped?

Luis - The main problem, as you mentioned, is the calibration. Because the system was off the shelf, we couldn't do much to make the adjustment simple, so it takes a bit of time to get the images overlaid correctly on what you're looking at. But after the first calibration is done, they find it very convenient and easy to use. Well, you need some voice training, because the system is guided by voice. We made an assessment and gave them a questionnaire, and usability was one of the points covered. He was happy.

Ben - So, it sounds like there's quite a long way to go. This was just a prototype with off the shelf kit, but still very promising. What's your next step?

Luis - Well, our friend Frank, the commander of the ISS, says he's happy with it and he would like to have a second version. So we have more people, more colleagues, working on human interfaces onboard. We work together with NASA because on the ISS we have many partners: Americans, Russians, Europeans, Japanese. So if you want to put something onboard, it has to be agreed with all of the people working on the ISS. Now, if we want to use it for good, in the next phase we need to improve the size, calibration and usability, and try to integrate it more with other systems onboard the ISS: the inventory management system and the user interfaces we have onboard.

Ben - So, there is definitely a lot of work to do. Well thank you ever so much for joining us and for sort of filling us in on what could be a very nice new way to stop bits of paper and Haynes manuals essentially floating around the air. So, thank you very much, Luis.

Luis - Okay. Thank you for the invitation.

Ben - That was Luis Arguello from ESA, explaining how wearable augmented reality systems could greatly improve efficiency on the International Space Station, eventually doing away with the need to have paper manuals floating around.

What’s the point of keeping a nerve cell alive without an axon?

We put this question to Dr Michael Coleman:

Michael - That's a very good question. We cut axons in a culture dish because that gives a very well defined beginning to the degeneration period, and it gives us good control over when that degeneration starts. But there's very good evidence now that a similar mechanism of degeneration takes place in several neurodegenerative disorders: motor neuron disease; glaucoma, where pressure in the eye actually causes the axons to degenerate; and probably Alzheimer's disease too. So we're using the cutting model as a model for what's happening in the neurodegenerative disorders. A good analogy, going back again to the traffic hold-up, would be the difference between actually closing a motorway, so you totally block it (that would be the cut), and restricting the traffic, for example to one lane or with a speed limit. The type of hold-up is quite different, but the particular traffic that's affected by it is actually going to be quite similar.

Can augmented reality help with forensic reconstruction?

We put this question to Dr Tom Drummond:

Tom - Well, this business of taking the real world and building virtual models of it is something we also work on. In the context of computer vision, that's called reconstruction. And indeed, one of the things that we do in the lab, for the purposes of producing content for augmented reality, is to have a system whereby you can put an item in front of a webcam, rotate it slowly in front of the camera, and the computer will automatically build an accurate 3D model of what it's looking at. Now, that's at the small scale, but people do work on building larger systems for forensic purposes as well.

Helen - So there you go. It could actually be real. Excellent. Thanks very much.

Ben - Well, that's quite nice to know. It does look absolutely incredible whenever they do it on TV. A bit like their incredible ability to reconstruct things from a reflection in a raindrop on a window somewhere, from which, of course, they can read car number plates or get very accurate pictures!

56:37 - How cold can it be before evaporation stops?

How cold can it be before evaporation stops?

We put this question to John King, from the British Antarctic Survey in Cambridge:

John - Even when it's very cold, washing will still dry, but it may dry so slowly that it really just isn't worth it. The reason washing dries is because water evaporates from it. If a wet surface is in contact with the air, some molecules of water will leave the surface and go into the air, but at the same time, molecules of water vapour from the air will be coming into the surface. Eventually, it will reach some kind of equilibrium where the amount of water leaving the surface is the same as the amount coming in. We then say that the air is saturated with water, and once the air is saturated, no more [net] evaporation can take place. Now, if we look at the basic physics underlying this, we find that the amount of water that air can hold when it's saturated depends very strongly on temperature, and the warmer the air is, the more water it can hold. So, evaporation tends to proceed much more quickly when it's warmer than when it's cold. But even when it's quite cold, as long as the air isn't saturated, your washing will dry, but it may dry very, very slowly, and it may rain before it gets dry! In general, we don't hang washing out to dry in the Antarctic because it is so cold that things would take such a long time to dry. Maybe on a really nice sunny day in the middle of summer, you might get the tea towels dry, or something like that.

Diana - Evaporation does require energy, and the warmer the air, the more energy there is to remove dampness from your washing. But as our forum-goer Eric Taylor said, it has more to do with the relative humidity than the temperature. So if you live in a dry but cold area, you might be better off hanging out your washing than if you were in a hot but humid country. Something similar happens in the Antarctic where, in a region called the Dry Valleys, there is no ice or snow on the ground because whatever does land there sublimates directly into vapour.
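John's point that saturated air holds far more water when it is warmer, and Diana's point that relative humidity matters more than temperature, can both be put into rough numbers. This is an illustrative sketch, not something from the programme: it assumes a Magnus-type approximation for saturation vapour pressure (one commonly used coefficient set, over liquid water) and treats the vapour pressure deficit, the gap between saturation and the air's actual vapour pressure, as a crude proxy for drying power. The function names and the example weather values are hypothetical.

```python
import math


def saturation_vapour_pressure(t_celsius: float) -> float:
    """Magnus-type approximation (hPa) over liquid water.

    Coefficients 6.112, 17.62 and 243.12 are one commonly used set; over ice,
    below 0 degrees C, a different set applies and this is only approximate.
    """
    return 6.112 * math.exp(17.62 * t_celsius / (243.12 + t_celsius))


def vapour_pressure_deficit(t_celsius: float, relative_humidity: float) -> float:
    """How far the air is from saturation (hPa); relative_humidity is a fraction 0..1.

    A rough proxy for drying power: net evaporation stops when this reaches zero.
    """
    if not 0.0 <= relative_humidity <= 1.0:
        raise ValueError("relative_humidity must be between 0 and 1")
    return saturation_vapour_pressure(t_celsius) * (1.0 - relative_humidity)


# The water vapour that saturated air can hold rises steeply with temperature.
for t in (0, 10, 20, 30):
    print(f"{t:>2} C: saturation vapour pressure {saturation_vapour_pressure(t):.1f} hPa")

# A cold, dry day versus a hot, humid one (illustrative values).
cold_dry = vapour_pressure_deficit(5.0, 0.30)
hot_humid = vapour_pressure_deficit(30.0, 0.90)
print(f"cold and dry: {cold_dry:.1f} hPa, hot and humid: {hot_humid:.1f} hPa")
```

With these inputs the cold, dry day has a deficit of about 6.1 hPa against about 4.2 hPa for the hot, humid one, in line with the forum point; in practice wind, sunshine and the fabric itself also affect how fast washing dries.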
